Medium


Image Credit: Medium

4 Questions to Unlearn What You Know about AI

- The first lecture of the Human and Machine Intelligence course explored foundational questions that challenge our assumptions about AI.

- These questions included "Can machines think?", "What is intelligence?" and "Why is AI harder than we think?".

- Examples of generative AI discussed include Google models that can interpret handwritten equations, GPT-based conversational AI that may help reduce belief in conspiracy theories, and Google Translate, which is adept at bridging language barriers.

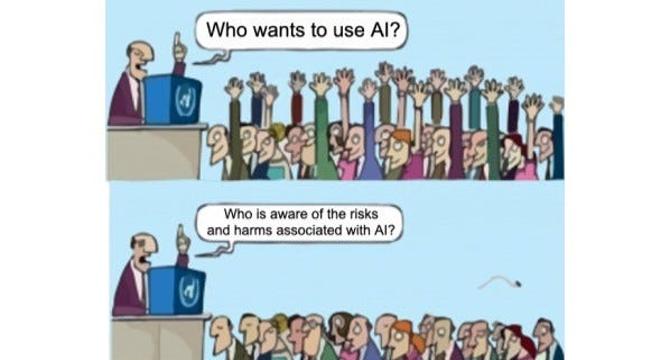

- The class also took a closer look at AI's darker side: its capacity to amplify societal risks such as harmful content creation, misuse of voice likeness, and bias in dialect recognition.

- Alan Turing proposed a test of machine intelligence, now famously known as the Turing Test, which asks whether a machine can behave in ways indistinguishable from a human in specific contexts.

- AI's definition is slippery. Joy Buolamwini frames AI as a "quest", a framing that acknowledges it is a work in progress and avoids the anthropomorphic language that often misleads the public.

- Melanie Mitchell points out that deep learning systems remain brittle, making unpredictable errors in situations beyond their training data.

- Intelligence isn't just goal-directed behaviour or test performance; it's deeply tied to context, adaptability, and common sense.

- The lecture pointed to a promising direction from psychology at AI's frontier: developing human-like AI grounded in embodied cognition, an approach that could offer insights into both AI and human development.

- To navigate the future of AI responsibly, we need to think critically about what AI can achieve and where it will continue to fall short.
