Appleinsider

Image Credit: Appleinsider

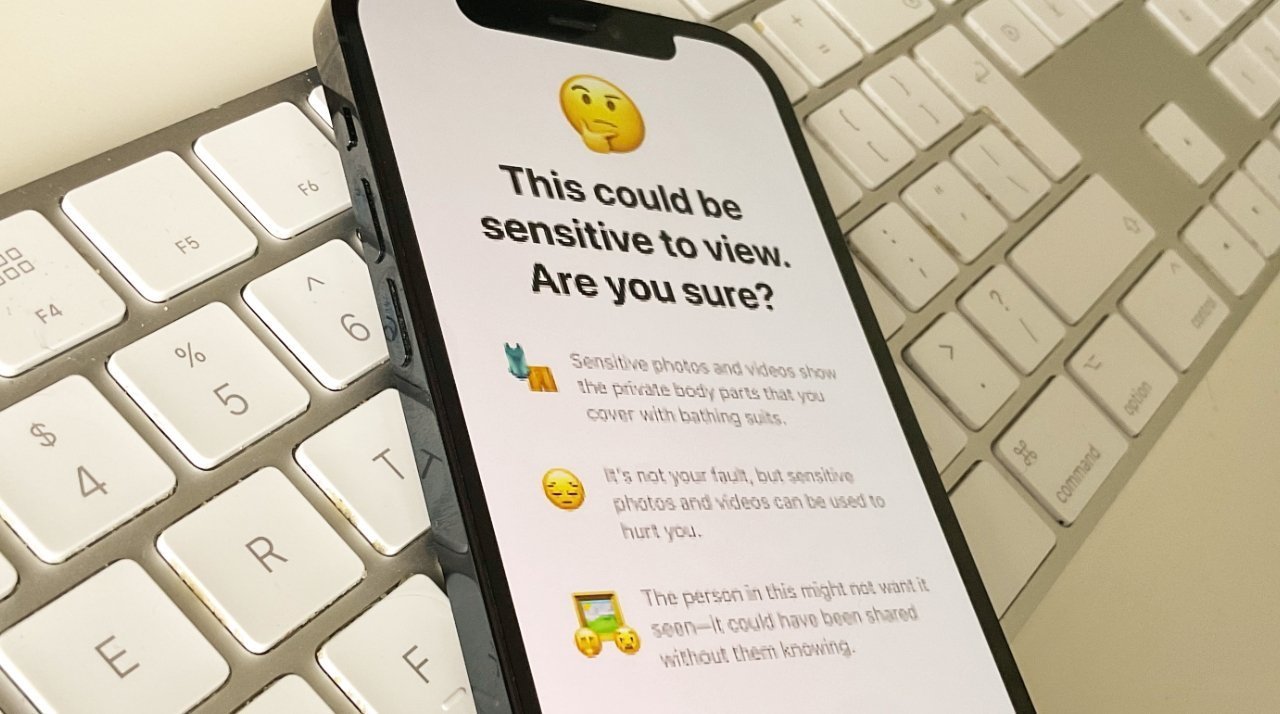

Apple relying on Communication Safety to stem tide of CSAM blackmail

- Apple is using its Communication Safety features to protect potential victims, following reports of teen boys being victimized by blackmailers and dying by suicide.

- Parents can activate Apple's Communication Safety features for a child account to enhance protection.

- Cases of teens being blackmailed by scammers, whether through trickery or AI-generated fake images, are increasing and have led to tragic outcomes.

- The Wall Street Journal's report profiles young victims who took their own lives after blackmailers threatened to make explicit images of them public. Such images are known as Child Sexual Abuse Material (CSAM).
