Semiengineering

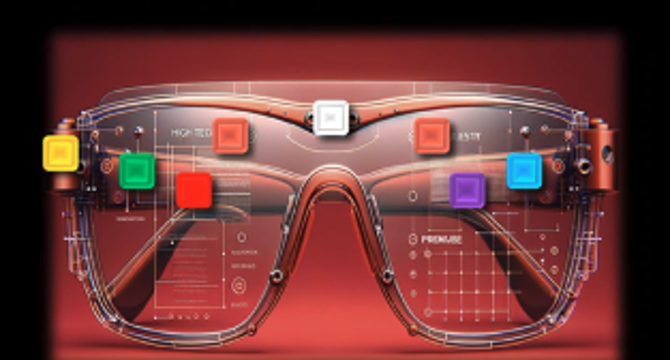

Image Credit: Semiengineering

AR/VR Glasses Taking Shape

- AR/VR glasses are evolving along two paths: devices like the Ray-Ban Meta AI glasses rely on a tethered smartphone, while Apple Vision Pro offers standalone AR and VR functions but is heavy and cumbersome.

- AR glasses now resemble regular spectacles, fitted with cameras and microphones that accept verbal prompts, which simplifies the design and improves user comfort.

- VR goggles are bulkier and need more processing power to deliver immersive experiences, including high-resolution screens and accurate tracking to reduce motion sickness.

- To add functionality to small glasses, tethering to a phone for compute power remains a simple solution, with mobile phone chipsets driving advancements.

- Edge AI plays a key role in AR/VR glasses, enabling efficient processing and diverse workloads for immersive experiences without compromising battery life.

- Connectivity challenges for tethered devices include determining the best communication standard between the glasses, smartphones, and network towers.

- New wireless standards are being developed to improve bandwidth and latency, with technologies like UWB and combo SoCs supporting AR/VR and gaming applications.

- In the short term, cabled connections may offer more reliable performance, but future AR/VR glasses aim to use wireless technologies for seamless interactions.

- Edge AI technology contributes to low latency and high determinism for AR/VR glasses, improving human-machine interaction and creating new use cases.

- Pairing 6G with edge AI and compute brings high-performance computing directly to the base station, reducing processing delays and helping ensure a seamless VR experience.

- As AR/VR devices shrink from goggles to glasses, AI-driven wearables will offer richer interactions and seamless user experiences.
