Pymnts


Image Credit: Pymnts

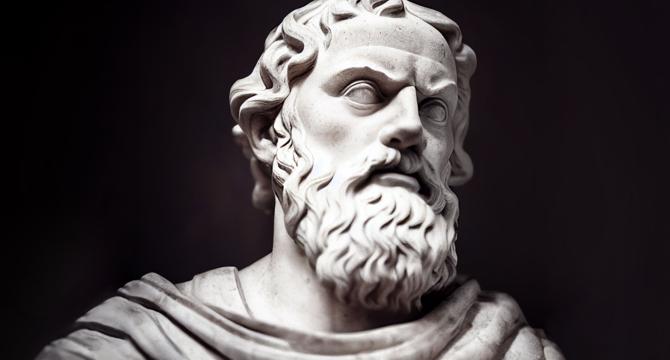

AWS Turns to Ancient Logic to Tackle Modern AI’s Hallucinations

- AWS is using automated reasoning to tackle AI hallucinations, making AI outputs more reliable for industries like finance and health care.

- The revised technique now takes minutes to deploy, helping prevent factual errors caused by generative AI hallucinations.

- Incorrect AI outputs can have severe consequences, as when Air Canada's chatbot gave out wrong information.

- Automated reasoning, rooted in symbolic logic dating back to Plato and Socrates, provides verifiable truth through mathematical logic.

- While machine learning models make probabilistic predictions, automated reasoning offers answers that can be verified with greater certainty.

- Automated reasoning involves converting natural language facts into mathematical models against which AI responses are checked for accuracy.

- Although automated reasoning has limitations, it can complement other approaches to reduce hallucinations in reasoning AI models.

- AWS has made automated reasoning more accessible through Amazon Bedrock Guardrails, allowing companies to deploy this technique in their AI models.

- Automated reasoning is particularly valuable for regulated industries like pharmaceuticals, ensuring compliance with regulations.

- AWS plans to expand automated reasoning into its AI coding assistant to enhance software security and reliability.
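
The idea of converting natural language facts into a mathematical model and checking AI responses against it can be illustrated with a toy example. The sketch below is not AWS's implementation and does not use Amazon Bedrock Guardrails; the refund rule, the variable names, and the `check_claim` helper are all hypothetical. It encodes one policy rule as a Boolean formula and tests a chatbot's claim by enumerating every assignment consistent with the rule and the known facts.

```python
from itertools import product

# Hypothetical Boolean variables extracted from a made-up refund policy.
VARIABLES = ["bereavement_travel", "requested_before_travel", "refund_eligible"]


def policy(world):
    """Hypothetical rule: a refund is available if and only if the trip is
    bereavement travel AND the request was made before travel."""
    return world["refund_eligible"] == (
        world["bereavement_travel"] and world["requested_before_travel"]
    )


def check_claim(facts, claim):
    """Classify a claim against the policy, given known facts.

    Returns "valid" if the claim holds in every world allowed by the policy
    and facts, "invalid" if it holds in none, "undetermined" if it holds in
    some, and "inconsistent" if the facts themselves violate the policy.
    """
    # Enumerate every truth assignment and keep those the policy permits.
    worlds = []
    for values in product([False, True], repeat=len(VARIABLES)):
        world = dict(zip(VARIABLES, values))
        if policy(world) and all(world[k] == v for k, v in facts.items()):
            worlds.append(world)

    if not worlds:
        return "inconsistent"

    holds = [all(w[k] == v for k, v in claim.items()) for w in worlds]
    if all(holds):
        return "valid"
    if not any(holds):
        return "invalid"
    return "undetermined"


if __name__ == "__main__":
    # A chatbot tells a customer who asked after travelling that a refund applies.
    facts = {"bereavement_travel": True, "requested_before_travel": False}
    print(check_claim(facts, {"refund_eligible": True}))
```

Brute-force enumeration only works for a handful of variables; production systems rely on dedicated solvers, but the verdict categories show how a logical check can flag a response as contradicting policy rather than merely unlikely.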
