Dev

1M

317

Image Credit: Dev

Build an AI code review assistant with v0.dev, litellm and Agenta

- In this tutorial, we'll build an AI assistant that can analyze PR diffs and provide meaningful code reviews.

- We will be using LiteLLM to handle our interactions with language models and Agenta for instrumenting the code, which helps debug and monitor apps.

- Prompt Engineering is focused on refining prompts and comparing different models using Agenta's playground.

- To evaluate the quality of our AI assistant's reviews and compare prompts and models, we need to set up evaluation using LLM-as-a-judge.

- Deployment is straightforward with Agenta. You can either use Agenta's endpoint or deploy your own app and use the Agenta SDK to fetch the production configuration.

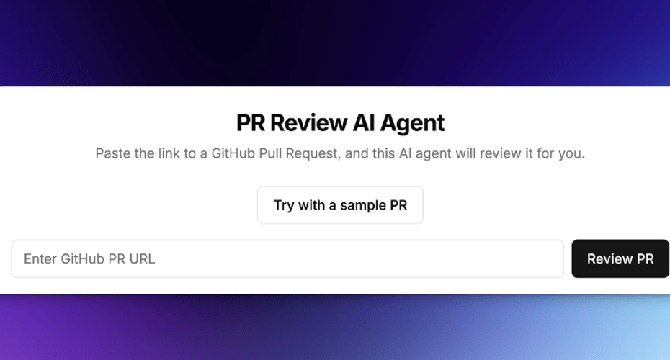

- For the frontend, v0.dev was used to quickly generate a UI.

- Tools such as refinining the Prompt, handling large Diffs, and adding more context can be used to further enhance the AI assistant.

Read Full Article

19 Likes

For uninterrupted reading, download the app