PyImageSearch

Image Credit: PyImageSearch

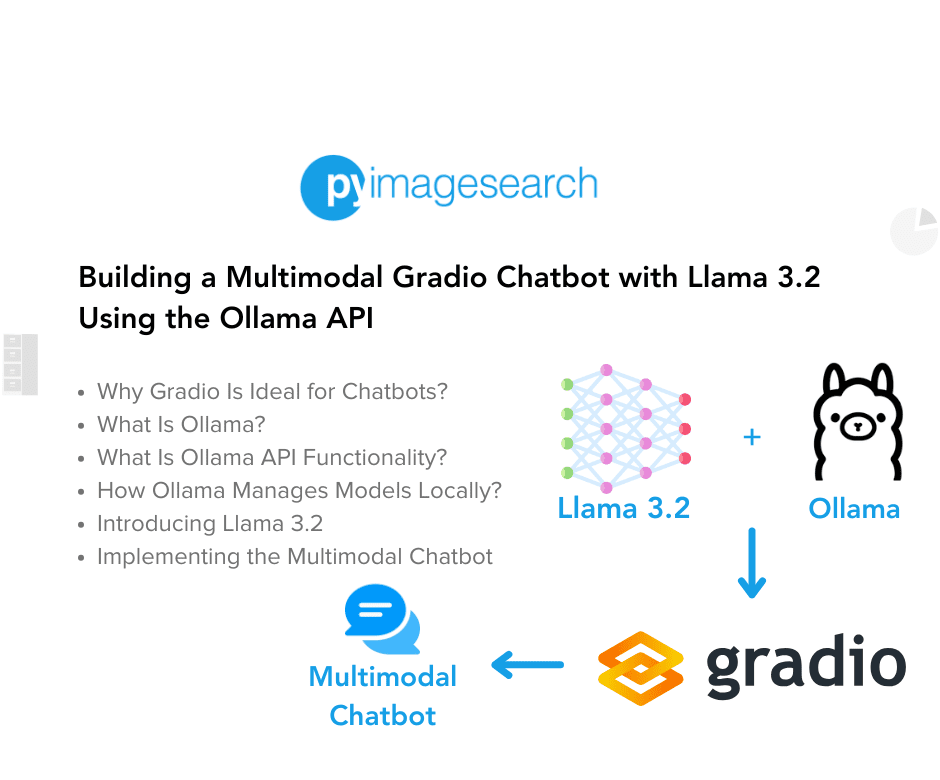

Building a Multimodal Gradio Chatbot with Llama 3.2 Using the Ollama API

- In this tutorial, we learn how to build an interactive, multimodal Gradio chatbot powered by Llama 3.2 Vision and served locally through Ollama.

- Gradio is an open-source Python library for building interactive web interfaces around machine learning models with only a few lines of code.

- Ollama is an open-source framework for running large language models (LLMs), such as Llama 3.2 Vision, locally on your own machine and serving them through a local API.

- Llama 3.2 adds multimodal capabilities through its Vision models (11B and 90B parameters), which can process both text and images in the same prompt.

- To follow this guide, you need the following Python packages installed: ollama==0.3.3, pillow==11.0.0 (PIL), and gradio==5.5.0.

- The code is split across three files, utils.py, chatbot.py, and app.py, each with a single responsibility, which keeps the implementation easy to follow and extend.

- The app.py script is the entry point of the application. It connects the Chatbot class from chatbot.py to a Gradio Blocks interface, which provides the interactive front end.

- The result is a chatbot that generates detailed, concise, or creative responses, depending on the style the user selects, while keeping track of the conversation history.

- The project shows how Gradio, Llama 3.2 Vision, and the Ollama API fit together, giving developers a practical starting point for building multimodal AI applications.

- In conclusion, this tutorial explains how to build a multimodal chatbot, with practical examples and a detailed walkthrough of the code.
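
The package pins listed in the prerequisites can be checked before running the app. The following is a minimal sketch that uses only the standard library's `importlib.metadata`; the `PINNED` mapping mirrors the versions named in the summary, and `check_versions` is a hypothetical helper, not part of the tutorial's code.

```python
from importlib.metadata import PackageNotFoundError, version

# Versions named in the tutorial summary; check_versions is a hypothetical helper.
PINNED = {"ollama": "0.3.3", "pillow": "11.0.0", "gradio": "5.5.0"}


def check_versions(pinned=PINNED):
    """Return {package: (expected, installed)} for every package that does not match its pin.

    `installed` is None when the package is not installed at all.
    """
    mismatches = {}
    for package, expected in pinned.items():
        try:
            installed = version(package)
        except PackageNotFoundError:
            installed = None
        if installed != expected:
            mismatches[package] = (expected, installed)
    return mismatches


if __name__ == "__main__":
    # Print one line per package whose installed version differs from its pin.
    for package, (expected, installed) in check_versions().items():
        print(f"{package}: expected {expected}, found {installed or 'not installed'}")
```

An empty result means every pinned package is installed at exactly the listed version.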
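
The summary describes a Chatbot class that keeps the conversation history and adapts its answers to a detailed, concise, or creative style. The sketch below shows one way such a class could be built on the `ollama` Python package's `ollama.chat` function. The style prompt wording, the `build_messages` method, and the `llama3.2-vision` model tag default are assumptions for illustration, not the tutorial's actual chatbot.py.

```python
from dataclasses import dataclass, field

# Hypothetical style prompts; the article's exact wording is not reproduced here.
STYLE_PROMPTS = {
    "detailed": "Give a thorough, step-by-step answer.",
    "concise": "Answer briefly, in at most a few sentences.",
    "creative": "Answer imaginatively while staying accurate.",
}


@dataclass
class Chatbot:
    model: str = "llama3.2-vision"  # assumed Ollama model tag
    history: list = field(default_factory=list)

    def build_messages(self, text, image_paths=None, style="concise"):
        """Return the messages to send: a style system prompt, prior turns, then the new user turn."""
        if style not in STYLE_PROMPTS:
            raise ValueError(f"unknown style: {style!r}")
        user_msg = {"role": "user", "content": text}
        if image_paths:
            # The ollama client accepts image file paths (or raw bytes) in the "images" list.
            user_msg["images"] = list(image_paths)
        return [{"role": "system", "content": STYLE_PROMPTS[style]}, *self.history, user_msg]

    def chat(self, text, image_paths=None, style="concise"):
        """Send one turn to a local Ollama server and record it in the history."""
        import ollama  # imported lazily; needs a running Ollama server with the model pulled

        messages = self.build_messages(text, image_paths, style)
        response = ollama.chat(model=self.model, messages=messages)
        reply = response["message"]["content"]
        # Keep the user turn and the reply so follow-up questions have context.
        self.history.append(messages[-1])
        self.history.append({"role": "assistant", "content": reply})
        return reply
```

For example, `Chatbot().chat("What is in this image?", ["photo.jpg"], style="detailed")` (with a hypothetical `photo.jpg`) would send the question and image to the local server and append both sides of the exchange to `history`. The system prompt is rebuilt on every call, so the user can switch styles mid-conversation without it piling up in the history.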
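
On the interface side, app.py wires the chatbot into Gradio Blocks. The following is a minimal sketch, assuming Gradio 5's `gr.MultimodalTextbox` (whose value is a dict with `"text"` and `"files"` keys) and a `gr.Chatbot` in `type="messages"` mode. The names `split_multimodal_input`, `build_demo`, and the `respond_fn(text, image_paths, style)` callback are hypothetical, not taken from the tutorial. Gradio is imported inside `build_demo` so the input-parsing helper works without it.

```python
def split_multimodal_input(message):
    """Split a MultimodalTextbox value ({"text": ..., "files": [...]}) into text and image paths."""
    message = message or {}
    text = message.get("text") or ""
    files = message.get("files") or []
    # Depending on the Gradio version, files may be path strings or dicts with a "path" key.
    paths = [f["path"] if isinstance(f, dict) else f for f in files]
    return text.strip(), paths


def build_demo(respond_fn):
    """Build a Blocks app; respond_fn(text, image_paths, style) must return the reply string."""
    import gradio as gr  # imported lazily so split_multimodal_input is usable without Gradio

    with gr.Blocks(title="Llama 3.2 Vision Chatbot") as demo:
        chatbot = gr.Chatbot(type="messages", label="Conversation")
        style = gr.Radio(["detailed", "concise", "creative"], value="concise", label="Response style")
        textbox = gr.MultimodalTextbox(file_types=["image"], placeholder="Ask about an image...")

        def on_submit(message, history, style_choice):
            text, images = split_multimodal_input(message)
            history = list(history or [])
            history.append({"role": "user", "content": text})
            history.append({"role": "assistant", "content": respond_fn(text, images, style_choice)})
            # Return the updated chat and an empty value to clear the input box.
            return history, {"text": "", "files": []}

        textbox.submit(on_submit, inputs=[textbox, chatbot, style], outputs=[chatbot, textbox])
    return demo
```

To try it, pass any callable with that signature, such as a wrapper around a chatbot's chat method or a placeholder like `lambda text, images, style: f"[{style}] {text}"`, and call `build_demo(respond_fn).launch()`. In this sketch only the text of each turn is shown in the chat window; uploaded images are forwarded to `respond_fn` but not echoed back.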
