Medium

7d

319

Image Credit: Medium

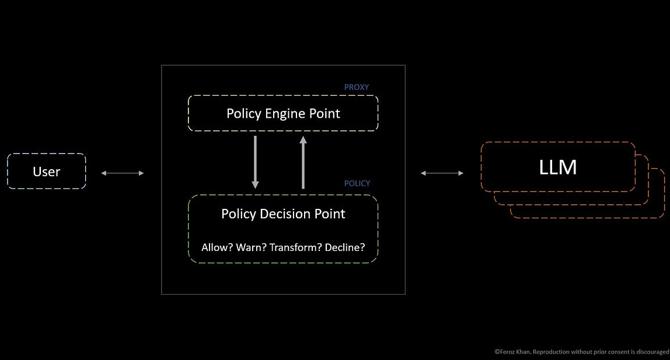

Building Safer LLMs: How Proxy-Based Policy Engines Stop Prompt Injection

- Proxy-based policy engines provide a robust way to protect Language Model Models (LLMs) from threats by inserting a proxy between users and the model.

- Defenses live in the proxy, removing the need to fine-tune every new LLM model version for security risks, and enabling centralized management of multiple models.

- LlamaGuard and PromptGuard are LLM-based classifiers for filtering input/output to detect attacks, while open-source proxy tools like Usage Panda can monitor and control LLM traffic.

- Relying on a proxy-based layer with a policy engine enhances LLM security, ensuring they behave reliably and safely without changing base models.

Read Full Article

19 Likes

For uninterrupted reading, download the app