Tech Radar

14h

Image Credit: Tech Radar

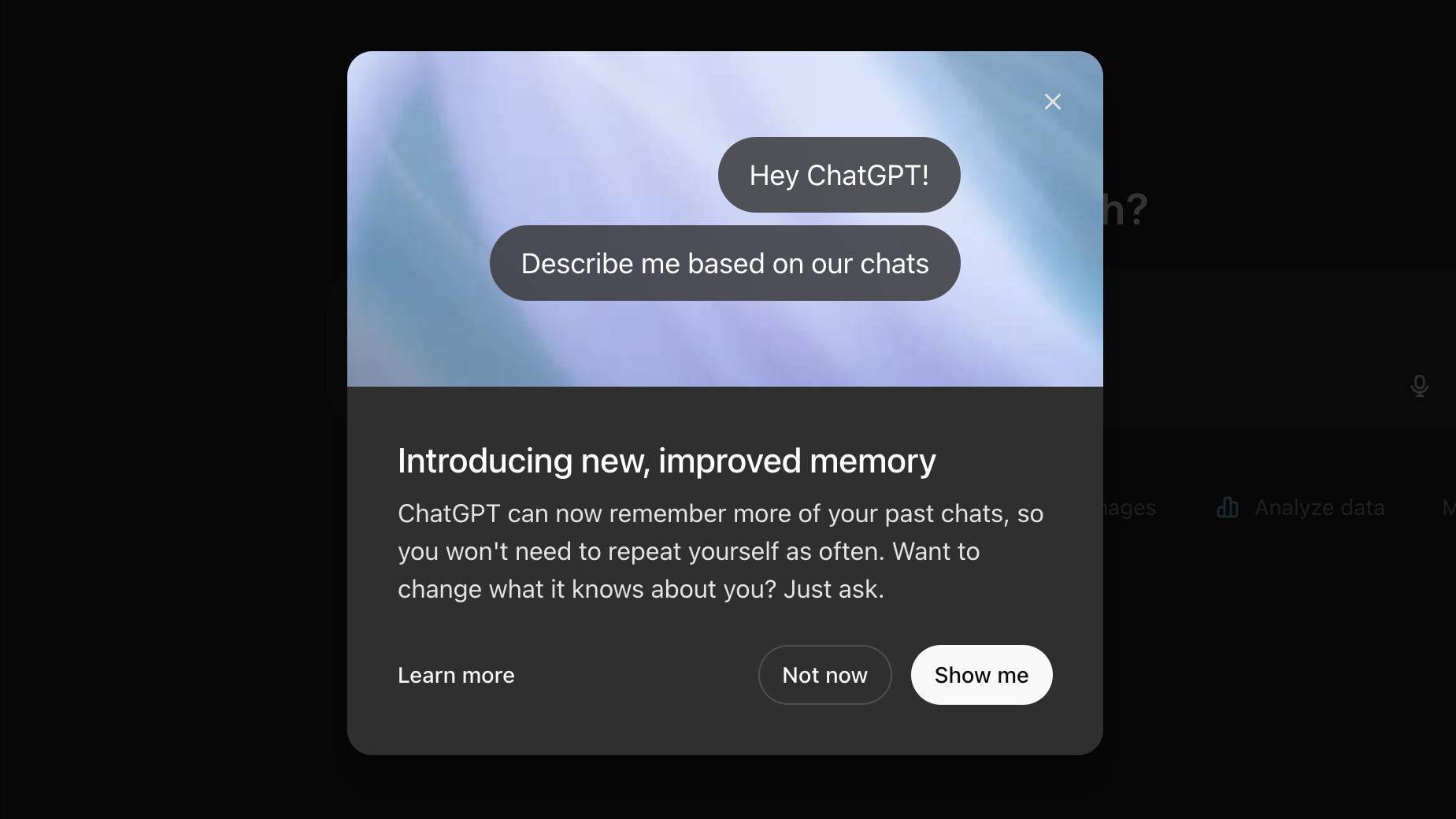

ChatGPT can remember more about you than ever before – should you be worried?

- ChatGPT has received a major upgrade that lets it automatically remember information from all past conversations, in addition to the memories users have been able to save manually since 2024.

- This feature, known as long-term memory, aims to enhance personalization and user experience but raises concerns about privacy and emotional dependence.

- The memory feature is available to ChatGPT Plus and Pro users, except in certain regions where regulatory constraints apply.

- The upgrade lets ChatGPT tailor its responses to a user's history, making interactions with the AI more personal.

- However, there are worries that the AI cannot judge context the way humans do, so it may bring up irrelevant or uncomfortable past conversations.

- In the workplace, persistent memory could improve project continuity and provide a more tailored assistant experience, but privacy and data security concerns remain prominent.

- Users can manage memories by deleting irrelevant ones, turning off the memory features, or using the 'Temporary Chat' option for conversations that are not influenced by past interactions.

- Different AI tools handle memory differently based on their goals, with some focusing on real-time information retrieval while others emphasize emotional companionship through long-term memory storage.

- User control, transparency, and compliance with data protection laws are critical issues that AI memory features must address to earn user trust and protect privacy.

- The debate over AI memory centers on whether its convenience and efficiency outweigh the risks of dependency and privacy breaches, and it highlights that these features reflect deliberate design choices by AI companies.

- AI tools aim to become indispensable and to build reliance, so users need to weigh the convenience of advanced memory capabilities against the privacy concerns that come with them.
