DataRobot

Image Credit: DataRobot

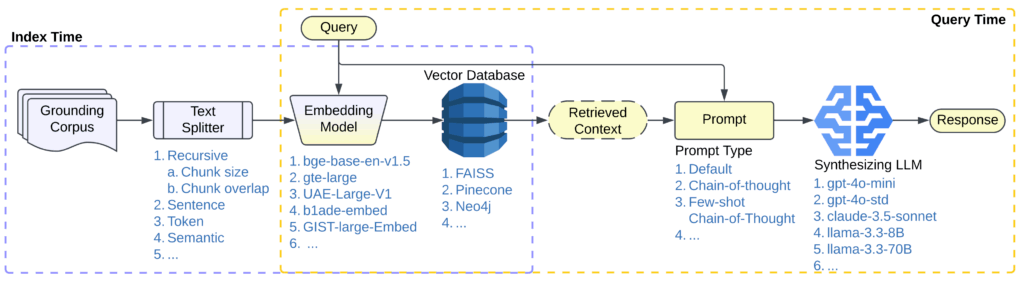

Designing Pareto-optimal GenAI workflows with syftr

- Building effective generative AI workflows, especially agentic ones, means navigating a vast space of possible configurations; syftr aims to make that search tractable through Pareto optimization.

- Syftr, an open-source framework, automatically identifies Pareto-optimal workflows that trade off accuracy, cost, and latency in generative AI pipelines.

- Using multi-objective Bayesian optimization, syftr efficiently explores workflow spaces too large for manual testing to cover and surfaces Pareto-optimal configurations.

- Each syftr run evaluates around 500 workflows; a Pareto Pruner halts evaluations that are unlikely to improve the Pareto frontier, reducing compute costs.

- Traditional model benchmarks are insufficient for understanding complex AI systems; syftr instead evaluates entire workflows, capturing nuanced trade-offs that only emerge in larger pipelines.

- Syftr can be combined with prompt-optimization tools such as Trace in a two-stage approach to workflow refinement, which improves accuracy, particularly in cost-sensitive scenarios.

- The framework's modular design lets users easily extend and customize workflow configurations, and it supports various optimization strategies.

- Syftr builds on open-source libraries such as Ray, Optuna, and Hugging Face Datasets, which makes it adaptable to different tooling preferences and modeling stacks.

- In a case study on CRAG Sports, syftr outperformed default pipelines with significant improvements in both accuracy and cost efficiency, illustrating the value of Pareto optimization.

- Ongoing research on syftr includes meta-learning, evaluation of multi-agent workflows, and composability with prompt-optimization frameworks, all aimed at extending its capabilities in generative AI design.
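The core bookkeeping behind these points, keeping only workflows that no other workflow beats on every objective and skipping candidates that cannot beat them, can be sketched in plain Python. This is a minimal illustration under stated assumptions, not syftr's code: `WorkflowResult`, `dominates`, `pareto_frontier` and `could_improve_frontier` are hypothetical names, the objectives are assumed to be accuracy (maximize) plus cost and latency (minimize), and the pruning check is a simplified stand-in for the idea behind syftr's Pareto Pruner rather than its actual rule. The Bayesian optimizer that proposes new configurations is omitted.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class WorkflowResult:
    """Hypothetical record of one evaluated workflow (not a syftr type)."""
    name: str
    accuracy: float  # higher is better
    cost: float      # e.g. dollars per query; lower is better
    latency: float   # e.g. seconds per query; lower is better


def dominates(a: WorkflowResult, b: WorkflowResult) -> bool:
    """True if `a` is no worse than `b` on every objective and strictly better on at least one."""
    no_worse = a.accuracy >= b.accuracy and a.cost <= b.cost and a.latency <= b.latency
    strictly_better = a.accuracy > b.accuracy or a.cost < b.cost or a.latency < b.latency
    return no_worse and strictly_better


def pareto_frontier(results: Sequence[WorkflowResult]) -> List[WorkflowResult]:
    """Keep every result that no other result dominates.

    This is an O(n^2) scan, which is cheap at the scale of a few hundred workflows.
    """
    return [r for r in results if not any(dominates(other, r) for other in results)]


def could_improve_frontier(optimistic: WorkflowResult,
                           frontier: Sequence[WorkflowResult]) -> bool:
    """Simplified pruning test (hypothetical): if even an optimistic estimate for a
    partially evaluated workflow is dominated by the frontier, stop evaluating it."""
    return not any(dominates(f, optimistic) for f in frontier)


# Made-up numbers for illustration only; these are not syftr results.
results = [
    WorkflowResult("rag-small", accuracy=0.62, cost=0.002, latency=1.1),
    WorkflowResult("rag-large", accuracy=0.71, cost=0.010, latency=2.5),
    WorkflowResult("agent-large", accuracy=0.70, cost=0.030, latency=6.0),
    WorkflowResult("rag-medium", accuracy=0.60, cost=0.004, latency=1.5),
]

frontier = pareto_frontier(results)
print([r.name for r in frontier])  # ['rag-small', 'rag-large']

# A candidate whose best case is already beaten by rag-large gets pruned.
print(could_improve_frontier(WorkflowResult("candidate", 0.65, 0.012, 3.0), frontier))  # False
# A cheaper, faster candidate could still extend the frontier, so keep evaluating it.
print(could_improve_frontier(WorkflowResult("cheap", 0.55, 0.001, 0.8), frontier))  # True
```

How the optimistic estimate is formed (for example, an upper confidence bound on accuracy after evaluating only part of a dataset) is an assumption left to the caller; the sketch only shows why a dominated best case is a safe reason to stop spending compute on a workflow.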
