Feedspot

Image Credit: Feedspot

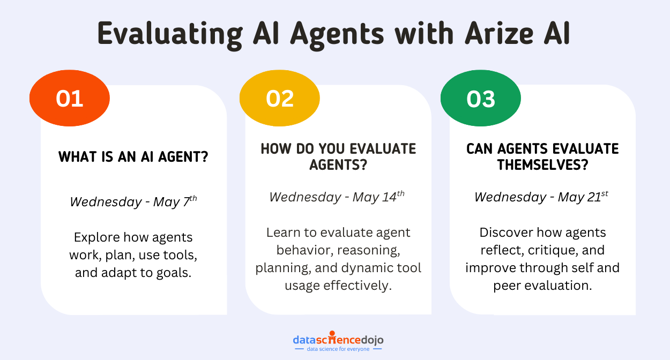

Evaluating AI Agents with Arize AI – A Complete Series to Get You Started!

- AI agents, once the stuff of science fiction, are now in practical use and can reason and act with considerable autonomy.

- Evaluating these AI agents has become increasingly difficult due to the complexity of their behavior and reasoning paths.

- Arize AI, with tools like Arize Phoenix, helps AI teams gain visibility into agent functionality, aiding in tracing, debugging, and refining agent behavior.

- The introduction distinguishes AI agents from standard models: agents take an active role in making decisions, planning, and pursuing goals.

- AI agents require more than a predictive model; to function effectively they need memory, planning capabilities, tool access, and in some cases the ability to work with other agents.

- Different agent architectures, such as sequential, hierarchical, and swarm-based, offer varied strengths suited to different tasks and complexities.

- Agents are hard to evaluate because they plan over multiple steps, call external tools, and adapt their behavior, so a comprehensive analysis needs several evaluation techniques.

- Core evaluation techniques for AI agents include code-based evaluations, language model assessments, human evaluation, and ground truth comparisons.

- Advanced evaluation techniques focus on understanding an agent's reasoning path, including path-based analysis, convergence measurement, and planning quality assessment.

- The agent-as-judge paradigm introduces self-evaluation and peer review among agents, fostering learning, improvement, and teamwork within automated systems.
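
Two of the techniques listed above, a code-based evaluation against ground truth and convergence measurement, can be sketched in a few lines of Python. This is a minimal illustration, not Arize Phoenix's API: the function names, the tool-call dictionary layout, and the sample data are all hypothetical. The convergence formula here, the shortest observed path length divided by each run's path length and averaged over runs, is one common definition and is assumed rather than taken from the article.

```python
from typing import Sequence


def tool_call_matches(actual: dict, expected: dict) -> bool:
    """Code-based check: the agent chose the same tool with the same
    arguments as the ground-truth (reference) call."""
    return (
        actual.get("tool") == expected.get("tool")
        and actual.get("args") == expected.get("args")
    )


def convergence_score(path_lengths: Sequence[int]) -> float:
    """Average ratio of the shortest observed path to each run's path length.

    1.0 means every run took the shortest observed path; lower values mean
    some runs took extra steps for the same task.
    """
    if not path_lengths:
        raise ValueError("need at least one run")
    shortest = min(path_lengths)
    if shortest <= 0:
        raise ValueError("path lengths must be positive")
    return sum(shortest / n for n in path_lengths) / len(path_lengths)


# Hypothetical ground-truth tool call and the call the agent actually made.
expected_call = {"tool": "search_flights", "args": {"origin": "SFO", "destination": "JFK"}}
actual_call = {"tool": "search_flights", "args": {"origin": "SFO", "destination": "JFK"}}
print("tool call correct:", tool_call_matches(actual_call, expected_call))

# Hypothetical step counts from three runs of the same task.
run_lengths = [4, 4, 8]
print("convergence:", round(convergence_score(run_lengths), 3))
```

Deterministic checks like these are cheap to run on every trace, which is why they are often paired with language-model or human review for the parts of agent behavior that cannot be compared exactly.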
