Feedspot

Image Credit: Feedspot

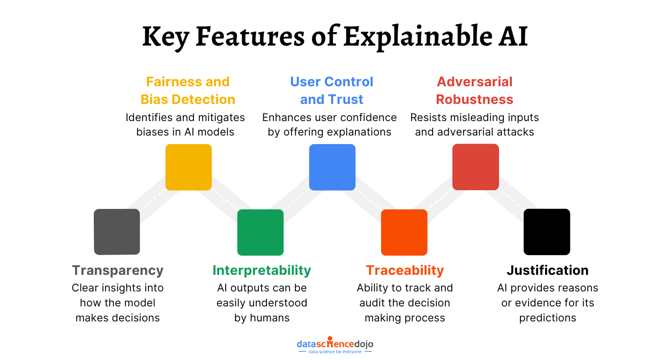

Explainable AI (XAI): The Next Step in Building Trustworthy Artificial Intelligence

- Explainable AI (XAI) addresses the lack of transparency in AI decision-making, making it more accountable and interpretable.

- In healthcare, XAI makes diagnostic AI easier to verify by showing which factors drove a decision, for example by highlighting the tumor regions in a scan that influenced a prediction.

- In finance, XAI provides transparency in credit scoring by identifying key financial elements that impact loan approvals, empowering applicants.

- In autonomous vehicles, XAI helps explain why a vehicle made a given driving decision, which improves understanding for manufacturers, regulators, and users.

- XAI helps mitigate bias by exposing the factors that drive a model's decisions, which makes biased features easier to detect and correct.

- Regulations such as the GDPR impose transparency requirements on automated decision-making, which makes XAI crucial in high-stakes industries such as healthcare and finance.

- XAI uses techniques such as LIME, SHAP, and DeConvNet to explain individual predictions: LIME and SHAP are model-agnostic and work across many kinds of models, while DeConvNet visualizes what convolutional networks respond to in images.

- Ante-hoc explainable models, such as rule-based models and decision trees, are transparent by design, so their decision logic can be read directly rather than reconstructed after the fact.

- More advanced XAI methods build on ideas such as neural attention mechanisms, which can improve both performance and transparency in text and image classification.

- Emerging tools such as Langfuse tracing offer real-time insight into complex AI systems, enabling transparent audits and building trust in AI applications.

- Open challenges in XAI include the lack of universal metrics for evaluating explanations, the narrow specialization of many XAI tools, the trade-off between interpretability and accuracy, and addressing bias effectively.
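SHAP, listed above among common XAI techniques, is grounded in Shapley values from game theory. The sketch below computes exact Shapley values for a hypothetical toy credit-scoring model by enumerating every coalition of features, replacing features outside a coalition with a baseline value. The model, its weights, the applicant, and the baseline are all illustrative assumptions, not any real scorer and not the `shap` library's API.

```python
from itertools import combinations
from math import factorial

# Hypothetical toy credit-scoring model; weights are illustrative only.
def credit_score(income, debt_ratio, late_payments):
    return 0.5 * income - 40.0 * debt_ratio - 8.0 * late_payments

FEATURES = ["income", "debt_ratio", "late_payments"]

def shapley_values(model, x, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(FEATURES)
    phi = {}
    for i, name in enumerate(FEATURES):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            for coalition in combinations(others, size):
                # Shapley weight |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                with_i = [x[k] if (k in coalition or k == i) else baseline[k] for k in range(n)]
                without_i = [x[k] if k in coalition else baseline[k] for k in range(n)]
                # Marginal contribution of feature i to this coalition
                total += weight * (model(*with_i) - model(*without_i))
        phi[name] = total
    return phi

applicant = [60.0, 0.5, 2]   # hypothetical applicant
baseline = [50.0, 0.3, 0]    # hypothetical "average applicant" reference
phi = shapley_values(credit_score, applicant, baseline)
print({k: round(v, 3) for k, v in phi.items()})
```

The attributions sum to the gap between the applicant's score and the baseline score, which is what lets them be read as "how much each factor moved this decision". Exact enumeration is exponential in the number of features, which is why practical SHAP tools rely on approximations or model-specific shortcuts.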
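The ante-hoc, rule-based models mentioned in the list are transparent because the rule that fires is itself the explanation. The sketch below is a minimal ordered rule list for a hypothetical loan decision; the rules, thresholds, and field names are illustrative assumptions only.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Rule:
    description: str                 # human-readable form of the rule
    condition: Callable[[dict], bool]
    decision: str

# Hypothetical loan rules, checked in priority order; thresholds are illustrative.
RULES = [
    Rule("late_payments > 3", lambda a: a["late_payments"] > 3, "reject"),
    Rule("debt_ratio > 0.6", lambda a: a["debt_ratio"] > 0.6, "reject"),
    Rule("income >= 40 and debt_ratio <= 0.4",
         lambda a: a["income"] >= 40 and a["debt_ratio"] <= 0.4, "approve"),
]
DEFAULT = "manual review"

def decide(applicant: dict) -> tuple[str, str]:
    """Return the decision together with the rule that produced it."""
    for rule in RULES:
        if rule.condition(applicant):
            return rule.decision, rule.description
    return DEFAULT, "no rule matched"

print(decide({"income": 55, "debt_ratio": 0.3, "late_payments": 0}))
print(decide({"income": 55, "debt_ratio": 0.7, "late_payments": 1}))
```

Because the first matching rule decides, the explanation is exact rather than approximated after the fact; the cost is that such models may be less accurate than opaque ones, which is the interpretability trade-off noted among the open challenges.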
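The list notes that attention mechanisms can add transparency to text classification. A minimal sketch of the idea, assuming scaled dot-product attention pooling over hypothetical 2-dimensional token vectors: the softmax weights show how much each token contributed to the pooled representation. The vectors and query are made up for illustration, and reading attention weights as explanations is itself debated in the research community.

```python
import math

def softmax(scores):
    m = max(scores)                          # subtract max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(query, keys, values):
    """Scaled dot-product attention pooling; returns pooled vector and weights."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    pooled = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return pooled, weights

tokens = ["the", "service", "was", "terrible"]
# Hypothetical token vectors, used as both keys and values for simplicity.
vectors = [[0.1, 0.0], [0.4, 0.2], [0.0, 0.1], [1.5, 1.2]]
query = [1.0, 1.0]                           # hypothetical learned query
pooled, weights = attention_pool(query, vectors, vectors)

for tok, w in sorted(zip(tokens, weights), key=lambda p: -p[1]):
    print(f"{tok:>10s} {w:.3f}")
```

Here "terrible" receives the largest weight, so a reader can see which word dominated the representation feeding the classifier.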
