Medium

2w

Image Credit: Medium

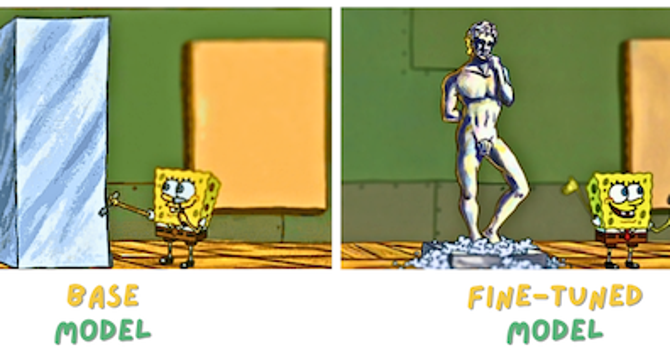

Fine-Tuning, Because Your Model Deserves a Second Chance

- Training Large Language Models (LLMs) involves stages like pre-training and fine-tuning.

- Pre-training gives the model generic knowledge drawn from varied sources such as web crawls and user records.

- Fine-tuning adjusts a pre-trained base model toward a specific domain by training it further on new data.

- Fine-tuning adds domain-specific capabilities without repeating the expensive pre-training stage.

- The quality of data used in fine-tuning significantly impacts the LLM's performance.

- Behavioral cloning is a common fine-tuning method in which the model learns to imitate provided input-output pairs.

- Fine-tuning requires balance: over-optimizing for task performance can limit the model's ability to abstract and generalize.

- Considerations for fine-tuning include model size, architecture, data quality, and compute budget.

- There is no universal formula for determining the exact amount of data needed for fine-tuning.

- Supervised Fine-Tuning (SFT) is a popular way to specialize LLMs, but Reinforcement Learning from Human Feedback (RLHF) is sometimes more effective.
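Behavioral cloning, as summarized above, is typically implemented as supervised next-token prediction on the demonstrated outputs. The sketch below is a minimal, framework-free illustration under stated assumptions: the nested `logits` lists stand in for a real model's per-position outputs, and the names `sft_loss` and `loss_mask` are hypothetical, not taken from the article. Prompt tokens are masked out so that only the output half of each input-output pair contributes to the loss, a common (but not universal) SFT choice.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float]) -> List[float]:
    # Numerically stable log-softmax over one vocabulary distribution.
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def sft_loss(
    logits: Sequence[Sequence[float]],
    targets: Sequence[int],
    loss_mask: Sequence[int],
) -> float:
    """Mean next-token cross-entropy over positions where loss_mask == 1.

    Hypothetical helper: logits[t] is the model's score vector for
    predicting targets[t]. Prompt positions get mask 0, so the model is
    only pushed to reproduce the demonstrated output (behavioral cloning).
    """
    if not (len(logits) == len(targets) == len(loss_mask)):
        raise ValueError("logits, targets and loss_mask must have equal length")
    total, count = 0.0, 0
    for step_logits, target, keep in zip(logits, targets, loss_mask):
        if keep:
            # Negative log-probability assigned to the demonstrated token.
            total -= log_softmax(step_logits)[target]
            count += 1
    if count == 0:
        raise ValueError("loss_mask selects no tokens")
    return total / count


# Usage: a 4-token sequence over a 3-token vocabulary; the first two
# positions are prompt tokens and are excluded from the loss.
uniform = [[0.0, 0.0, 0.0]] * 4
print(round(sft_loss(uniform, [0, 1, 2, 0], [0, 0, 1, 1]), 4))  # → 1.0986
```

With uniform scores the loss equals ln 3, the cost of guessing among three tokens; training lowers it by raising the probability of each demonstrated token. In practice a framework such as PyTorch would produce the logits and backpropagate this loss; the sketch only shows which quantity SFT minimizes.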
