Unite

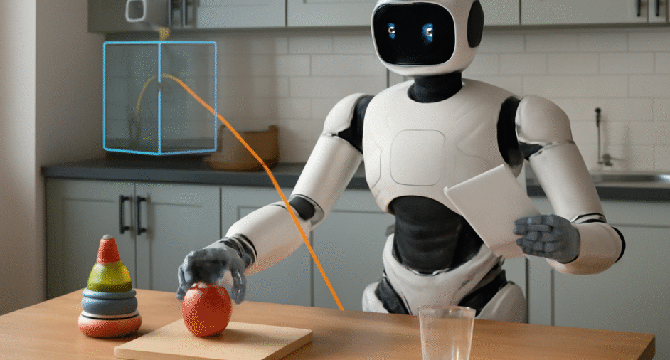

Image Credit: Unite

Gemini Robotics: AI Reasoning Meets the Physical World

- Gemini Robotics is Google's suite of models developed to integrate advanced AI reasoning with the physical world through robotics.

- Built on Gemini 2.0, Gemini Robotics is a Vision-Language-Action (VLA) model: it combines visual perception, language understanding, and action output so that robots can execute complex tasks.

- The models excel at generalizing tasks, adapting to varying environments, and handling unforeseen challenges without extensive retraining.

- Embodied reasoning in Gemini Robotics bridges the gap between digital reasoning and physical interaction, enhancing robots' understanding of the real world.

- Key components of embodied reasoning include object detection, grasp prediction, 3D understanding, and dexterity for fine motor skills.

- Gemini Robotics shows advanced dexterity on tasks such as folding clothes, and it adapts both to new tasks through few-shot learning and to novel robot embodiments.

- The models support zero-shot control by generating code that drives the robot, and they can rapidly adapt to new tasks in changing environments.

- Gemini Robotics has diverse applications in industries like manufacturing, caregiving, and entertainment, promising a future of versatile and capable robots.

- By combining AI reasoning with physical interaction, Gemini Robotics represents a significant advancement in the field of robotics.

- Features such as zero-shot control and few-shot learning pave the way for robots to play integral roles across these sectors.
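
To make "zero-shot control through code generation" concrete, here is a minimal sketch of the general pattern: a model writes a short program against a small set of perception and motion primitives, and that program is then run on the robot. Everything in it is an assumption made for illustration. `SimRobot`, its methods, the scene coordinates, and the hard-coded `GENERATED_PROGRAM` string are hypothetical and are not the Gemini Robotics API; the string simply stands in for what a model might produce from an instruction like "put the banana in the bowl".

```python
from dataclasses import dataclass, field


@dataclass
class SimRobot:
    """Hypothetical stand-in for a robot API; it only records calls."""
    scene: dict  # object name -> (x, y, z) position in metres
    log: list = field(default_factory=list)

    def detect_object(self, name):
        # Perception primitive: look up the object's 3D position.
        return self.scene[name]

    def predict_grasp(self, position):
        # Grasp primitive (assumed rule): approach from 5 cm above the object.
        x, y, z = position
        return (x, y, z + 0.05)

    def move_to(self, pose):
        self.log.append(("move_to", pose))

    def close_gripper(self):
        self.log.append(("close_gripper",))

    def open_gripper(self):
        self.log.append(("open_gripper",))


# Placeholder for model output. In a real system a model would write this
# program from a natural-language instruction; here it is hard-coded.
GENERATED_PROGRAM = """
banana = robot.detect_object("banana")
bowl = robot.detect_object("bowl")
robot.move_to(robot.predict_grasp(banana))
robot.close_gripper()
robot.move_to(robot.predict_grasp(bowl))
robot.open_gripper()
"""


def run_generated(program, robot):
    # Expose only the robot object to the generated code. Emptying
    # __builtins__ limits what the code can call, but it is not a real
    # sandbox and should not be relied on for untrusted input.
    exec(program, {"__builtins__": {}}, {"robot": robot})
    return robot.log


robot = SimRobot(scene={"banana": (0.25, 0.5, 0.0), "bowl": (0.5, 0.25, 0.0)})
actions = run_generated(GENERATED_PROGRAM, robot)
```

The point of the design is that the generated program never touches low-level motor commands: it composes the same few primitives (detection, grasp prediction, motion) that the summary lists as components of embodied reasoning, so a new task needs new code but no retraining.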
