Siliconangle


Image Credit: Siliconangle

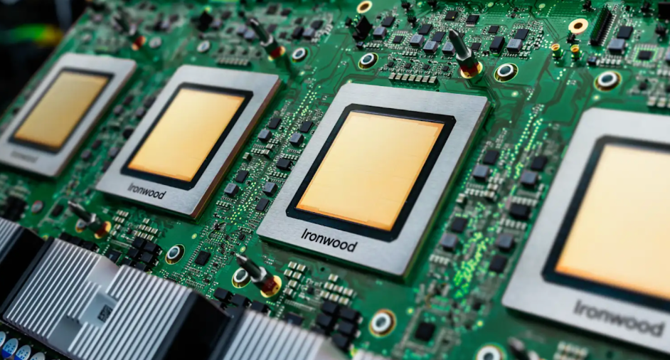

Google’s cloud-based AI Hypercomputer gets new workhorse with Ironwood TPU

- Google Cloud is upgrading its AI Hypercomputer infrastructure stack with the new Ironwood TPU to improve AI workload performance; the upgrade was announced at Google Cloud Next 2025.

- Ironwood is Google's seventh-generation TPU, designed for advanced AI models and built to handle massively parallel processing efficiently.

- It is the most powerful TPU accelerator Google has built to date, and it scales to a megacluster of 9,216 liquid-cooled chips linked by Inter-Chip Interconnect (ICI) technology.

- Ironwood was designed for the new generation of 'thinking models', including those that use mixture-of-experts techniques, and supports advanced reasoning while minimizing data movement and latency.

- Each chip carries 192 GB of high-bandwidth memory (HBM), which speeds up processing and data transfers.

- Google Cloud customers can choose between two initial Ironwood configurations: a 256-chip cluster or a 9,216-chip cluster, the latter delivering 42.5 exaflops at peak.

- Ironwood's enhancements include a low-latency ICI network, higher HBM bandwidth, and improved bidirectional bandwidth, giving memory-intensive workloads faster data access.

- Google's infrastructure stack also includes Nvidia's advanced AI accelerators, hardware improvements such as higher-performance block storage, and software updates for better utilization and performance.

- New software features, including the Pathways runtime, Cluster Director for workload optimization, and improved observability tools, have been added to boost AI infrastructure efficiency.

- Expanded inference capabilities in AI Hypercomputer, including the Inference Gateway in GKE and the GKE Inference Recommendations tool, aim to simplify infrastructure management and offer optimized performance.
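
To put the cluster figures above in perspective, the short Python sketch below works through the arithmetic. It uses only the numbers quoted in the summary (9,216 chips, 256 chips, 192 GB of HBM per chip, 42.5 exaflops at peak for the large cluster). The function names are illustrative, and the 256-chip estimate assumes peak compute scales linearly with chip count, which the article does not state.

```python
# Back-of-the-envelope arithmetic from the figures quoted in the summary.
# Function names are illustrative; the linear-scaling estimate for the
# 256-chip cluster is an assumption, not a published number.

CHIPS_LARGE = 9216          # chips in the largest configuration
CHIPS_SMALL = 256           # chips in the smaller configuration
HBM_PER_CHIP_GB = 192       # high-bandwidth memory per chip
PEAK_EXAFLOPS_LARGE = 42.5  # quoted peak for the 9,216-chip cluster


def per_chip_petaflops(total_exaflops: float, chips: int) -> float:
    """Implied peak compute per chip, in petaflops (1 exaflop = 1,000 petaflops)."""
    return total_exaflops * 1000 / chips


def total_hbm_tb(chips: int, hbm_per_chip_gb: int) -> float:
    """Aggregate HBM across a cluster, in decimal terabytes."""
    return chips * hbm_per_chip_gb / 1000


per_chip = per_chip_petaflops(PEAK_EXAFLOPS_LARGE, CHIPS_LARGE)
small_cluster_exaflops = per_chip * CHIPS_SMALL / 1000  # assumes linear scaling

print(f"Implied peak per chip: {per_chip:.2f} petaflops")
print(f"Estimated 256-chip peak: {small_cluster_exaflops:.2f} exaflops")
print(f"HBM, 9,216-chip cluster: {total_hbm_tb(CHIPS_LARGE, HBM_PER_CHIP_GB):,.1f} TB")
print(f"HBM, 256-chip cluster: {total_hbm_tb(CHIPS_SMALL, HBM_PER_CHIP_GB):,.1f} TB")
```

On these figures, each chip works out to roughly 4.6 petaflops at peak, and the largest cluster holds about 1.77 petabytes of HBM in aggregate.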
