BGR

Image Credit: BGR

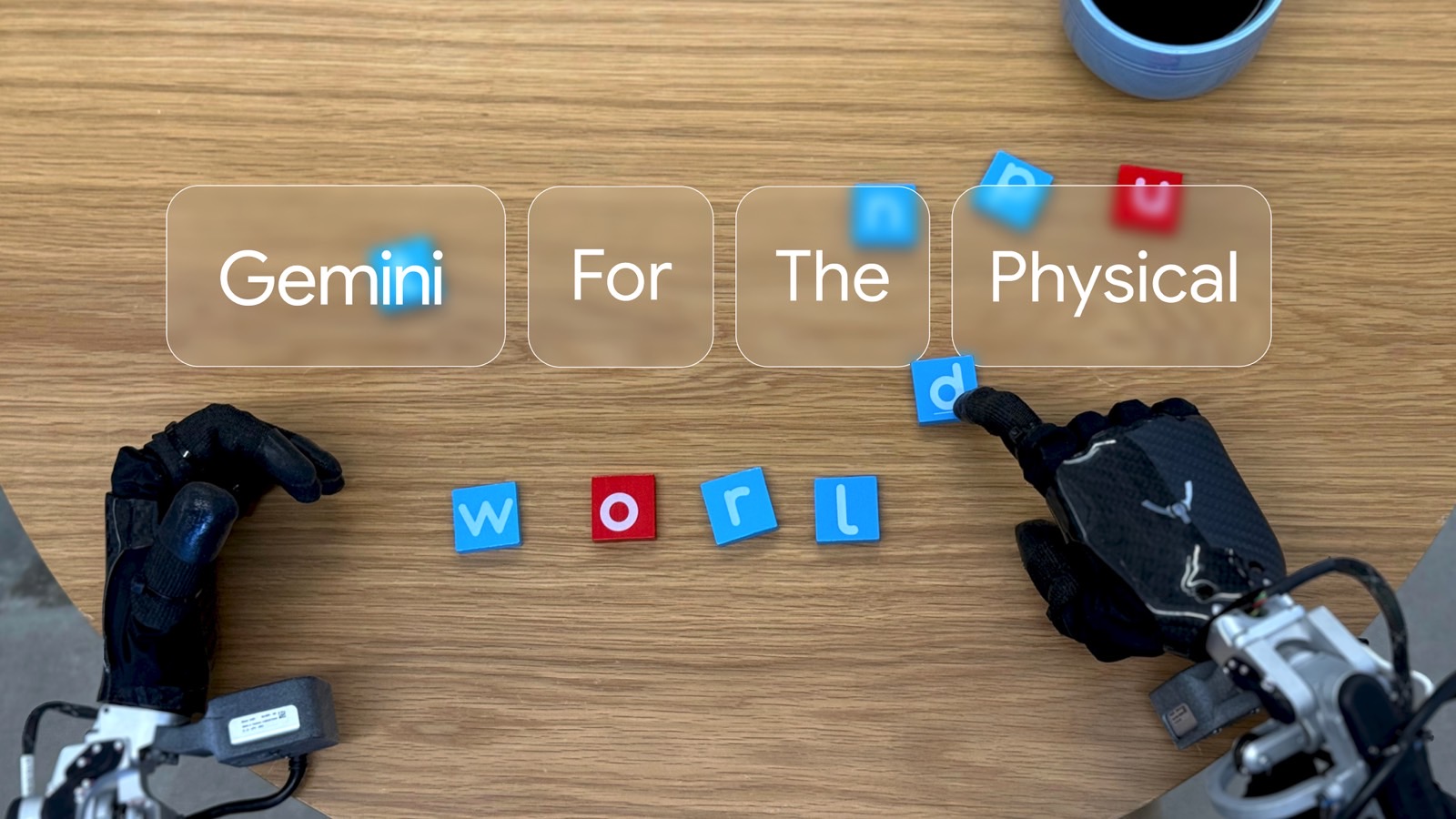

Google’s new Gemini Robotics AI models are blowing my mind

- Google has announced new Gemini Robotics AI models that aim to improve how robots understand human commands and their surroundings, and how they perform actions they were not trained on.

- These AI models, similar to Figure's Helix Vision-Language-Action (VLA) model, will help robots perform tasks given by humans and interact with the physical world.

- While humanoid robot helpers for homes are still in the early stages, Google's Gemini Robotics models lay the groundwork for future advancements in AI robotics.

- Developing AI robots that can physically handle real-world objects remains a work in progress, alongside advances in related areas such as smart glasses and camera-equipped devices.
