Livescience

Image Credit: Livescience

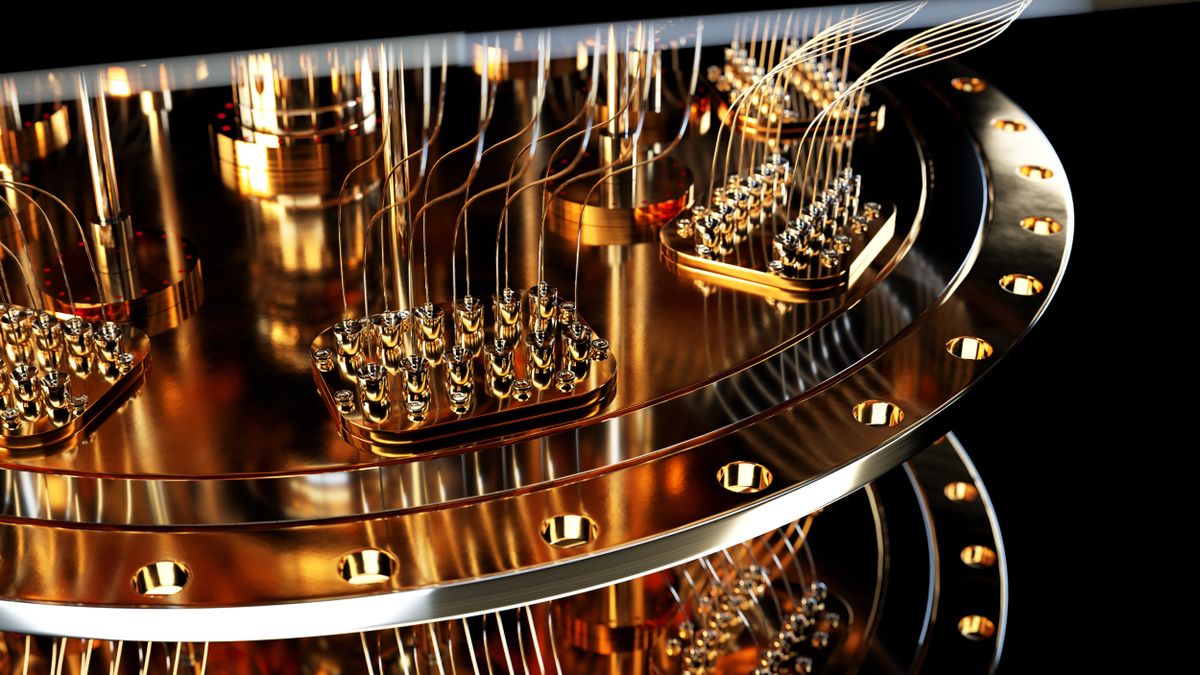

History of quantum computing: 12 key moments that shaped the future of computers

- The idea of quantum computing was introduced by American physicist Paul Benioff in 1980, when he proposed a quantum version of a Turing machine described by the equations of quantum mechanics.

- The famous physicist Richard Feynman is often credited with popularizing quantum computing with his speech on using computers to simulate physics at the first Physics of Computation Conference in 1981.

- David Deutsch pointed out in 1985 that because the universal computer described by Turing relied on classical physics, it would be unable to simulate a quantum computer. He reformulated Turing's work using the principles of quantum mechanics to describe a universal quantum computer.

- In 1994, American mathematician Peter Shor introduced the first killer use case for quantum computers: a quantum algorithm that could efficiently factor large numbers.

- Bell Labs computer scientist Lov Grover proposed a quantum algorithm for unstructured search in 1996. The Grover algorithm uses the quantum phenomenon of superposition to speed up the search process.

- IBM researcher Isaac Chuang and his team made a breakthrough by implementing Grover's algorithm on a computer featuring two qubits in 1998. They also led the first implementation of Shor's algorithm three years later on a seven-qubit processor.

- In 1999, physicists at Japanese technology company NEC used superconducting circuits to create qubits. Superconducting qubits are now used by many of the leading quantum computing companies, including Google and IBM.

- D-Wave, a Canadian company, launched the first commercially available quantum computer, D-Wave One, in May 2011. The device featured 128 superconducting qubits but was not a universal quantum computer.

- IBM made its five-qubit processor available over the cloud in May 2016, allowing people from outside the company to run quantum computing jobs on its hardware.

- Google claimed to have achieved quantum supremacy in September 2019, when it used 53 qubits to perform a calculation in 200 seconds that it said would take a supercomputer roughly 10,000 years to complete.

- A group from the Chinese Academy of Sciences and other institutions showed in 2022 that Google’s claim was overstated by devising a classical algorithm that could simulate Google’s quantum operations in just 15 hours on 512 GPU chips.

- In 2023, Harvard researchers working with start-up QuEra achieved a major milestone by generating 48 logical qubits at once – 10 times more than anyone had previously achieved.
