Byte Byte Go

Image Credit: Byte Byte Go

How Canva Collects 25 Billion Events a Day

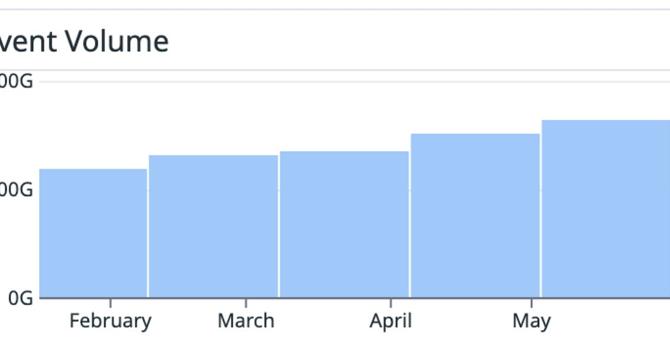

- Canva collects 25 billion events a day, turning user actions into analytics events. The article focuses on the plumbing behind that pipeline rather than the dashboards built on top of it.

- Schema governance, batch compression, and a router architecture keep that flow of events manageable.

- The analytics pipeline at Canva is structured into three core stages: Structure, Collect, and Distribute.

- Every event is defined by a strict Protobuf schema that must stay forward and backward compatible; Datumgen enforces the schema rules.

- Ingestion uses a unified client and an AWS Kinesis-backed pipeline that buffers incoming events and enriches them asynchronously.

- Canva's ingestion endpoint validates events against their schemas, enriches them, and forwards them to downstream consumers via Kinesis streams.

- Decoupled routing delivers events to each destination independently, including Snowflake, Kinesis, and SQS queues.

- Canva reduced infrastructure costs by moving to Kinesis and compressing event batches, while keeping SQS as a fallback.

- The pipeline combines fundamentals such as strict schemas, smart batching, and decoupled services to handle billions of events reliably and cost-effectively.

- The key lesson for scaling analytics is to build for reliability, cost efficiency, and long-term clarity.

- Canva's approach demonstrates the importance of balancing simplicity and flexibility in designing systems for high-scale event collection and analysis.
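
The stages summarized above (validate and enrich, compress a batch, fall back to a second queue, then route to independent destinations) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Canva's implementation: JSON and `zlib` stand in for Protobuf and whatever compression codec the real pipeline uses, plain callables stand in for Kinesis, SQS, and Snowflake, and every name here (`REQUIRED_FIELDS`, `pack`, `send_with_fallback`, `route`, the `country` field) is hypothetical.

```python
import json
import zlib
from typing import Callable

Event = dict
Sink = Callable[[bytes], None]

# Hypothetical schema: the fields every event must carry.
REQUIRED_FIELDS = {"event_type", "user_id"}


def validate(event: Event) -> bool:
    """Stand-in for schema validation: check that required fields exist."""
    return REQUIRED_FIELDS.issubset(event)


def enrich(event: Event) -> Event:
    """Stand-in for enrichment: attach a placeholder derived field."""
    return {**event, "country": "unknown"}


def pack(events: list[Event]) -> bytes:
    """Serialize a whole batch, then compress it once."""
    return zlib.compress(json.dumps(events).encode("utf-8"))


def unpack(blob: bytes) -> list[Event]:
    """Reverse of pack: decompress, then parse the batch."""
    return json.loads(zlib.decompress(blob))


def send_with_fallback(blob: bytes, primary: Sink, fallback: Sink) -> str:
    """Try the primary stream; if it raises, hand the batch to the fallback."""
    try:
        primary(blob)
        return "primary"
    except Exception:
        fallback(blob)
        return "fallback"


def route(
    blob: bytes,
    destinations: dict[str, Callable[[list[Event]], None]],
) -> dict[str, bool]:
    """Deliver one batch to every destination, isolating failures."""
    events = unpack(blob)
    results = {}
    for name, deliver in destinations.items():
        try:
            deliver(events)
            results[name] = True
        except Exception:
            # One failing destination must not block the others.
            results[name] = False
    return results
```

Compressing per batch rather than per event lets repeated field names and values compress well, and routing each destination inside its own `try` block reflects the decoupling idea: a slow or failing consumer does not hold up delivery to the rest.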
