Hackernoon

Image Credit: Hackernoon

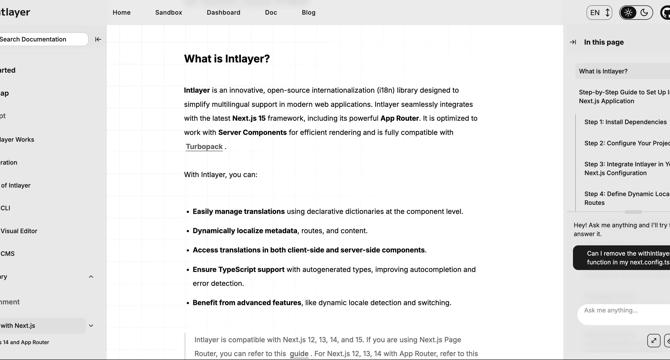

How to Build a Smart Documentation - Based on OpenAI Embeddings (Chunking, Indexing, and Searching)

- The article walks through building a 'smart documentation' chatbot: split the documentation into manageable chunks, generate an embedding for each chunk with OpenAI, and run a similarity search over those embeddings.

- The purpose is to create a chatbot that can provide answers from documentation based on user queries, using Markdown files as an example.

- The solution involves three main parts: reading documentation files, indexing the documentation through chunking and embedding, and searching the documentation.

- Documentation files can be scanned from a folder or fetched from a database or CMS.

- Indexing involves chunking documents, generating vector embeddings for each chunk, and storing embeddings locally.

- Chunking keeps each piece of text within the model's input limits, while overlapping adjacent chunks preserves context across chunk boundaries.

- Vector embeddings from OpenAI are used for similarity searches between user queries and document chunks.

- Cosine similarity between the query embedding and each chunk embedding is used to rank the chunks and keep the most relevant ones.

- A small Express.js endpoint integrates OpenAI's Chat API to generate responses based on the most relevant document chunks.

- The article provides code snippets and explains the process step by step, offering a template for building a chatbot with a chat-like interface.
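
The chunking and search steps summarized above can be sketched in a few lines of plain JavaScript. This is a minimal illustration, not the article's own code: the function names (`chunkText`, `cosineSimilarity`, `topMatches`), the character-based chunk sizes, and the shape of the index entries are all assumptions made for the example. In a real setup the embedding vectors would come from OpenAI's embeddings endpoint; here they are ordinary number arrays so the sketch runs without an API key.

```javascript
// Split text into fixed-size character chunks. Consecutive chunks share
// `overlap` characters so context is not lost at chunk boundaries.
// (Sizes are in characters for simplicity; a real indexer might count tokens.)
function chunkText(text, chunkSize = 500, overlap = 100) {
  if (overlap >= chunkSize) {
    throw new Error("overlap must be smaller than chunkSize");
  }
  const chunks = [];
  const step = chunkSize - overlap;
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    // Stop once the current chunk reaches the end of the text.
    if (start + chunkSize >= text.length) break;
  }
  return chunks;
}

// Cosine similarity of two equal-length vectors: dot(a, b) / (|a| * |b|).
function cosineSimilarity(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid dividing by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Score every indexed chunk against the query embedding and return the
// k best matches, highest score first.
// `index` is a hypothetical array of { text, embedding } objects.
function topMatches(queryEmbedding, index, k = 3) {
  return index
    .map((entry) => ({
      ...entry,
      score: cosineSimilarity(queryEmbedding, entry.embedding),
    }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}
```

The text of the chunks returned by `topMatches` would then be passed as context to the chat model, which is the role the Express.js endpoint plays in the article.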
