ByteByteGo

Image Credit: ByteByteGo

How Uber Eats Handles Billions of Daily Search Queries

- Uber Eats set out to expand merchant selection by adding new business lines such as groceries, retail, and package delivery, without compromising search latency or result quality.

- Search on every surface (the home feed, search, suggestions, and ads) had to absorb the resulting growth in scale efficiently.

- The main challenges were vertical and geographic expansion, growing search volume, and latency pressure; together they required a complete overhaul of the search platform.

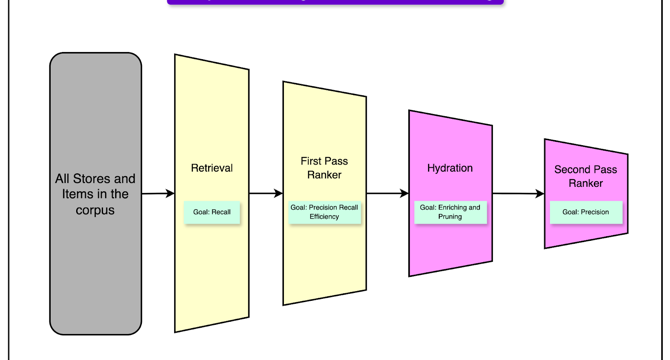

- Uber Eats' multi-stage search architecture covered ingestion, indexing, retrieval, ranking, and query execution, with each layer optimized for scalability and performance.

- Ingestion combined batch and streaming paths with priority-aware ingestion, so that different kinds of updates were handled efficiently.

- The retrieval layer focused on recall-based retrieval and geo-aware matching, while ranking involved lexical matching, fast filtering, and efficiency-focused processing.

- The key solutions were index layout optimizations, hex-based sharding, and ETA-aware range indexing, which together improved performance, latency, and the relevance of search results.

- The improved index layouts significantly reduced both retrieval latency and P95 latency and shrank the index, making the whole system more efficient.

- Key lessons from scaling Uber Eats' search system: document organization, sharding strategy, storage access optimization, and observability all matter for system-wide improvements.

- Aligning document layout with query behavior, tuning the sharding strategy, and running ETA-based queries in parallel were crucial for scaling the system.
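The summary does not describe how priority-aware ingestion works internally. As a hedged illustration only, the sketch below assumes each index update is tagged with a priority, so that urgent changes (here, a hypothetical store-availability update) reach the index before low-impact ones such as menu text, regardless of whether they arrived via batch or streaming. `PRIORITY`, `Update`, and `PriorityIngestQueue` are invented names, not part of Uber's system.

```python
import heapq
import itertools
from dataclasses import dataclass, field

# Hypothetical update kinds and priorities: lower number = applied sooner.
PRIORITY = {"availability": 0, "price": 1, "menu_text": 2}


@dataclass(order=True)
class Update:
    priority: int
    seq: int  # arrival order; breaks ties within the same priority (FIFO)
    doc_id: str = field(compare=False)
    kind: str = field(compare=False)
    payload: dict = field(compare=False)


class PriorityIngestQueue:
    """Buffers index updates (from batch or streaming sources) and drains urgent ones first."""

    def __init__(self) -> None:
        self._heap: list = []
        self._seq = itertools.count()

    def submit(self, doc_id: str, kind: str, payload: dict) -> None:
        update = Update(PRIORITY[kind], next(self._seq), doc_id, kind, payload)
        heapq.heappush(self._heap, update)

    def drain(self, max_items: int) -> list:
        """Pop up to max_items updates, highest priority (lowest number) first."""
        batch = []
        while self._heap and len(batch) < max_items:
            batch.append(heapq.heappop(self._heap))
        return batch
```

For example, submitting a menu-text edit and then an availability change that closes a store, followed by `drain(1)`, returns the availability change first, so the closed store could drop out of results before cosmetic edits land.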
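The summary names hex sharding, ETA-aware range indexing, and parallel ETA-based queries without detailing them. The sketch below is a hypothetical illustration of how those ideas can fit together: documents are grouped by geo cell and ETA bucket, one range query per bucket runs in parallel, and results are merged from the fastest bucket to the slowest. The string cell ids stand in for hexagon ids (for example from Uber's H3 grid), and `Store`, `ETA_RANGES`, `build_index`, and `search` are invented names, not Uber's API.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class Store:
    store_id: str
    cell: str     # hypothetical geo cell id, standing in for a hexagon id
    eta_min: int  # estimated delivery time in minutes


# Hypothetical ETA buckets in minutes, half-open [lo, hi), in ascending order.
ETA_RANGES = [(0, 15), (15, 30), (30, 45), (45, 60)]


def build_index(stores):
    """Group documents by (cell, ETA bucket); stores outside every bucket are skipped."""
    index = {}
    for s in stores:
        for bucket, (lo, hi) in enumerate(ETA_RANGES):
            if lo <= s.eta_min < hi:
                index.setdefault((s.cell, bucket), []).append(s)
                break
    return index


def search(index, cells, k):
    """Query every ETA bucket in parallel, then merge buckets from fastest to slowest."""

    def query_bucket(bucket):
        hits = []
        for cell in cells:
            hits.extend(index.get((cell, bucket), []))
        return sorted(hits, key=lambda s: (s.eta_min, s.store_id))

    with ThreadPoolExecutor(max_workers=len(ETA_RANGES)) as pool:
        # pool.map preserves input order, so results stay in ascending ETA order.
        per_bucket = list(pool.map(query_bucket, range(len(ETA_RANGES))))

    results = []
    for hits in per_bucket:
        results.extend(hits)
        if len(results) >= k:
            break  # faster buckets already filled the page
    return results[:k]
```

The threads here only illustrate the fan-out; in Python this CPU-bound toy work would not actually run faster. In a real search engine each range query would typically scan a separate, smaller slice of the index, which is where parallelism would pay off.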
