Dev

2w

Image Credit: Dev

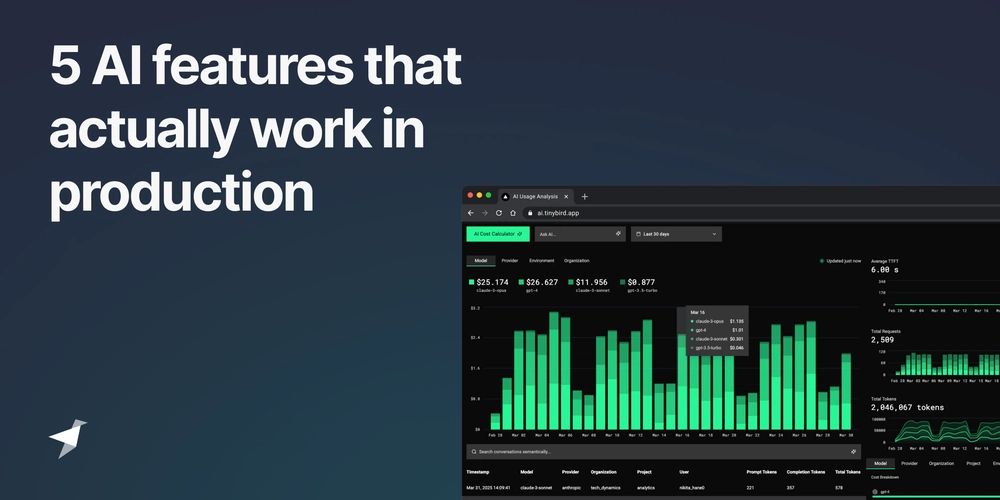

Hype v. Reality: 5 AI features that work in production

- The AI ecosystem is fragmenting across many frameworks, and developers who can build production-ready AI features are becoming more important.

- Vector search, a technique for finding similar items in a database, is practical for real-world AI use cases.

- Implementing vector search involves calculating embeddings for data, storing them in a database, and using a vector search engine to match queries.

- Filtering dashboards with AI lets users type free-text filters that an LLM interprets, enabling quick and efficient data exploration in real time.

- Visualizing data with AI through free-text queries gives users a flexible, customizable alternative to predefined dashboards.

- Auto-fix with AI and explain with AI features use LLMs to fix errors and to explain them, respectively, improving developer productivity and support.

- Creating a system prompt, gathering context, and querying the LLM are common steps in implementing features like filter, visualize, auto-fix, and explain with AI.

- By making documentation LLM-friendly and integrating with AI agents, technical companies can enhance support services and streamline workflow processes.

- Building practical AI features instead of focusing solely on hype can bring tangible value to users, showcasing the importance of implementation over theoretical concepts.

- A demo AI usage analysis app shows how to apply these AI features effectively, with Tinybird serving as the analytics backend that implements them.
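
The vector search steps summarized above (compute embeddings, store them, match queries against them) can be sketched in a few lines. This is a minimal illustration, not the article's implementation: `embed` is a toy word-count stand-in for a real embedding model, the "database" is an in-memory list, and all names here are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model (assumption): lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[key] * b[key] for key in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def build_index(docs: list[str]) -> list[tuple[str, Counter]]:
    """Step 1 and 2: compute an embedding per document and store it."""
    return [(doc, embed(doc)) for doc in docs]


def search(index: list[tuple[str, Counter]], query: str, k: int = 1) -> list[str]:
    """Step 3: embed the query and return the k most similar documents."""
    query_vec = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


# Hypothetical sample data for illustration only.
DOCS = [
    "reset your account password",
    "export dashboard data to csv",
    "configure billing alerts",
]
INDEX = build_index(DOCS)
```

Calling `search(INDEX, "how do I reset my password")` ranks the password document first because it shares the most words with the query. A production setup would swap `embed` for a real embedding model and the brute-force sort for a vector search engine.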
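
The shared pattern noted above (system prompt, gathered context, LLM query) might look like the following for a free-text dashboard filter. This is a sketch under stated assumptions: the `llm` callable, the prompt wording, and the filter names are all hypothetical, and `fake_llm` stands in for a real model call so the example runs offline.

```python
import json
from typing import Callable

# Hypothetical system prompt; the wording is an assumption, not the article's.
SYSTEM_PROMPT = (
    "Convert the user's request into a JSON object of dashboard filters. "
    "Use only the allowed keys and values given in the context. "
    "Reply with JSON only."
)

Message = dict[str, str]


def build_messages(user_text: str, allowed: dict[str, list[str]]) -> list[Message]:
    """Combine the system prompt, the gathered context, and the user's text."""
    context = json.dumps(allowed, sort_keys=True)
    return [
        {"role": "system", "content": f"{SYSTEM_PROMPT}\nAllowed filters: {context}"},
        {"role": "user", "content": user_text},
    ]


def text_to_filters(
    user_text: str,
    allowed: dict[str, list[str]],
    llm: Callable[[list[Message]], str],
) -> dict[str, str]:
    """Query the LLM, then keep only filters that pass validation."""
    raw = llm(build_messages(user_text, allowed))
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        return {}  # Unparseable reply: apply no filters.
    if not isinstance(parsed, dict):
        return {}
    # Never trust model output blindly: drop unknown keys and values.
    return {k: v for k, v in parsed.items() if k in allowed and v in allowed[k]}


def fake_llm(messages: list[Message]) -> str:
    """Offline stand-in for a real LLM call (assumption)."""
    return '{"model": "model-a", "region": "mars", "environment": "dev"}'


# Hypothetical filter dimensions for illustration only.
ALLOWED = {"model": ["model-a", "model-b"], "environment": ["prod", "staging"]}
```

Validating the reply against the allowed values matters because the model can return keys or values the dashboard does not support; here `region` and `environment: dev` are discarded and only `model` survives.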
