Medium

Image Credit: Medium

I tested out all of the best language models for frontend development. One model stood out.

- DeepSeek V3 and Google's Gemini 2.5 Pro outperformed other language models in benchmark tests for frontend development.

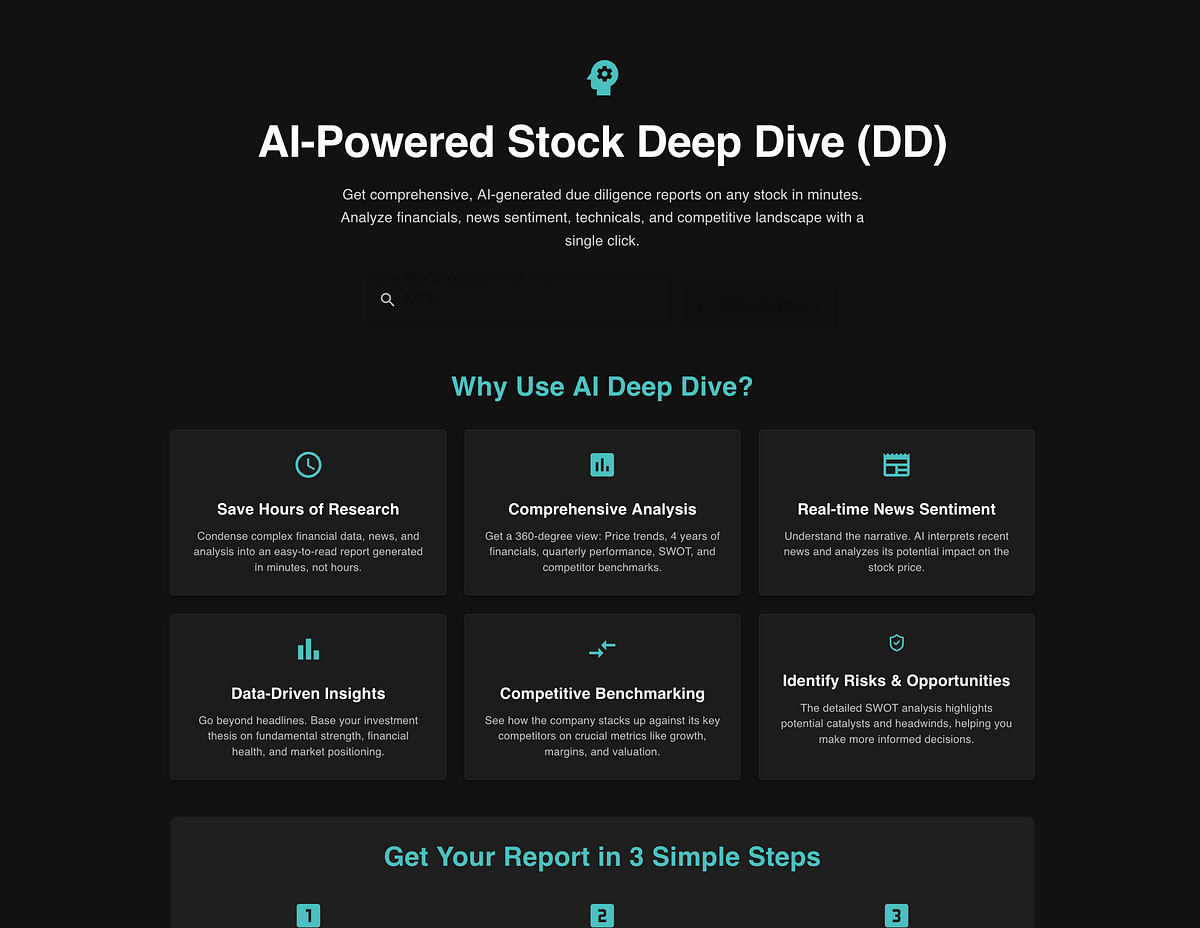

- The article explores how these models perform on a frontend development task related to building an algorithmic trading platform feature called 'Deep Dives'.

- Language models like Grok 3, Gemini 2.5 Pro, DeepSeek V3, and Claude 3.7 Sonnet were tested for generating a landing page for the feature.

- Grok 3 produced basic output. Gemini 2.5 Pro excelled but was ultimately outperformed by DeepSeek V3 and Claude 3.7 Sonnet.

- Claude 3.7 Sonnet stood out by delivering sophisticated frontend landing pages with new components and SEO-optimized content.

- All models produced clean, readable code, but differences in visual elements and code quality were notable.

- Gemini 2.5 Pro and Claude 3.7 Sonnet both used templates effectively, with Claude standing out for high-quality, maintainable code.

- Claude 3.7 Sonnet ranked highest on code quality, but the choice of model also depends on how much manual cleanup the output needs, cost-performance trade-offs, and each model's capabilities.

- The comparison shows AI's advancement in handling complex frontend tasks, with Claude 3.7 Sonnet emerging as the preferred model for quality and design aesthetics.

- Ultimately, the choice of a language model depends on which priorities matter most in a frontend development workflow: quality, speed, or cost.

- The article highlights progress in AI's frontend development capabilities while noting that even Claude 3.7 Sonnet's superior output still needs human oversight.
