Eletimes

7d


Image Credit: Eletimes

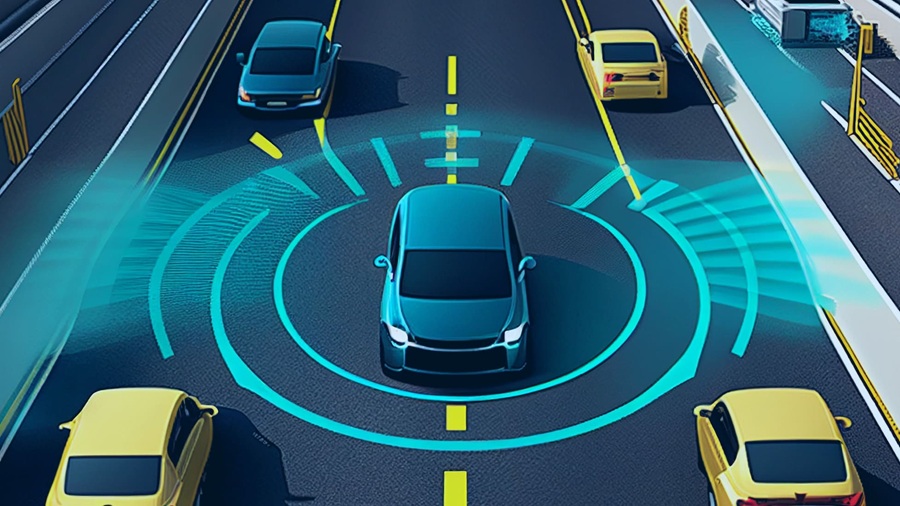

Inside the AI & Sensor Technology Underpinning Level 3 Driving: Beyond ADAS

- The transition in the automobile industry from conventional ADAS to Level 3 autonomous driving is driven by advancements in artificial intelligence, sensor fusion, edge computing, and functional safety.

- Level 3 autonomy allows the vehicle to take over dynamic driving responsibilities under specific conditions, unlike Level 2 which requires continuous driver supervision.

- Level 3 deployments are being approved in countries such as China, Japan, and Germany, and they require robust technical foundations for real-time decision-making and sensor redundancy.

- Level 3 vehicles use high-performance SoCs for centralized computing and combine cameras, radar, and LiDAR for sensor fusion and environmental perception.

- Safety measures in Level 3 include redundant actuation systems, adherence to the ISO 26262 functional safety standard, and AI-driven perception, prediction, and planning functions.

- Other crucial aspects of Level 3 development include localization with SLAM and HD maps and real-time inference with edge AI, alongside challenges such as regulatory inconsistencies, cost, cybersecurity, and driver handover.

- Level 3 autonomy represents a significant advancement in automotive engineering, setting the stage for future full autonomy and emphasizing the collaboration of AI, mechatronics, embedded systems, and regulatory science.

- However, regulatory variations across regions, cost implications, cybersecurity concerns, and driver handover challenges remain key hurdles in the widespread implementation of Level 3 systems.

- The evolution toward Level 3 driving shows the potential of AI-driven technologies to reshape the automotive industry, pointing to a future in which vehicles drive themselves under defined conditions while the driver remains available to take back control.
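
The sensor fusion and redundancy points above can be made concrete with a small sketch. The summary does not say how production Level 3 stacks fuse camera, radar, and LiDAR data, so this example swaps in a textbook technique, inverse-variance weighting, applied to one quantity: the distance to a lead vehicle. The `RangeReading` type, the `fuse_ranges` function, and all noise values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RangeReading:
    """One sensor's estimate of the distance to the lead vehicle (hypothetical type)."""
    sensor: str        # e.g. "camera", "radar", "lidar"
    distance_m: float  # estimated distance in metres
    variance: float    # assumed measurement noise variance in m^2


def fuse_ranges(readings: List[RangeReading]) -> Tuple[float, float]:
    """Fuse independent readings with inverse-variance weighting.

    Returns the fused distance and the variance of the fused estimate.
    Less noisy sensors receive proportionally more weight.
    """
    if not readings:
        raise ValueError("at least one sensor reading is required")
    if any(r.variance <= 0 for r in readings):
        raise ValueError("variances must be positive")

    weights = [1.0 / r.variance for r in readings]
    total_weight = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total_weight
    return fused, 1.0 / total_weight


# Illustrative noise levels only; real sensor characteristics differ.
all_sensors = [
    RangeReading("camera", 52.0, 4.0),
    RangeReading("radar", 50.0, 1.0),
    RangeReading("lidar", 50.5, 0.25),
]
fused_all, var_all = fuse_ranges(all_sensors)

# Redundancy: if LiDAR drops out, the remaining sensors still yield an
# estimate, only with a larger uncertainty.
fused_degraded, var_degraded = fuse_ranges(all_sensors[:2])
```

Under these assumptions the fused variance is always lower than that of the best single sensor, and losing one sensor widens the uncertainty rather than removing the estimate. That is one simple way to see why the summary pairs sensor fusion with redundancy.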
