Feedspot

Image Credit: Feedspot

LLM Observability and Monitoring: The Key to Building Reliable and Secure AI Applications

- LLM observability and monitoring are crucial for building reliable and secure AI applications, as incidents such as Air Canada's chatbot giving a passenger incorrect information about its bereavement fare policy have shown.

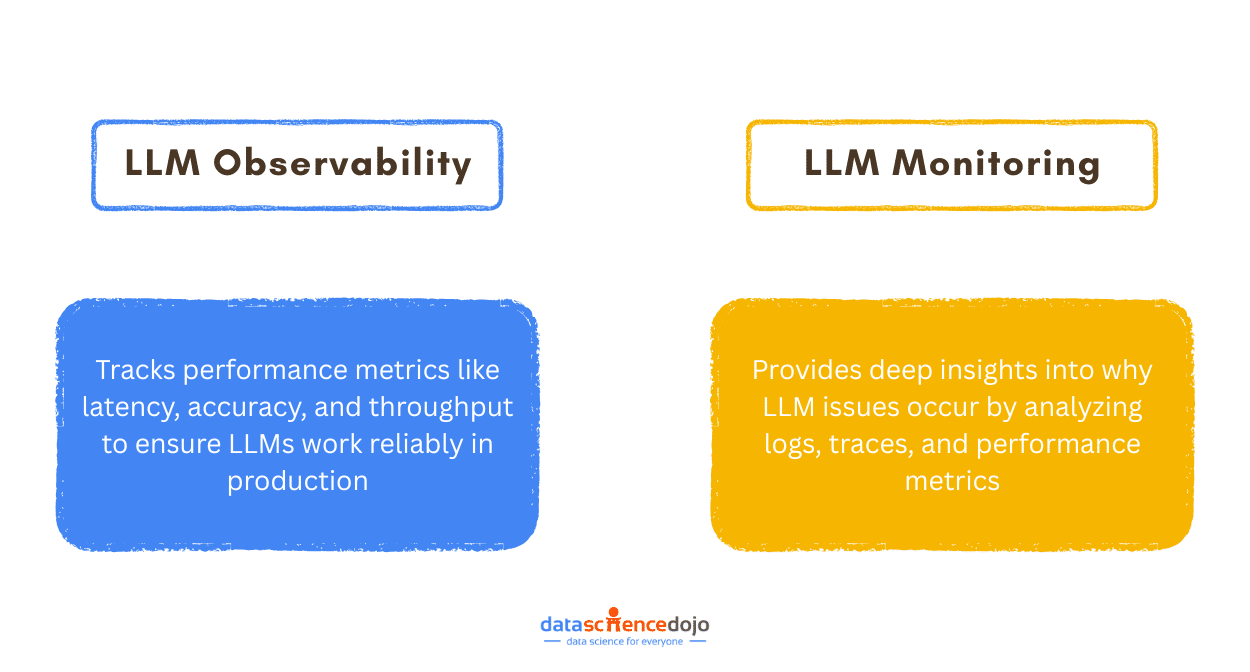

- Monitoring tracks the behavior and performance of AI models, while observability delves deeper into diagnosing issues by analyzing logs, metrics, and traces.

- LLM monitoring assesses whether the model is functioning correctly; observability goes further by explaining why an issue occurs, which enables root cause analysis.

- Tracking key metrics like response time, token usage, and requests per second is essential for optimizing the efficiency and reliability of LLMs.

- Observability data (logs, traces, and metrics) helps identify the root causes of issues such as inaccurate responses or high latency, which makes troubleshooting more efficient.

- Running LLMs without proper monitoring and observability exposes applications to risks such as prompt injection attacks, incorrect responses, and privacy breaches.

- Continuous monitoring of responses and user feedback is critical for maintaining accuracy and relevance, especially in high-stakes domains like healthcare and legal services.

- LLM monitoring and observability enable early detection of glitches, cost optimization, better user experiences, and stronger system security.

- Investing in monitoring and observability practices helps ensure the reliability, scalability, and trustworthiness of AI systems, which in turn improves performance and user satisfaction.

- Observability and monitoring are vital for the future of AI applications, especially as we advance towards more agentic AI systems that require real-time tracking and diagnostics.

- Strong monitoring and observability practices are essential to ensure the long-term success and evolution of AI systems, separating those that simply work from those that excel.
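For continuous monitoring of user feedback, one simple approach is to keep a rolling window of recent ratings and raise an alert when the share of positive ratings drops below a threshold. The sketch below assumes binary thumbs-up/thumbs-down feedback; the window size, threshold, and minimum sample count are arbitrary illustrative defaults, and `FeedbackMonitor` is a hypothetical name.

```python
from collections import deque
from typing import Deque


class FeedbackMonitor:
    """Tracks the share of positive user ratings over the most recent responses."""

    def __init__(self, window: int = 100, min_positive_rate: float = 0.8, min_samples: int = 20) -> None:
        # Illustrative defaults; tune per application and risk level.
        self.ratings: Deque[bool] = deque(maxlen=window)  # oldest ratings drop off automatically
        self.min_positive_rate = min_positive_rate
        self.min_samples = min_samples

    def add(self, positive: bool) -> None:
        self.ratings.append(positive)

    def positive_rate(self) -> float:
        # An empty window is treated as healthy (rate 1.0).
        return sum(self.ratings) / len(self.ratings) if self.ratings else 1.0

    def should_alert(self) -> bool:
        # Require a minimum number of ratings so a few early votes don't trigger alerts.
        return len(self.ratings) >= self.min_samples and self.positive_rate() < self.min_positive_rate


if __name__ == "__main__":
    monitor = FeedbackMonitor(window=10, min_positive_rate=0.8, min_samples=5)
    for vote in [True, False, False, True, False]:
        monitor.add(vote)
    print(monitor.positive_rate(), monitor.should_alert())  # prints: 0.4 True
```

A fixed-size `deque` keeps memory constant and makes the rate reflect recent behavior rather than an all-time average, so a sudden drop in answer quality shows up quickly.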
