Dev

Image Credit: Dev

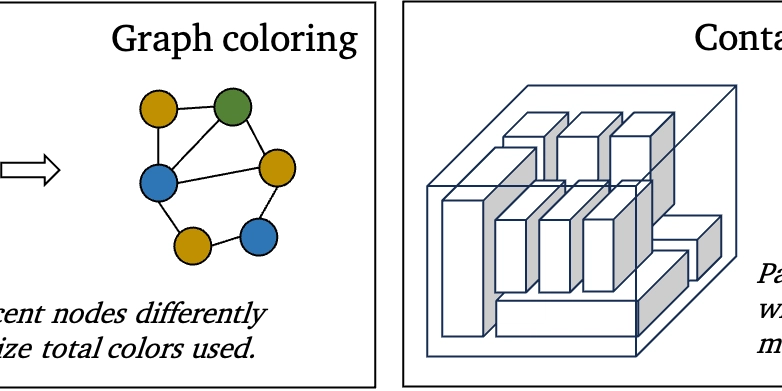

LLMs vs. Optimization: AI Struggles, Teams Excel - New CO-Bench Benchmark Reveals Gaps

- CO-Bench is a new testing framework that measures how well AI language models can solve complex optimization problems.

- CO-Bench results show that language models struggle with algorithm design.

- However, the study also shows that collaboration among multiple AI agents can improve performance across tasks.

- The benchmark evaluated four language models: GPT-4, Claude 3, Gemini, and Llama 3.
