Dev

Image Credit: Dev

LocalLLMClient: A Swift Package for Local LLMs Using llama.cpp and MLX

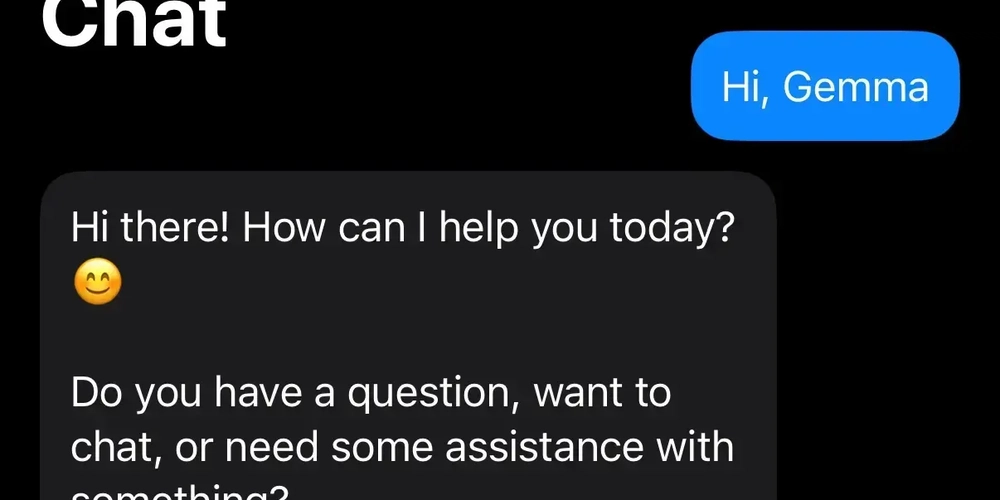

- LocalLLMClient is a Swift package that enables the use of local Large Language Models (LLMs) on Apple platforms.

- It runs models such as MobileVLM-3B (via llama.cpp) and Qwen2.5 VL 3B (via MLX) on iOS and macOS.

- Its features include GGUF and MLX model support, iOS and macOS compatibility, a streaming API, and experimental multimodal support.

- The library wraps the llama.cpp and Apple MLX backends behind a unified interface, so the same client code can interact with LLMs on either backend.

- Basic usage involves importing the relevant modules, downloading a model from Hugging Face, and generating text with the chosen generation parameters.

- The text generation examples include writing a story with a cat as the protagonist and generating song lyrics from an input image.

- The LocalLLMClientUtility module adds helpers such as FileDownloader for managing model downloads efficiently.

- The author emphasizes on-device processing for its privacy and cost benefits and thanks the community for supporting local LLM development.

- Overall, LocalLLMClient aims to be a convenient option for Swift developers who want to experiment with AI on Apple platforms.

- Supporting the project through contributions or stars on GitHub is encouraged.
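The idea of one interface over two backends with a streaming API can be illustrated without the real package. The sketch below is hypothetical: `TextGenerating`, `GenerationParameters`, `EchoBackend`, and `collect` are illustrative names, not LocalLLMClient's actual API. It assumes each backend (for example, a llama.cpp wrapper and an MLX wrapper) adopts a shared protocol that returns text chunks as an `AsyncThrowingStream`, so calling code does not change when the backend does.

```swift
// Hypothetical sketch: these types are NOT LocalLLMClient's real API.

/// Sampling settings a caller might pass to any backend (illustrative fields only).
struct GenerationParameters {
    var temperature: Double = 0.7
    var maxTokens: Int = 64
}

/// One protocol that every backend (e.g. a llama.cpp or an MLX wrapper) could adopt.
protocol TextGenerating {
    var name: String { get }
    func textStream(prompt: String, parameters: GenerationParameters) -> AsyncThrowingStream<String, Error>
}

/// A stand-in backend that "generates" by echoing the prompt word by word,
/// so the streaming plumbing can run without loading a real model.
struct EchoBackend: TextGenerating {
    let name: String

    func textStream(prompt: String, parameters: GenerationParameters) -> AsyncThrowingStream<String, Error> {
        // Treat each word as one "token" and stop after maxTokens of them.
        let words = prompt.split(separator: " ").prefix(parameters.maxTokens).map(String.init)
        return AsyncThrowingStream { continuation in
            for word in words {
                continuation.yield(word + " ")
            }
            continuation.finish()
        }
    }
}

/// Caller code that depends only on the protocol, never on a concrete backend.
func collect(from backend: any TextGenerating, prompt: String,
             parameters: GenerationParameters = GenerationParameters()) async throws -> String {
    var output = ""
    for try await chunk in backend.textStream(prompt: prompt, parameters: parameters) {
        output += chunk  // a UI would append each chunk as soon as it arrives
    }
    return output
}

// The same call works for both stand-in backends.
let backends: [any TextGenerating] = [EchoBackend(name: "llama.cpp-like"), EchoBackend(name: "mlx-like")]
for backend in backends {
    let text = try await collect(from: backend, prompt: "A cat sets out on an epic journey",
                                 parameters: GenerationParameters(maxTokens: 4))
    print("\(backend.name): \(text)")
}
```

Keeping the stream as the primary return type means a non-streaming call is just a loop that concatenates chunks, as `collect` does.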
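FileDownloader's role in managing model downloads can be illustrated with one common technique: only fetching files that are not already on disk. The sketch below is hypothetical and is not LocalLLMClientUtility's FileDownloader; `ModelCache`, the repository id, and the file names are placeholders. The only external detail it relies on is Hugging Face's public `resolve/main` download URL pattern, and it performs no network access.

```swift
import Foundation

// Hypothetical sketch: ModelCache is NOT LocalLLMClientUtility's FileDownloader.
// It shows one way to avoid re-downloading model files that already exist locally.
struct ModelCache {
    let root: URL  // local directory that holds downloaded models

    /// Public Hugging Face download URL for a file on a repository's main branch.
    func remoteURL(repo: String, file: String) -> URL {
        URL(string: "https://huggingface.co/\(repo)/resolve/main/\(file)")!
    }

    /// Where a given file from a given repo is stored on disk.
    func localURL(repo: String, file: String) -> URL {
        root.appendingPathComponent(repo).appendingPathComponent(file)
    }

    /// Files from `files` that are not yet on disk and therefore still need downloading.
    func missingFiles(repo: String, files: [String]) -> [String] {
        files.filter { !FileManager.default.fileExists(atPath: localURL(repo: repo, file: $0).path) }
    }
}

let root = FileManager.default.temporaryDirectory.appendingPathComponent("model-cache-demo")
let cache = ModelCache(root: root)
let repo = "example-org/example-model-GGUF"           // placeholder repository id
let files = ["model-Q4_K_M.gguf", "mmproj.gguf"]     // placeholder file names

// Simulate a previous download of the first file with an empty placeholder file.
let existing = cache.localURL(repo: repo, file: files[0])
try FileManager.default.createDirectory(at: existing.deletingLastPathComponent(),
                                        withIntermediateDirectories: true)
FileManager.default.createFile(atPath: existing.path, contents: Data())

// Only the file that is not on disk yet would be fetched.
for file in cache.missingFiles(repo: repo, files: files) {
    print("would download \(cache.remoteURL(repo: repo, file: file))")
}
```

A real downloader would also need progress reporting and protection against partially written files, which this sketch leaves out.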
