Fb

Image Credit: Fb

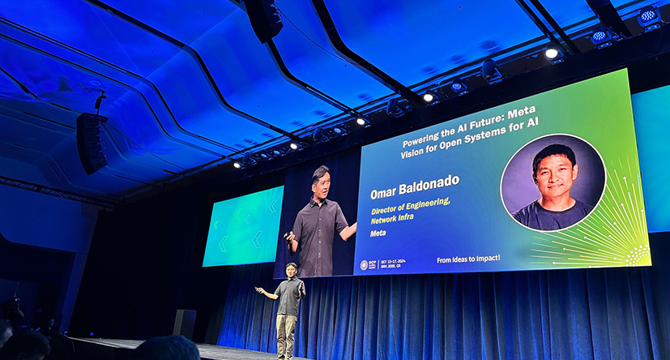

Meta’s open AI hardware vision

- At the Open Compute Project (OCP), Meta showcased their open AI hardware designs, including a new AI platform, advanced open rack designs, and network fabrics and components.

- Meta's increasingly large AI workloads have pushed their infrastructure to operate across more than 16,000 NVIDIA H100 GPUs and to grow to two 24K-GPU clusters. Operating at this scale requires a high-performance, multi-tier, non-blocking network fabric that can use modern congestion control.

- Meta expects the amount of compute needed for AI training to grow significantly from today's levels.

- To support this growth, they need open hardware solutions to develop new architectures, network fabrics, and system designs.

- They announced Catalina, their new high-powered rack designed for AI workloads, and expanded their Grand Teton platform to support the AMD Instinct MI300X. They have also developed the Disaggregated Scheduled Fabric (DSF), an open network fabric intended to overcome limitations in scale, component supply options, and power density.

- Meta is committed to open-source AI and believes prioritizing open and standardized models and frameworks alongside open AI hardware systems is essential for AI advancements.

- Meta encourages those passionate about building the future of AI to engage with the OCP community to unlock the true promise of open AI for everyone.
