VentureBeat

Image Credit: VentureBeat

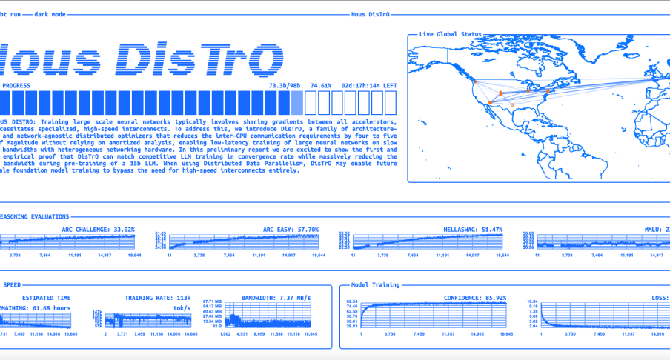

Nous Research is training an AI model using machines distributed across the internet

- AI research group Nous Research is training a new 15-billion-parameter large language model (LLM) without expensive superclusters or low-latency connections.

- The pre-training process is distributed and livestreamed on a dedicated website.

- As of publication, more than 75 percent of the pre-training process had been completed.

- The model is pre-trained by processing extensive text datasets, capturing patterns, grammar, and contextual relationships between words.

- If successful, this novel method could open up new opportunities for distributed AI training and shift the balance of power in AI development.

- The company's open-source distributed training technology is called Nous DisTrO (Distributed Training Over-the-Internet).

- DisTrO reduces inter-GPU communication bandwidth requirements by up to 10,000 times during pre-training, allowing models to be trained on slower and more affordable internet connections.

- DisTrO's core breakthrough lies in its ability to efficiently compress the data exchanged between GPUs without sacrificing model performance.

- By reducing centralised control, the approach could also extend to decentralised federated learning and to training diffusion models for image generation.

- The project could reshape how AI is developed, with wide-ranging potential applications.
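The summary does not say how DisTrO compresses what GPUs exchange, so the sketch below uses a different, well-known technique, top-k gradient sparsification, purely to illustrate why sending compressed updates cuts bandwidth. It is not DisTrO's algorithm. The helper names (`topk_compress`, `decompress`, `dense_bytes`, `sparse_bytes`) are hypothetical, and it assumes 32-bit values and indices. It also works through the arithmetic behind the "up to 10,000 times" claim for a 15-billion-parameter model.

```python
import heapq
from typing import List, Tuple


def topk_compress(grad: List[float], k: int) -> List[Tuple[int, float]]:
    """Keep only the k largest-magnitude entries as (index, value) pairs.

    Hypothetical illustration of update compression (top-k sparsification);
    this is NOT the method DisTrO uses.
    """
    return heapq.nlargest(k, enumerate(grad), key=lambda iv: abs(iv[1]))


def decompress(pairs: List[Tuple[int, float]], size: int) -> List[float]:
    """Rebuild a dense vector, with zeros wherever entries were dropped."""
    dense = [0.0] * size
    for i, v in pairs:
        dense[i] = v
    return dense


def dense_bytes(num_params: int, bytes_per_value: int = 4) -> int:
    """Bytes needed to send every value (assumes 32-bit floats)."""
    return num_params * bytes_per_value


def sparse_bytes(k: int, bytes_per_index: int = 4, bytes_per_value: int = 4) -> int:
    """Bytes needed to send k (index, value) pairs (assumes 32-bit each)."""
    return k * (bytes_per_index + bytes_per_value)


if __name__ == "__main__":
    grad = [0.1, -2.0, 0.05, 3.0, -0.2]
    pairs = topk_compress(grad, 2)
    print(pairs)                                  # [(3, 3.0), (1, -2.0)]
    print(decompress(pairs, len(grad)))           # [0.0, -2.0, 0.0, 3.0, 0.0]

    # A full 32-bit exchange for 15B parameters is 60 GB;
    # a 10,000x reduction brings that to about 6 MB.
    full = dense_bytes(15_000_000_000)
    print(full, full // 10_000)                   # 60000000000 6000000
```

Because each kept entry also carries an index, naive top-k only saves bandwidth when k is well under half the parameter count. Practical schemes add further tricks (for example, accumulating dropped values locally) that this sketch leaves out.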
