Spicyip

2d

230

Image Credit: Spicyip

Part II- Applying Natural Intelligence (NI) to Artificial Intelligence (AI): Understanding ‘Why’ Training ChatGPT Transcends the Contours of Copyright

- Shivam Kaushik delves into the interaction of Large Language Models (LLMs) with non-expressive parts of copyrighted works in the context of copyright infringement.

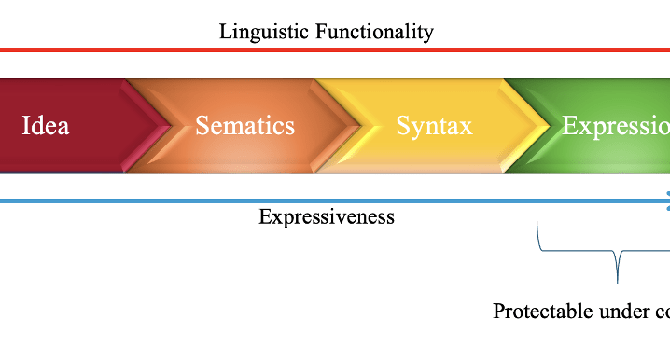

- LLMs during training abstract representations common to tokens generated from text, leading to questions about the protection of 'expression' under copyright law.

- The essence of words, syntax, and semantics in texts is considered non-expressive and falls outside copyright protection.

- Language models compress linguistic information into numeric forms, transcending the traditional boundaries of copyright protection.

- Pre-training processes strip copyrighted works of their original form, focusing on mathematical representations and patterns.

- Certain legal perspectives view non-expressive use as transformative and not infringing on copyright, as highlighted in US court cases.

- Some jurisdictions have exceptions for Computational Data Analysis, while others like India lack specific Text and Data Mining exceptions.

- Debates arise on whether AI understanding of semantic and syntactic information during training infringes copyright.

- The post emphasizes caution in generalizing legal implications of AI in copyright law, advocating for specific analysis and avoiding broad assumptions.

- The discussion focuses on the pre-training stage of LLMs, leaving room for further exploration into fine-tuning, output stages, and related aspects in future posts.

Read Full Article

13 Likes

For uninterrupted reading, download the app