Scand

Image Credit: Scand

Prompt Engineering Management System (PEMS): Optimizing Corporate AI Workflows

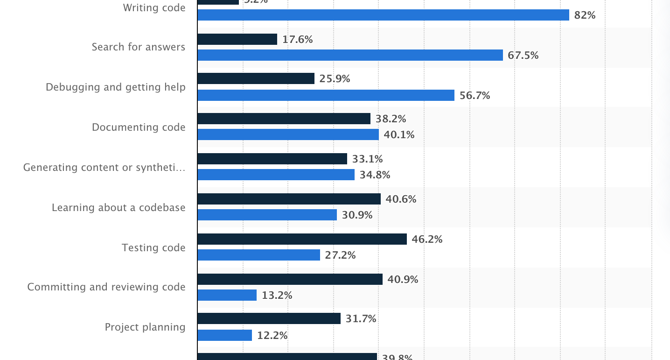

- AI and machine learning tools such as ChatGPT have transformed many industries, and around one billion people now use them for tasks like content creation and debugging.

- However, successful outcomes depend heavily on the quality and structure of the prompts given to these tools, which has led to the emergence of Prompt Engineering Management Systems (PEMS).

- Prompt engineering is the practice of composing appropriate inputs for Large Language Models (LLMs) so that they generate the desired outputs, and it is what keeps AI responses effective.

- Poorly constructed prompts can produce incorrect responses, create regulatory risks, increase token usage, and cause unpredictable behavior.

- The global prompt engineering market is expected to grow significantly from 2024 to 2030, highlighting the importance of prompt management in AI workflows.

- Common challenges in prompt management include scattered storage, a lack of version control, inconsistent tone, duplicated effort, no testing process, and security risks.

- A PEMS serves as a centralized tool for saving, testing, and improving AI prompts, ensuring high-quality inputs and standardized practices across teams.

- Key features of PEMS include a centralized repository, version control, standardized templates, probing and validation capabilities, access control, and collaboration tools.

- PEMS improves prompt quality and consistency by enabling testing, version tracking, collaboration, and feedback integration, which ultimately enhances AI performance.

- Use cases for PEMS include customer support chatbots, internal knowledge assistants, content creation, code generation, data analysis, and training and onboarding.
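To make the core PEMS features listed above more concrete, here is a minimal in-memory sketch of a prompt registry. It is not the API of any real PEMS product; the names `PromptRegistry`, `PromptVersion`, `publish`, `get`, and `render` are hypothetical. It assumes prompts are stored as Python `string.Template` strings and shows a centralized repository, a version history that is never overwritten, a simple editor allow-list for access control, and a basic validation step that refuses to render a prompt with unfilled placeholders.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from string import Template
from typing import Optional


@dataclass(frozen=True)
class PromptVersion:
    """One immutable revision of a prompt template (hypothetical structure)."""
    version: int
    template: str
    author: str
    created_at: datetime


@dataclass
class PromptRegistry:
    """Hypothetical central store mapping each prompt name to its full revision history."""
    editors: set = field(default_factory=set)  # users allowed to publish (access control)
    _history: dict = field(default_factory=dict, init=False, repr=False)

    def publish(self, name: str, template: str, author: str) -> PromptVersion:
        # Only approved editors may change shared prompts.
        if author not in self.editors:
            raise PermissionError(f"{author!r} is not allowed to edit prompts")
        history = self._history.setdefault(name, [])
        new = PromptVersion(len(history) + 1, template, author, datetime.now(timezone.utc))
        history.append(new)  # older versions are kept, never overwritten
        return new

    def get(self, name: str, version: Optional[int] = None) -> PromptVersion:
        history = self._history[name]  # KeyError if the prompt does not exist
        if version is None:
            return history[-1]  # latest version by default
        if not 1 <= version <= len(history):
            raise KeyError(f"{name!r} has no version {version}")
        return history[version - 1]

    def render(self, name: str, variables: dict, version: Optional[int] = None) -> str:
        # Template.substitute raises KeyError if any placeholder is left unfilled,
        # so an incomplete prompt never reaches the model.
        return Template(self.get(name, version).template).substitute(variables)


registry = PromptRegistry(editors={"alice"})
registry.publish("support_reply", "Answer the customer politely: $question", "alice")
registry.publish(
    "support_reply",
    "You are a support agent for $product. Answer concisely: $question",
    "alice",
)

print(registry.render("support_reply", {"product": "Acme CRM", "question": "How do I reset my password?"}))
# → You are a support agent for Acme CRM. Answer concisely: How do I reset my password?
print(registry.get("support_reply", version=1).template)
# → Answer the customer politely: $question
```

Keeping every revision rather than editing in place is what makes it possible to compare versions or roll back a change that degraded responses; a production system would add persistent storage and automated tests against real model outputs.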
