Unite


Image Credit: Unite

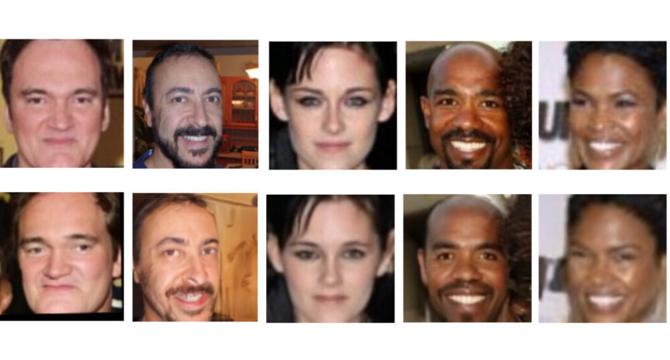

Real Identities Can Be Recovered From Synthetic Datasets

- Studies suggest that synthetic, AI-generated datasets containing ‘non-real’ examples, such as images of fake people, can leak identifiable data from the real sources they were derived from. This raises privacy concerns and the possibility that copyrighted data is still in use even when a model is trained only on AI-generated data. The risk, described in a new Swiss research paper, is that businesses relying on such synthetic data could face lawsuits if the underlying data turns out to be unauthorised, copyrighted, or IP-protected, especially in facial recognition and biometrics. To mitigate this risk, businesses try to train their AI to learn high-level concepts without replicating the actual training data.

- A 2023 investigation by the US Copyright Office concluded that businesses using generative AI models on legally questionable grounds could expose themselves to legal ramifications. The Swiss researchers examine the potential pitfalls of synthetic datasets, in which examples such as fake people can be synthesised, seemingly without needing to consider whether the underlying data is legally usable.

- While training AI systems on synthetic data can be a cost-effective alternative to training on copyrighted data, the Swiss researchers claim that the new data retains traces of the real dataset from which it is derived. They found that synthetic datasets contain images very similar to those in the original (real) dataset. This indicates that the synthetic generators have memorised training data points, which could be used to infringe privacy rights.

- To avoid this, businesses aim to train AI systems that learn high-level concepts and produce realistic examples of fake people, churches, or cats, for example. A model struggles to produce intricate details unless it is trained extensively on the dataset, which can lead to memorisation. However, if businesses set a relaxed learning rate or end training at a stage where the core concepts are still ductile and not yet tied to specific data points, the model is less likely to memorise its training data.

- To obtain granular detail, however, researchers generally apply comprehensive training schedules and high learning rates. Even slightly memorised systems can appear well-generalised, as outlined in a Swiss research paper titled “Unveiling Synthetic Faces: How Synthetic Datasets Can Expose Real Identities”, which raises concerns about the generation of synthetic facial recognition datasets.

- The Swiss researchers demonstrated that links between synthetic and real datasets can be recognised. This raises concerns about the use of synthetic data in biometrics and face recognition, given the risk of sensitive information leakage. The work is also intended to pave the way for responsible synthetic face datasets that address privacy concerns.

- Bloomberg suggested that user-supplied images from the MidJourney generative AI system had already been incorporated into Adobe's Firefly, its text-to-image and now text-to-video architecture, which is powered primarily by the Fotolia stock image dataset that Adobe acquired in 2014, supplemented by copyright-expired public domain data. Cases like this are why businesses seek to train AI models that learn high-level concepts without replicating actual training data, including by using synthetic datasets.

- Though the authors of the research promise to release code for the work, there is currently no repository link. The paper is called “Unveiling Synthetic Faces: How Synthetic Datasets Can Expose Real Identities” and comes from the Idiap Research Institute, the École Polytechnique Fédérale de Lausanne, and the Université de Lausanne in Switzerland. It was published on November 6, 2024.
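
As a rough illustration of how links between synthetic and real face datasets might be recognised, the sketch below compares face embeddings by cosine similarity and flags synthetic images whose closest real match is suspiciously similar. This is not the researchers' code, which has not been released. The function name `find_possible_leaks`, the threshold of 0.9, and the assumption that embeddings come from some face recognition model are all hypothetical choices made for the example.

```python
import numpy as np


def l2_normalise(x):
    """Scale each row to unit length so dot products become cosine similarities."""
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / np.clip(norms, 1e-12, None)


def find_possible_leaks(synthetic_emb, real_emb, threshold=0.9):
    """Return (synthetic_index, real_index, similarity) for likely matches.

    synthetic_emb, real_emb: 2-D arrays with one embedding per row.
    threshold: hypothetical cut-off; a real study would calibrate it.
    """
    syn = l2_normalise(np.asarray(synthetic_emb, dtype=float))
    real = l2_normalise(np.asarray(real_emb, dtype=float))

    # Cosine similarity between every synthetic and every real embedding.
    sims = syn @ real.T

    # For each synthetic image, keep only its closest real neighbour.
    best_real = sims.argmax(axis=1)
    best_score = sims.max(axis=1)

    flagged = np.flatnonzero(best_score >= threshold)
    return [(int(i), int(best_real[i]), float(best_score[i])) for i in flagged]


if __name__ == "__main__":
    # Toy 2-D vectors standing in for real face embeddings.
    real = [[1.0, 0.0], [0.0, 1.0]]
    synthetic = [[2.0, 0.01], [1.0, 1.0]]
    for syn_idx, real_idx, score in find_possible_leaks(synthetic, real):
        print(f"synthetic {syn_idx} resembles real {real_idx} (cosine {score:.3f})")
```

In this toy example only the first synthetic vector is flagged, because it points in almost the same direction as the first real vector. A full comparison of two large datasets would need an approximate nearest-neighbour index rather than a dense similarity matrix.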
