Medium

Image Credit: Medium

Retrieval-Augmented Generation and the Transformation of Enterprise Workflows in 2025

- Retrieval-Augmented Generation (RAG) has become crucial for enterprise AI workflows because it improves accuracy by grounding responses in proprietary knowledge.

- LLMs such as GPT-4 rely on static training data, so they often lack current, industry-specific knowledge and can give outdated or incorrect responses.

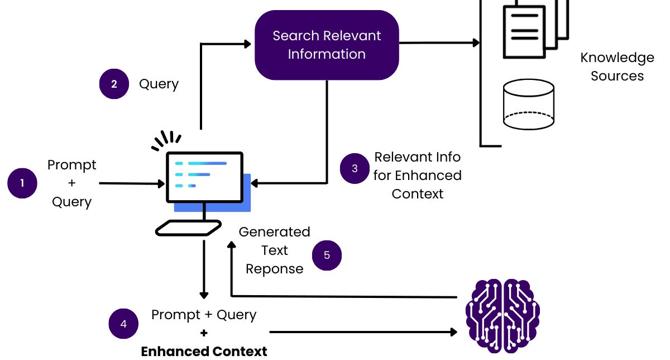

- RAG addresses this by adding a retrieval layer that searches relevant documents and supplies up-to-date information to the model before it responds.

- RAG enables scalable AI systems by combining the power of LLMs with internal data, offering a cost-effective and reliable solution.

- The RAG pipeline involves 5 core steps that enhance accuracy and reliability in AI outputs.

- Use cases for RAG include customer support systems, legal and compliance research, and financial reporting.

- Challenges of RAG include latency, data security, and retrieval quality: if the retrieved documents are not relevant, the answers will be inaccurate.

- RAG sets a new standard for corporate AI applications, leveraging internal data for context-aware and accurate outputs.

- Businesses that use RAG can build systems that provide better insights, develop faster, cost less, and stay aligned with evolving business needs.

- RAG-based systems will play a crucial role in the future of AI in businesses, from customer-facing applications to internal decision-making tools.

- Investing in RAG now positions companies to leverage AI effectively and at scale, shaping the future of business AI.

- RAG represents a significant advancement in enhancing AI capabilities for business applications.

- RAG lets AI systems draw on internal knowledge, which improves their performance and keeps them aligned with business requirements.

- Integrating RAG into enterprise workflows yields more efficient and accurate responses based on up-to-date information.

- RAG's ability to provide context-aware, accurate outputs paves the way for smarter business AI applications.

- Businesses investing in RAG now will have a competitive edge in implementing AI solutions that are secure, efficient, and scalable.
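
The retrieval layer described in the bullets above can be illustrated with a minimal sketch. The summary does not enumerate the five pipeline steps, so this shows only a generic retrieve-then-prompt flow and is not the article's implementation. The document store, the `retrieve` and `build_prompt` helpers, the bag-of-words cosine scoring, and the `min_score` threshold are all assumptions made for illustration; a production system would more likely use embeddings and a vector database.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory "internal knowledge base" (illustrative data only).
DOCUMENTS = {
    "refund-policy": "Refunds are issued within 14 days of purchase for unused licenses.",
    "sla": "Support tickets receive a first response within 4 business hours.",
    "expenses": "Travel expenses above 500 dollars require manager approval.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, k=2, min_score=0.1):
    """Return up to k document ids, best match first.

    Documents scoring below min_score are dropped, so irrelevant text
    is never passed to the model (the retrieval-quality challenge).
    """
    q = Counter(tokenize(query))
    scored = [
        (cosine(q, Counter(tokenize(text))), doc_id)
        for doc_id, text in DOCUMENTS.items()
    ]
    scored.sort(reverse=True)
    return [doc_id for score, doc_id in scored[:k] if score >= min_score]


def build_prompt(query):
    """Combine the retrieved context and the question into one LLM prompt."""
    doc_ids = retrieve(query)
    context = "\n".join(f"[{d}] {DOCUMENTS[d]}" for d in doc_ids)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("How fast are refunds issued?"))
```

The prompt returned by `build_prompt` would then be sent to whichever LLM the system uses; that call is left out because it depends on the provider.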
