Hongkiat

Image Credit: Hongkiat

Running Large Language Models (LLMs) Locally with LM Studio

- Running large language models (LLMs) locally with tools like LM Studio or Ollama offers advantages such as privacy, cost-efficiency, and offline accessibility.

- Because LLMs are resource-intensive, tuning the setup matters: the choice of model and settings decides whether a model runs smoothly on your hardware or not at all.

- Model selection is the biggest lever for performance. Weigh the parameter count, the model's characteristics, and your available system resources (RAM and VRAM).

- LM Studio simplifies model selection by highlighting the models that best fit your system's resources.

- Models such as Llama 3.2, Mistral, and Qwen 2.5 Coder have different architectures and capabilities and suit different tasks; Qwen 2.5 Coder, for example, is tuned for programming.

- Quantization works much like compressing data for storage: it stores model weights at lower bit widths (for example, 4 or 8 bits instead of 16) to save memory.

- LM Studio offers quantized builds of models such as Llama 3.3 and Hermes 3, which use less memory with little loss in output quality.

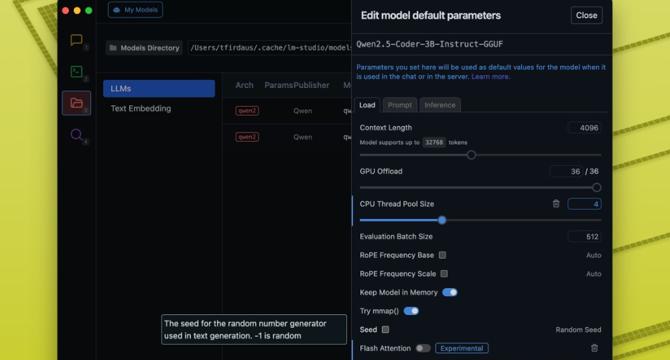
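Model selection and quantization both come down to memory arithmetic. The Python sketch below assumes that weight storage dominates memory use. `quantize_symmetric` shows what storing weights "at lower bit values" means, using one shared scale per tensor, which is a simplification of the block-wise schemes real quantized model files use. `largest_fitting_model` is a rough stand-in for a "does it fit?" check and is not LM Studio's actual logic. All function names, the 2 GB headroom, and the catalog entries are hypothetical.

```python
# Rough memory planning for quantized model weights.
# Assumptions (not from the article): weights dominate memory use, and the
# catalog below is hypothetical; real model files carry some extra overhead.

def quantize_symmetric(values, bits=8):
    """Map floats to signed integers sharing one scale (per-tensor symmetric quantization)."""
    qmax = 2 ** (bits - 1) - 1  # e.g. 127 for 8 bits, 7 for 4 bits
    max_abs = max((abs(v) for v in values), default=0.0) or 1.0
    scale = max_abs / qmax
    return [round(v / scale) for v in values], scale

def dequantize(q, scale):
    """Recover approximate floats; each value is off by at most scale / 2."""
    return [x * scale for x in q]

def weight_memory_gb(params_billions, bits_per_weight):
    """Weights only: parameters x bits / 8 bytes, expressed in GB (1e9 bytes)."""
    return params_billions * bits_per_weight / 8

def largest_fitting_model(candidates, available_gb, headroom_gb=2.0):
    """Pick the candidate with the most parameters (then the most bits) that
    still leaves headroom_gb free for the context cache, runtime, and OS."""
    fitting = [c for c in candidates
               if weight_memory_gb(c["params_b"], c["bits"]) + headroom_gb <= available_gb]
    return max(fitting, key=lambda c: (c["params_b"], c["bits"]), default=None)

if __name__ == "__main__":
    weights = [0.12, -0.5, 0.33, 0.9]
    q, scale = quantize_symmetric(weights, bits=4)
    print(q, [round(x, 3) for x in dequantize(q, scale)])

    catalog = [  # hypothetical entries, not real model names
        {"name": "model-3b-q4", "params_b": 3, "bits": 4},
        {"name": "model-8b-q4", "params_b": 8, "bits": 4},
        {"name": "model-8b-q8", "params_b": 8, "bits": 8},
        {"name": "model-70b-q4", "params_b": 70, "bits": 4},
    ]
    for gb in (8, 16):
        pick = largest_fitting_model(catalog, available_gb=gb)
        print(gb, "GB ->", pick["name"] if pick else "nothing fits")
```

An 8-billion-parameter model needs about 16 GB for its weights at 16 bits but only about 4 GB at 4 bits. So on an 8 GB machine, the sketch picks the 8B 4-bit entry. With 16 GB, it moves up to the 8-bit version (about 8 GB of weights).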

- Performance tweaks in LM Studio include adjusting the context length, GPU offload (running model layers on the GPU to accelerate inference), and tuning the CPU thread pool size.

- Settings such as the KV cache quantization type and a limit on response length trade speed and memory use against output quality.

- With a carefully chosen model and tuned settings, large language models can run efficiently and effectively on local hardware.
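Context length and cache quantization matter because the KV cache grows linearly with the number of tokens kept in context. The rough Python estimate below makes two assumptions. First, it assumes a Llama-style model with grouped-query attention; the 32-layer, 8-KV-head, 128-dimension shape is illustrative. Second, it uses llama.cpp-style block sizes for the Q8_0 and Q4_0 cache types. `kv_cache_gib` and `max_response_tokens` are hypothetical helpers, not LM Studio APIs.

```python
# Approximate KV-cache size as a function of context length and cache type.
# Assumptions (not from the article): a Llama-style model with grouped-query
# attention, and llama.cpp-style block sizes for the quantized cache types.

# Bytes per cached element: F16 = 2; Q8_0 and Q4_0 pack 32 values plus a
# 2-byte scale into 34 and 18 bytes respectively.
BYTES_PER_ELEMENT = {"f16": 2.0, "q8_0": 34 / 32, "q4_0": 18 / 32}

def kv_cache_gib(n_layers, n_kv_heads, head_dim, context_length, cache_type="f16"):
    """Keys and values (factor 2) for every layer, KV head, and token in the context."""
    elements = 2 * n_layers * n_kv_heads * head_dim * context_length
    return elements * BYTES_PER_ELEMENT[cache_type] / 2**30

def max_response_tokens(context_length, prompt_tokens, requested):
    """Hypothetical helper: cap the response so prompt + response fit in the context window."""
    return max(0, min(requested, context_length - prompt_tokens))

if __name__ == "__main__":
    shape = dict(n_layers=32, n_kv_heads=8, head_dim=128)  # illustrative 8B-class shape
    for ctx in (4096, 8192, 32768):
        for cache in ("f16", "q8_0"):
            gib = kv_cache_gib(context_length=ctx, cache_type=cache, **shape)
            print(f"ctx={ctx:>6} cache={cache:<5} ~{gib:.2f} GiB")
    print(max_response_tokens(8192, prompt_tokens=7000, requested=2048))
```

With this illustrative shape, an 8,192-token context needs about 1 GiB of F16 cache and roughly half that with Q8_0. Quadrupling the context quadruples the cache. Trimming the context length or quantizing the cache can therefore free memory for other uses, such as offloading more layers to the GPU.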
