Unite

Image Credit: Unite

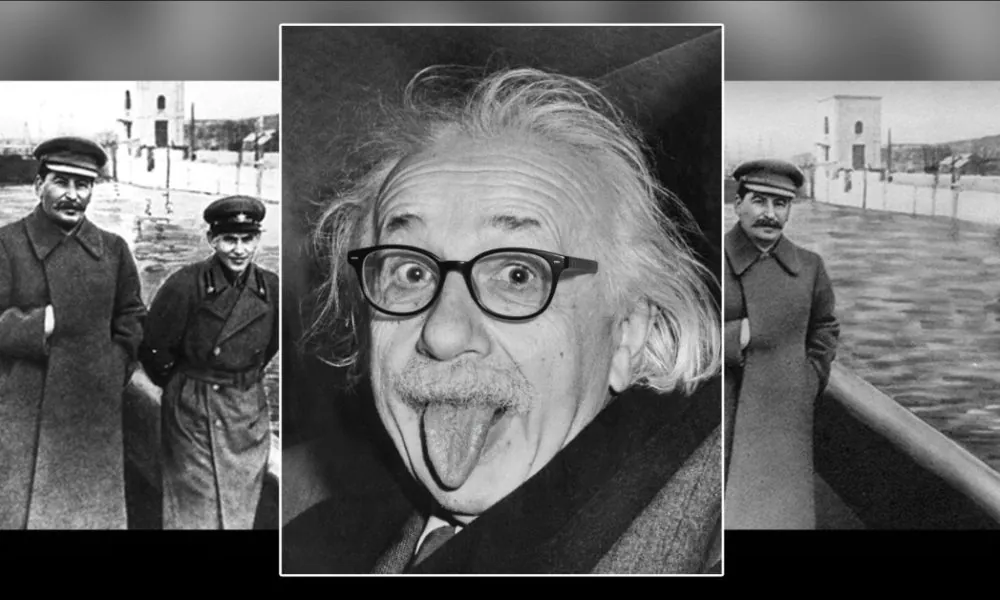

Smaller Deepfakes May Be the Bigger Threat

- Conversational AI tools such as ChatGPT and Google Gemini are now being used to create subtle deepfakes that edit images without swapping faces.

- These subtle alterations can trick both AI detectors and humans, making it harder to distinguish real from fake online content.

- People often associate deepfakes with non-consensual AI porn and political manipulation, but subtle deepfakes can have a more enduring impact.

- Researchers created the MultiFakeVerse dataset to address subtle deepfakes: its images alter context without changing the subjects' core identities, which challenges detection systems.

- Human observers and current detection models struggle to identify these subtle manipulations, showing limitations in detecting narrative-driven edits.

- The MultiFakeVerse dataset was generated by using vision-language models to subtly alter images and to assess how the edits change perception of emotion, identity, and narrative.

- Detection models such as CnnSpot and SIDA struggled to identify the subtle manipulations, though their performance improved after fine-tuning on the MultiFakeVerse dataset.

- Gemini-2.0-Flash was used to assess how the manipulations affect viewer perception, revealing shifts in perceived emotion, identity, and narrative, as well as in ethical concerns.

- The study shows how difficult subtle deepfakes are to detect and emphasizes the potential long-term impact of quieter 'narrative edits'.

- As AI tools play a role in generating such content, the study suggests a need for improved detection models capable of identifying small, targeted changes.
