IEEE Spectrum

Image Credit: IEEE Spectrum

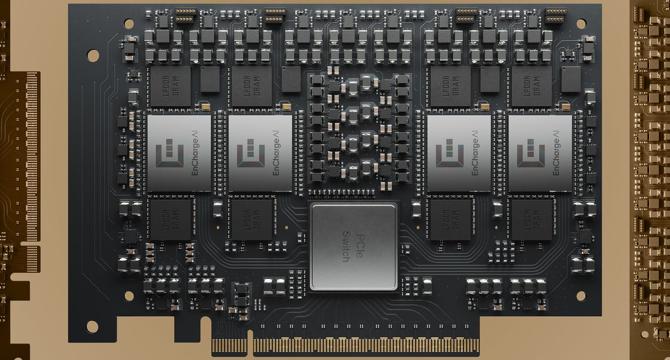

Startup’s Analog AI Promises Power for PCs

- Analog AI, which computes with analog physical phenomena rather than digital logic, has long interested chip architects, but it has struggled to deliver on its promise of energy-efficient machine learning.

- EnCharge AI, a startup founded by Naveen Verma from Princeton University, unveiled the EN100 chip based on a novel architecture that promises to significantly improve AI performance per watt.

- The EN100 sums charge instead of current, an approach meant to overcome the noise problems that have hindered other analog AI schemes.

- By performing multiplication with capacitors rather than with memory cells such as RRAM, EnCharge's design reduces noise while keeping machine learning calculations efficient.

- The chip relies on switched-capacitor operation, an old concept that EnCharge has repurposed for in-memory computing tailored to AI workloads.

- EnCharge says it has demonstrated the programmability and scalability of its technology, and it has attracted early-access developers as well as funding from companies such as Samsung Venture and Foxconn.

- EnCharge faces competition from established players such as Nvidia, which has announced plans for PC and workstation products, and from other startups such as D-Matrix, Axelera, and Sagence.

- EnCharge aims to let advanced, secure, and personalized AI run locally, with less dependence on cloud infrastructure.

- The startup is targeting AI workloads in PCs and workstations with the EN100, with a focus on energy efficiency and longer battery life.

- Because capacitors carry out the multiplication, the design addresses the noise problems of earlier analog AI and could make machine learning more effective and energy-efficient.

- Overall, EnCharge's unique analog AI chip architecture represents a significant advancement in the quest for energy-efficient computing for AI applications.
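
To make the charge-summing idea in the points above concrete, here is a minimal sketch of an idealized switched-capacitor dot product. It is an illustration under stated assumptions, not EnCharge's circuit: the function names, the 1-bit inputs and weights, the equal capacitor values, and the reference voltage are all hypothetical, and noise, mismatch, and readout are ignored. Each capacitor is charged only when both its input bit and its weight bit are 1 (the multiply), and shorting the capacitors together shares the total charge so that the output voltage is proportional to the sum (the accumulate).

```python
def charge_domain_dot(inputs, weights, v_ref=1.0, cap=1.0):
    """Idealized charge-sharing dot product of two equal-length 0/1 vectors.

    Hypothetical model: one capacitor of value `cap` per element,
    charged to `v_ref` only when input bit AND weight bit are both 1.
    Returns the shared output voltage after all capacitors are connected.
    """
    if len(inputs) != len(weights) or not inputs:
        raise ValueError("inputs and weights must be non-empty and equal length")

    # Multiply step: charge stored on each capacitor (Q = C * V).
    charges = [cap * v_ref * (x & w) for x, w in zip(inputs, weights)]

    # Accumulate step: charge is conserved and spreads over the
    # total capacitance, so V_out = sum(Q) / (N * C).
    total_cap = cap * len(charges)
    return sum(charges) / total_cap


def decode_count(v_out, n, v_ref=1.0):
    """Convert the shared voltage back to an integer dot product."""
    return round(v_out / v_ref * n)


x = [1, 0, 1, 1]  # example input bits (assumed)
w = [1, 1, 0, 1]  # example weight bits (assumed)
v = charge_domain_dot(x, w)
print(v, decode_count(v, len(x)))
```

In this idealized model the result depends only on the ratio of charged capacitors to total capacitors, which hints at why a capacitor-based scheme can be less sensitive to device variation than one that sums currents through individual memory cells.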
