MacStories

Image Credit: MacStories

Testing DeepSeek R1-0528 on the M3 Ultra Mac Studio and Installing Local GGUF Models with Ollama on macOS

- DeepSeek released an updated version of its R1 reasoning model (version 0528) with improved performance, fewer hallucinations, and support for function calling and JSON output.

- Early tests from Artificial Analysis showed higher benchmark scores, ranking the model behind only OpenAI’s o3 and o4-mini-high on its Intelligence Index.

- The model is available through the official DeepSeek API, and its open weights can be downloaded from Hugging Face.

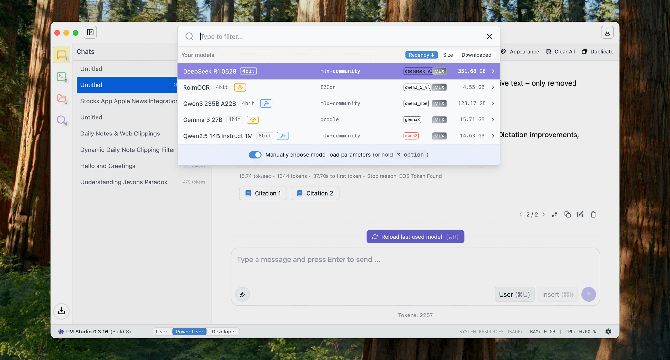

- The article recounts the author's experience downloading quantized versions of the model to their M3 Ultra Mac Studio.

- The author tested a 4-bit quant of the full 685B-parameter model in LM Studio, which required a smaller context window to stay within available memory.

- The article details how to install local GGUF models in Ollama on macOS, a process that involves merging a model's multiple GGUF weight files into a single file.

- Because its size made the full 8-bit version of R1-0528 difficult to load in Ollama, the author tried the smaller 4-bit version, which loaded successfully.

- The author documented the steps of downloading, merging, and installing the GGUF models for local use.

- Despite some hallucinations, the offline model gave accurate information about MacStories, illustrating both R1's capabilities and its limitations.

- Installed locally, the smaller 4-bit version performed impressively on the author's M3 Ultra Mac Studio.
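The memory constraints described above (a reduced context window for the 4-bit quant and trouble loading the 8-bit version) follow from simple arithmetic: raw weight size is roughly parameters × bits per weight ÷ 8 bytes. The sketch below applies that formula to the 685B parameter count. It assumes nominal 4 and 8 bits per weight and ignores the KV cache, runtime buffers, and macOS overhead. Real GGUF quants usually average slightly more bits per weight, so treat the results as lower bounds rather than measurements.

```python
# Back-of-the-envelope weight-memory estimate for a quantized model.
# Assumptions (ours, not the article's): nominal bits per weight, and no
# allowance for KV cache, runtime buffers, or operating-system overhead.

PARAMS = 685e9  # parameter count cited for DeepSeek R1-0528


def weight_gb(params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights in gigabytes (1 GB = 10**9 bytes)."""
    bytes_total = params * bits_per_weight / 8
    return bytes_total / 1e9


def fits(params: float, bits_per_weight: float, memory_gb: float) -> bool:
    """True if the raw weights alone are smaller than memory_gb."""
    return weight_gb(params, bits_per_weight) < memory_gb


if __name__ == "__main__":
    for label, bits in [("4-bit", 4.0), ("8-bit", 8.0)]:
        print(f"{label}: ~{weight_gb(PARAMS, bits):.1f} GB of weights")
```

This gives about 342.5 GB for 4-bit and 685 GB for 8-bit. The 8-bit figure alone exceeds 512 GB, the largest unified-memory configuration Apple offers for the M3 Ultra Mac Studio. The 4-bit weights fit, but they leave comparatively little headroom for the KV cache, which grows with context length. That is consistent with needing a smaller context window.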
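Merging the split GGUF download into one file can be done with llama.cpp's `llama-gguf-split --merge` tool (named `gguf-split` in older builds). The sketch below assumes that tool and its `name-00001-of-0000N.gguf` shard naming. It checks that every shard in the set is present, then builds the merge command. The tool is given only the first shard and, as we understand it, locates the remaining shards itself. The shard names used here are hypothetical, and the article's own steps may differ.

```python
import re
from pathlib import Path

# Matches llama.cpp's split-file naming, e.g. "model-00001-of-00003.gguf".
SHARD_RE = re.compile(r"^(?P<stem>.+)-(?P<idx>\d{5})-of-(?P<total>\d{5})\.gguf$")


def merge_command(shards: list, output: str) -> list:
    """Validate a complete shard set and return the llama-gguf-split merge command."""
    parsed = []
    for name in shards:
        m = SHARD_RE.match(Path(name).name)
        if m is None:
            raise ValueError(f"not a split GGUF file: {name}")
        parsed.append((m["stem"], int(m["idx"]), int(m["total"]), str(name)))

    # All shards must belong to one split set with one declared total.
    stems = {p[0] for p in parsed}
    totals = {p[2] for p in parsed}
    if len(stems) != 1 or len(totals) != 1:
        raise ValueError("shards come from more than one split set")

    # Every index from 1 to total must appear exactly once.
    total = totals.pop()
    indices = sorted(p[1] for p in parsed)
    if indices != list(range(1, total + 1)):
        raise ValueError(f"expected shards 1..{total}, got {indices}")

    first = min(parsed, key=lambda p: p[1])[3]
    # Only the first shard is passed; the tool reads the split metadata for the rest.
    return ["llama-gguf-split", "--merge", first, output]


if __name__ == "__main__":
    # Hypothetical shard names, for illustration only.
    shards = ["R1-0528-Q4-00002-of-00002.gguf", "R1-0528-Q4-00001-of-00002.gguf"]
    print(" ".join(merge_command(shards, "R1-0528-Q4.gguf")))
    # To actually merge, pass the list to subprocess.run(cmd, check=True).
```

Validating the shard set before merging is a design choice, not a requirement of the tool. It catches an interrupted download before a long merge starts rather than after.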
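Once a single GGUF file exists, Ollama can import it through a Modelfile whose `FROM` line points at that file, followed by `ollama create <name> -f Modelfile` and then `ollama run <name>`. The helpers below sketch that step. The model name and file path are hypothetical. The optional `PARAMETER num_ctx` line is a standard Modelfile parameter, but its use here to cap the context window is our own assumption about how to limit memory use, not a setting reported by the author.

```python
from pathlib import Path
from typing import Optional


def modelfile_text(gguf_path: str, num_ctx: Optional[int] = None) -> str:
    """Build a minimal Ollama Modelfile pointing at a local GGUF file."""
    lines = [f"FROM {gguf_path}"]
    if num_ctx is not None:
        # Optional: cap the context window to reduce KV-cache memory.
        lines.append(f"PARAMETER num_ctx {num_ctx}")
    return "\n".join(lines) + "\n"


def write_modelfile(directory: str, gguf_path: str, num_ctx: Optional[int] = None) -> Path:
    """Write the Modelfile into directory and return its path."""
    path = Path(directory) / "Modelfile"
    path.write_text(modelfile_text(gguf_path, num_ctx))
    return path


def create_command(model_name: str, modelfile: Path) -> list:
    """Command that registers the model with Ollama under model_name."""
    return ["ollama", "create", model_name, "-f", str(modelfile)]


if __name__ == "__main__":
    # Hypothetical file and model names, for illustration only.
    print(modelfile_text("./R1-0528-Q4.gguf", num_ctx=8192), end="")
    print(" ".join(create_command("r1-0528-q4", Path("Modelfile"))))
```

The text-building logic is kept separate from file writing so that the Modelfile contents can be inspected before anything touches disk or Ollama.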
