UX Design

Image Credit: UX Design

The AI trust dilemma: balancing innovation with user safety

- As AI technology advances, AI products must prioritize safety and trust to address users' concerns about the technology.

- Building AI products that prioritize data privacy and user trust is essential to bridge the existing trust gap.

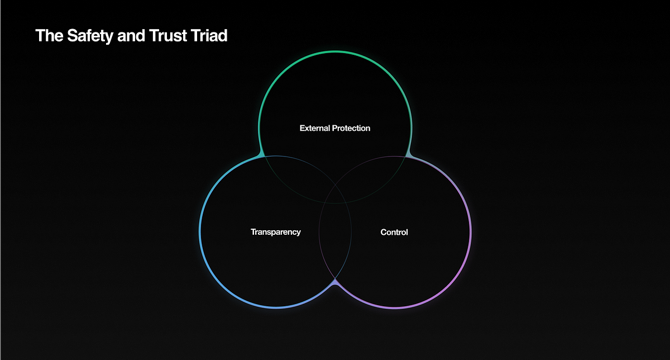

- Key principles for ensuring safety in AI products include external protections, transparency, and giving users control over their data.

- Legal regulations, privacy laws, and emergency handling procedures are vital considerations in building responsible AI products.

- Preventing bot abuse, conducting safety audits, and having backup AI models in place are essential for maintaining user trust and satisfaction.

- Transparency, clear communication, and open dialogue are crucial for establishing trust in AI systems and for keeping the boundary between AI and human interactions clear.

- Offering users control over their data, including letting them decide what information the AI retains and how it is used, is key to building trust and respecting privacy.

- Personalization in AI should be transparent, user-controlled, context-aware, and based on user consent, so that users do not feel their privacy has been invaded.

- Allowing users to store conversations locally, offering app-specific password protection, and being transparent about data handling practices all help establish trust and show respect for privacy in AI interactions.

- Building trust in AI products requires tailored approaches that consider market dynamics, regulations, and user preferences while prioritizing safety, transparency, and user control over data.

- By designing AI systems that prioritize user safety, privacy, and control, we can create technologies that enhance the human experience while earning trust and respect from users.
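The data-control principles above (users decide what the AI retains and how it is used, and conversations can stay on the user's device) could be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the article's method: the `UserMemory` and `MemoryItem` classes, their methods, and the local JSON file are hypothetical names chosen for the example.

```python
import json
from dataclasses import dataclass, field, asdict
from pathlib import Path


@dataclass
class MemoryItem:
    # One piece of information the assistant may retain (hypothetical schema).
    key: str
    value: str
    purpose: str  # how the user agreed it may be used, e.g. "personalization"


@dataclass
class UserMemory:
    """Hypothetical user-controlled memory: nothing is kept without explicit consent."""
    items: dict[str, MemoryItem] = field(default_factory=dict)

    def remember(self, key: str, value: str, purpose: str, consent: bool) -> bool:
        # Store the item only if the user opted in; report whether it was kept.
        if not consent:
            return False
        self.items[key] = MemoryItem(key, value, purpose)
        return True

    def forget(self, key: str) -> bool:
        # Let the user delete a single item; returns True if it existed.
        return self.items.pop(key, None) is not None

    def show(self) -> list[dict]:
        # Transparency: list everything retained and the purpose it was allowed for.
        return [asdict(item) for item in self.items.values()]

    def save_locally(self, path: str) -> None:
        # Persist to a file on the user's own device instead of a remote server.
        Path(path).write_text(json.dumps(self.show(), indent=2), encoding="utf-8")

    @classmethod
    def load_locally(cls, path: str) -> "UserMemory":
        # Rebuild the memory from the local file written by save_locally.
        memory = cls()
        for raw in json.loads(Path(path).read_text(encoding="utf-8")):
            item = MemoryItem(**raw)
            memory.items[item.key] = item
        return memory
```

For example, after `memory.remember("name", "Ada", "personalization", consent=True)` and `memory.remember("location", "Berlin", "recommendations", consent=False)`, `memory.show()` returns only `[{'key': 'name', 'value': 'Ada', 'purpose': 'personalization'}]`, and `memory.forget("name")` removes it again. Requiring a consent flag on every write, rather than a single global opt-in, is one way to make retention decisions visible and reversible item by item.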
