UX Design

Image Credit: UX Design

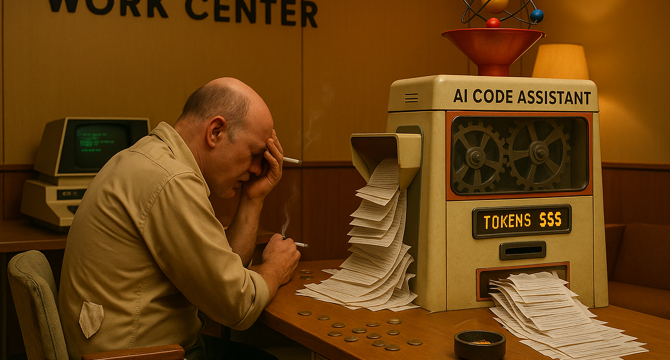

The perverse incentives of Vibe Coding

- The article discusses the addictive nature of AI coding assistants such as Claude Code, attributing it to variable-ratio reinforcement and to the advantage these tools gain from effort discounting.

- It highlights how AI systems tend to produce verbose, over-engineered code, which costs users more and often fails on complex tasks that require deep architectural thinking.

- The economic model of AI coding assistants creates perverse incentives that favor verbose code, particularly because usage is priced by token count.

- The article questions whether the way AI systems are monetized aligns with how well they serve users' needs, since providers have less incentive to optimize for elegant, minimal solutions.

- The article points out that demanding concise responses from AI models can compromise factual reliability, suggesting some alignment between token economics and output quality.

- Strategies to counteract verbose code generation include forcing a planning step before implementation, implementing explicit permission protocols, using version control for experimentation, and opting for cheaper AI models.

- Proposed approaches for better alignment include evaluating LLM coding agents on code-quality metrics, offering pricing models that reward efficiency, and incorporating feedback mechanisms that promote concise solutions.

- The article emphasizes the importance of aligning economic incentives in AI development with the value developers place on clean, maintainable, and elegant code.

- It concludes with the irony that AI systems such as Claude Code can articulate arguments against their own verbosity. This suggests that the perverse incentives lie in human-designed revenue models rather than in the AI models themselves.
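
The following minimal Python sketch makes the token-pricing argument concrete by comparing a concise completion with a verbose one. Every figure in it is a hypothetical placeholder, not a real vendor rate or data from the article:

- the per-million-token prices,
- the provider's serving cost (assumed to be half the list price),
- the flat per-task fee,
- the token counts.

The flat fee stands in for one possible "pricing model that rewards efficiency." The article does not specify this scheme.

```python
from dataclasses import dataclass

# HYPOTHETICAL placeholder values for illustration only; these are not the
# published rates of any real provider.
PRICE_IN_PER_M = 3.00      # user price per million input tokens (USD)
PRICE_OUT_PER_M = 15.00    # user price per million output tokens (USD)
SERVE_COST_FRACTION = 0.5  # assumed provider cost as a share of list price
FLAT_TASK_PRICE = 0.05     # assumed flat fee per completed task (USD)


@dataclass
class Completion:
    label: str
    input_tokens: int
    output_tokens: int


def token_priced_bill(c: Completion) -> float:
    """User's bill when every input and output token is charged."""
    return (c.input_tokens * PRICE_IN_PER_M
            + c.output_tokens * PRICE_OUT_PER_M) / 1_000_000


def provider_margin(c: Completion, per_token: bool) -> float:
    """Provider profit for one completion under per-token or flat pricing."""
    serve_cost = token_priced_bill(c) * SERVE_COST_FRACTION
    revenue = token_priced_bill(c) if per_token else FLAT_TASK_PRICE
    return revenue - serve_cost


if __name__ == "__main__":
    # Same prompt size; the verbose answer emits five times as many tokens.
    for c in (Completion("concise", 2_000, 400),
              Completion("verbose", 2_000, 2_000)):
        print(f"{c.label}: user pays ${token_priced_bill(c):.4f} per-token; "
              f"provider margin ${provider_margin(c, per_token=True):.4f} "
              f"per-token vs ${provider_margin(c, per_token=False):.4f} flat")
```

Under per-token billing, the verbose completion costs the user three times as much and earns the provider three times the margin. Under the hypothetical flat fee, the provider earns more on the concise completion, so its incentive points toward brevity.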