Hackernoon

Image Credit: Hackernoon

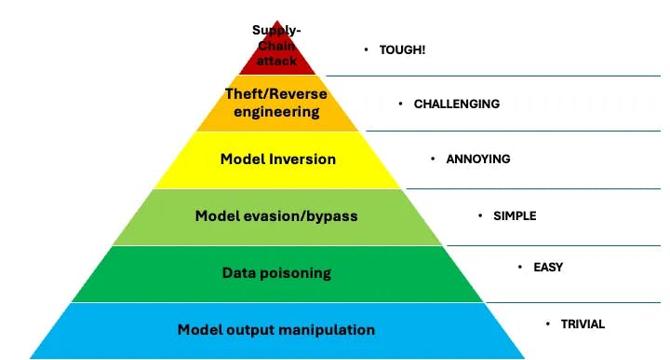

The Security Pyramid of pAIn

- The Security Pyramid of pAIn offers a framework for assessing AI-driven security risks and prioritizing mitigation strategies.

- The pyramid's layers escalate in complexity and severity, from AI model output manipulation at the base to AI supply chain attacks at the top.

- AI model output manipulation attacks are the easiest to address, while AI supply chain attacks are the most challenging and damaging threat.

- Data poisoning, in which attackers tamper with the data a model is trained on, is a more significant threat than output manipulation.

- Model evasion or bypass occurs when attackers craft inputs that slip past AI-powered security systems undetected.

- Model inversion attacks are more sophisticated: they extract sensitive information from an AI model, such as details of its training data, and are difficult to detect and mitigate.

- Model theft or reverse engineering gives attackers insight into a model's potential vulnerabilities.

- AI supply chain attacks, which target third-party models, tools, and libraries, can compromise entire AI ecosystems.

- Mitigating these risks requires securing the entire AI development pipeline, from sourcing third-party tools to auditing open-source components.

- Applying the Security Pyramid of pAIn can help teams build a comprehensive approach to AI security.

- AI-driven systems and processes are becoming increasingly common across industries, making it crucial to assess the security risks unique to them.

- By understanding and addressing the risks and vulnerabilities specific to AI systems, security teams can better protect the integrity, confidentiality, and availability of AI assets.
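Model evasion is easiest to see on a toy detector. The sketch below is not taken from the article: it assumes a hypothetical linear classifier with made-up weights (`WEIGHTS`, `BIAS`) and applies a fast-gradient-sign-style step, one well-known evasion technique, to push a flagged input below the detection threshold while changing each feature by at most `epsilon`.

```python
# Hypothetical linear detector: the weights and bias are made up for illustration.
WEIGHTS = [0.8, -0.3, 1.2]
BIAS = -0.5


def score(x: list[float]) -> float:
    """Detector score w·x + b; a positive score means 'flag as malicious'."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def is_flagged(x: list[float]) -> bool:
    return score(x) > 0


def sign(v: float) -> int:
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def evade(x: list[float], epsilon: float) -> list[float]:
    """Shift every feature by at most epsilon to lower the detector score.

    For a linear score the gradient with respect to x is simply WEIGHTS, so
    stepping each feature by -epsilon * sign(w) (a fast-gradient-sign step)
    lowers the score by epsilon * sum(|w|).
    """
    return [xi - epsilon * sign(w) for xi, w in zip(x, WEIGHTS)]


if __name__ == "__main__":
    sample = [1.0, 0.0, 0.5]
    adversarial = evade(sample, epsilon=0.5)
    print(is_flagged(sample), is_flagged(adversarial))  # True False
```

Real detectors are nonlinear and attackers often have only query access, but the principle carries over: small, targeted changes to an input can flip the model's verdict.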

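One common control for securing the AI pipeline against supply chain tampering is to pin the SHA-256 digest of each third-party model file when it is first vetted and refuse to load any copy that does not match. The minimal sketch below assumes that workflow; the function names `sha256_of` and `verify_artifact` and the file name `model.bin` are hypothetical, not from the article.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> None:
    """Raise ValueError unless the file matches its pinned SHA-256 digest."""
    actual = sha256_of(path)
    if actual != expected_sha256.strip().lower():
        raise ValueError(
            f"{path.name}: SHA-256 mismatch "
            f"(expected {expected_sha256}, got {actual})"
        )


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        model = Path(tmp) / "model.bin"  # hypothetical third-party model file
        model.write_bytes(b"vetted weights")
        pinned = sha256_of(model)  # recorded once, when the file is vetted

        verify_artifact(model, pinned)  # passes: file unchanged

        model.write_bytes(b"tampered weights")  # simulate a swapped artifact
        try:
            verify_artifact(model, pinned)
        except ValueError as err:
            print("refusing to load:", err)
```

Hash pinning only detects changes made after vetting; a component that was already malicious when its digest was recorded still needs auditing.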