Dev

Image Credit: Dev

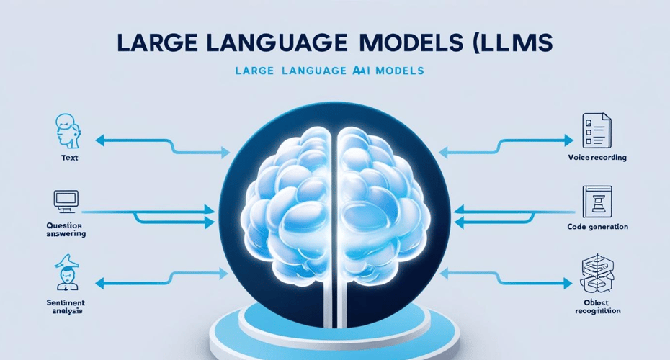

Understanding Large Language Models (LLMs): Types and How They Work

- Large Language Models (LLMs) play a key role in modern AI applications, supporting chatbots, content generation tools, and code assistants.

- LLMs are advanced machine learning models trained on extensive text data, enabling tasks like text generation, question answering, and code creation.

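- LLMs come in three main architectural types, each suited to different tasks: decoder-only models (e.g., GPT) for text generation, encoder-only models (e.g., BERT) for understanding tasks such as classification, and encoder-decoder models (e.g., T5) for sequence-to-sequence tasks such as translation and summarization.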

- Training LLMs involves techniques like self-supervised pretraining on unlabeled text (often described as unsupervised learning), supervised fine-tuning, and reinforcement learning from human feedback (RLHF).

- Under the hood, LLM training relies on data preprocessing (such as tokenization), the transformer architecture, and fine-tuning on labeled datasets.

- Depending on their architecture and training, different LLMs excel as conversational models, code generators, multimodal models, or domain-specific tools.

- Real-world applications of LLMs include customer support, content creation, code assistance, healthcare analysis, financial insights, and personalized education.

- LLMs are available as open-source models like LLaMA 2 and Falcon, as well as proprietary models like GPT-4 and Gemini.

- In conclusion, LLMs are transforming AI interactions by offering diverse capabilities through various architectures and training approaches.
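To make the architectural distinction in the list above more concrete, here is a minimal sketch of the attention masks that typically separate decoder-only, encoder-only, and encoder-decoder models. It assumes the common transformer convention that a mask entry of 1 lets position i attend to position j. The function names are hypothetical helpers for illustration, not part of any library.

```python
def attention_mask(seq_len: int, causal: bool) -> list[list[int]]:
    """Return a seq_len x seq_len self-attention mask.

    mask[i][j] == 1 means position i may attend to position j.
    causal=True gives a decoder-style mask (no looking at future tokens);
    causal=False gives an encoder-style (bidirectional) mask.
    """
    return [
        [1 if (not causal or j <= i) else 0 for j in range(seq_len)]
        for i in range(seq_len)
    ]


def cross_attention_mask(tgt_len: int, src_len: int) -> list[list[int]]:
    """Hypothetical helper for encoder-decoder models: every decoder (target)
    position may attend to every encoder (source) position."""
    return [[1] * src_len for _ in range(tgt_len)]


# Decoder-only (GPT-style): each token sees only itself and earlier tokens.
decoder_mask = attention_mask(4, causal=True)
# Encoder-only (BERT-style): every token sees the whole sequence.
encoder_mask = attention_mask(4, causal=False)
# Encoder-decoder (T5-style): causal decoder mask plus full cross-attention.
cross_mask = cross_attention_mask(2, 3)

for row in decoder_mask:
    print(row)
```

In a real model these masks are applied inside self-attention, usually by setting blocked scores to negative infinity before the softmax. The causal mask is what keeps a decoder-only model from seeing future tokens, matching how it generates text one token at a time.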
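The pretraining stage listed among the training techniques above is usually framed as next-token prediction: the text itself supplies the targets, so no human labels are needed. Below is a minimal sketch of that objective as average cross-entropy. The probability values are made-up placeholders standing in for a model's output, and `next_token_loss` is a hypothetical helper, not any specific model's training code.

```python
import math


def next_token_loss(token_ids, predicted_probs):
    """Average cross-entropy for next-token prediction.

    token_ids: list of int token IDs for one training sequence.
    predicted_probs: predicted_probs[t] is a probability distribution
        (list of floats over the vocabulary) produced at position t.
    The target at position t is simply token_ids[t + 1], so the text
    supervises itself.
    """
    losses = []
    for t in range(len(token_ids) - 1):
        target = token_ids[t + 1]
        losses.append(-math.log(predicted_probs[t][target]))
    return sum(losses) / len(losses)


# Toy vocabulary of 3 tokens and a 3-token sequence: [0, 2, 1].
tokens = [0, 2, 1]
probs = [
    [0.1, 0.2, 0.7],  # position 0: token 2 (the true next token) gets 0.7
    [0.3, 0.5, 0.2],  # position 1: token 1 (the true next token) gets 0.5
    [0.4, 0.3, 0.3],  # last position has no next token, so it is unused
]
print(round(next_token_loss(tokens, probs), 4))
```

A lower loss means the model assigned higher probability to the actual next token. Pretraining minimizes this average over very large text corpora, and supervised fine-tuning typically reuses the same loss on curated labeled examples.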
