Feedspot

1M

86

Image Credit: Feedspot

Understanding LLM Evaluation: Metrics, Benchmarks, and Real-World Applications

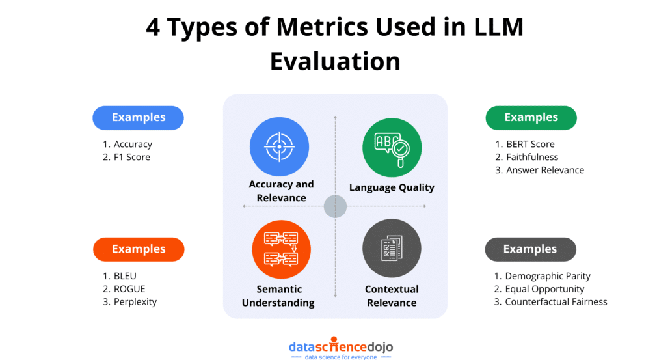

- Evaluation of large language models (LLMs) is crucial to ensure they perform well on tasks they were built for and remain accurate, reliable, and ethical for real-world use. Evaluation involves benchmarking models against a set of standards and then scoring their responses using different metrics to determine areas of strengths and weaknesses in a model's performance. Key benchmarks like Measuring Massive Multitask Language Understanding (MMLU), Holistic Evaluation of Language Models (HELM), and HellaSwag are designed to test various model capabilities and provide insights into a model's versatility, depth of understanding, ethical and operational readiness, and mathematical reasoning and problem-solving skills. Metrics like BLEU, ROUGE, Perplexity, BERTScore, Faithfulness, and Answer Relevance assess the quality and coherence of language, semantic understanding and contextual relevance, and robustness, safety, and ethical alignment. LLM leaderboards rank and compare models based on different benchmarks, providing researchers, developers, and users with a structured way to assess model performance.

- LLM evaluation is essential to ensure that language production is well-structured, natural, and easy to understand and to guarantee that models remain accurate, reliable, unbiased, and ethical for users in real-world situations.

- Evaluation of benchmarks involves measuring model responses against a set of standards to determine areas of strengths and weaknesses and to ensure that models remain accurate, reliable, and ready for real-world use.

- Evaluation benchmarks assess the abilities of models to solve specific language tasks, test their reasoning abilities across a wide range of topics, evaluate vernacular reasoning, and test mathematical reasoning and problem-solving skills and much more.

- LLM evaluation metrics like BLEU, ROUGE, Perplexity, BERTScore assess the quality and coherence of language, semantic understanding and contextual relevance, and robustness, safety, and ethical alignment.

- Leaderboards rank and compare LLMs based on various evaluation benchmarks and provide a roadmap for improvement, guiding decision-making for users and developers alike.

- The evaluation of LLMs is essential for ensuring that these models are well-suited for real-world use. By benchmarking these models and testing their abilities, developers can stay up to date with the latest advancements and create better, more reliable models for everyday use.

- LLM evaluation is a continually evolving field with room for improvement in quality, consistency, and speed. With ongoing advancements, these evaluation methods will continue to refine how we measure, trust, and improve LLMs for the better.

Read Full Article

5 Likes

For uninterrupted reading, download the app