Medium

Image Credit: Medium

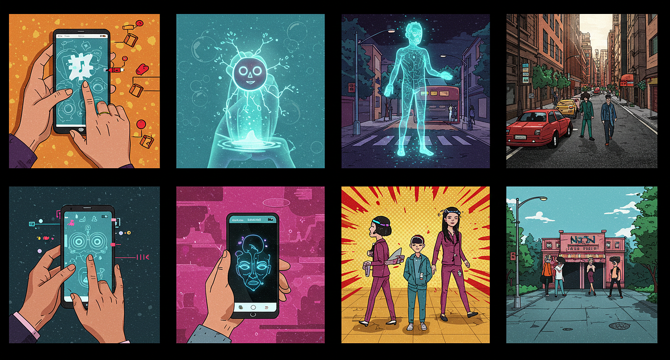

What are Hallucinations in AI? | VBM

- Hallucinations in AI remain a persistent challenge despite advancements in the technology, leading to inaccurate outputs with real-world consequences.

- AI-generated misinformation can mislead users and erode confidence in AI-driven solutions in fields such as journalism, healthcare, and legal proceedings.

- Factors contributing to AI hallucinations include incomplete training data, flawed inference mechanisms, and the inherent limitations of AI reasoning.

- Mitigation efforts include Retrieval-Augmented Generation (RAG), improved model training, and external verification mechanisms, but experts stress that users still need to stay vigilant and fact-check AI outputs.
