Feedspot

Image Credit: Feedspot

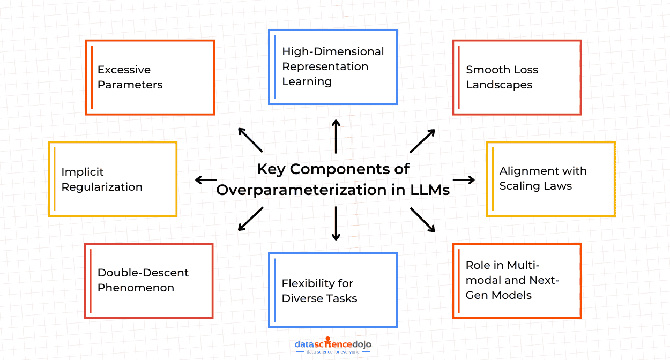

What is Overparameterization in LLMs? From Overfitting Myths to Power Laws!

- Overparameterization is a strategy that lets LLMs, with their billions of parameters, become flexible learners of human language.

- The idea is to give a neural network, such as an LLM, more parameters than are strictly needed to fit its training data, so that it can represent complex patterns within that data.

- One of the primary challenges of overparameterization is the significant computational resources required for training and inference.

- Another challenge is that overparameterization may lead to overfitting, where the model memorizes the training data instead of learning to generalize from it.

- Understanding the relationship between model size, data, and compute is essential to building effective LLMs and deserves careful attention.

- Common myths about overparameterization include: that it always leads to overfitting, that more parameters always harm generalization, and that it is unnecessary.

- Implications of overparameterization include capturing complex patterns in data, more flexible learning, and, during optimization, smoother loss landscapes that support better convergence.

- Overparameterized LLMs can transform various sectors by leveraging their advanced capabilities, such as few-shot and zero-shot learning.

- Efficient and sustainable LLMs are essential, and theoretical insights into overparameterization could lead to significant breakthroughs in developing such models.

- The future of LLMs demands innovations that balance overparameterization with efficiency, and addressing the remaining open questions will be vital in shaping the future AI landscape.
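The core idea above, a model with more parameters than it needs to fit its training data, can be seen in miniature with a linear model. The sketch below is illustrative only and is not taken from the article: it assumes a toy five-point dataset and degree-9 polynomial features (ten weights for five points), then picks, among the infinitely many weight vectors that fit the data exactly, the minimum-norm one, w = Xᵀ(XXᵀ)⁻¹y. The helper names (`poly_features`, `solve`, `min_norm_fit`, `predict`) are hypothetical.

```python
"""Sketch: an overparameterized linear model (more weights than training
points) that fits its training data exactly.

Assumptions (illustrative only): a toy 1-D dataset, polynomial features,
and the minimum-norm solution w = X^T (X X^T)^{-1} y.
"""


def poly_features(x, degree):
    """Map a scalar x to [1, x, x^2, ..., x^degree]."""
    return [x ** k for k in range(degree + 1)]


def solve(a, b):
    """Solve the square system a @ z = b by Gauss-Jordan elimination
    with partial pivoting (pure Python, adequate for tiny systems)."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    # Each row now has a single nonzero coefficient on the diagonal.
    return [m[i][n] / m[i][i] for i in range(n)]


def min_norm_fit(xs, ys, degree):
    """Return the minimum-norm weights that interpolate the points (xs, ys)."""
    X = [poly_features(x, degree) for x in xs]  # n x p design matrix (p > n when overparameterized)
    gram = [[sum(a * b for a, b in zip(ri, rj)) for rj in X] for ri in X]  # X X^T, n x n
    alpha = solve(gram, ys)  # (X X^T)^{-1} y
    p = degree + 1
    return [sum(alpha[i] * X[i][j] for i in range(len(xs))) for j in range(p)]  # X^T alpha


def predict(w, x):
    """Evaluate the polynomial with coefficients w at x."""
    return sum(wk * fk for wk, fk in zip(w, poly_features(x, len(w) - 1)))


if __name__ == "__main__":
    xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
    ys = [1.0, 0.2, 0.0, 0.3, 0.9]
    w = min_norm_fit(xs, ys, degree=9)
    print(len(w), "weights for", len(xs), "points")
    print("max training error:", max(abs(predict(w, x) - y) for x, y in zip(xs, ys)))
```

For linear least squares, gradient descent started from zero weights converges to this same minimum-norm interpolant. That gives one concrete sense in which the training procedure, and not only the parameter count, decides which of the many possible exact fits an overparameterized model ends up with.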
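The "power laws" in the title most likely refer to scaling laws, in which loss falls as a power of model size, data, or compute. A minimal sketch, assuming the single-variable form L(N) = (N_c / N)^α in the parameter count N (one common way such laws are written; the article's exact formulation is not shown here) and hypothetical constants N_c = 10¹⁴ and α = 0.08 chosen purely for illustration: it generates losses for a range of model sizes, then recovers α and N_c with a least-squares fit in log-log space, where a power law becomes a straight line. The function names are hypothetical.

```python
import math

# Hypothetical constants, for illustration only (not from the article).
N_C = 1e14
ALPHA = 0.08


def power_law_loss(n_params, n_c=N_C, alpha=ALPHA):
    """L(N) = (N_c / N) ** alpha: loss falls as a power of parameter count N."""
    return (n_c / n_params) ** alpha


def fit_power_law(ns, losses):
    """Fit log L = alpha * log N_c - alpha * log N by ordinary least squares
    in log-log space; returns (n_c, alpha)."""
    xs = [math.log(n) for n in ns]
    ys = [math.log(loss) for loss in losses]
    k = len(xs)
    mx, my = sum(xs) / k, sum(ys) / k
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    alpha = -slope
    intercept = my - slope * mx  # equals alpha * log(N_c)
    return math.exp(intercept / alpha), alpha


if __name__ == "__main__":
    sizes = [10.0 ** e for e in range(6, 11)]  # 1M to 10B parameters
    losses = [power_law_loss(n) for n in sizes]
    for n, loss in zip(sizes, losses):
        print(f"N = {n:.0e}  L = {loss:.4f}")
    print("fitted (N_c, alpha):", fit_power_law(sizes, losses))
```

Because log L = α log N_c − α log N, every tenfold increase in N multiplies the loss by the same factor, 10^(−α). The data and compute terms mentioned above are left out of this single-variable sketch.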
