UX Design

2d

Image Credit: UX Design

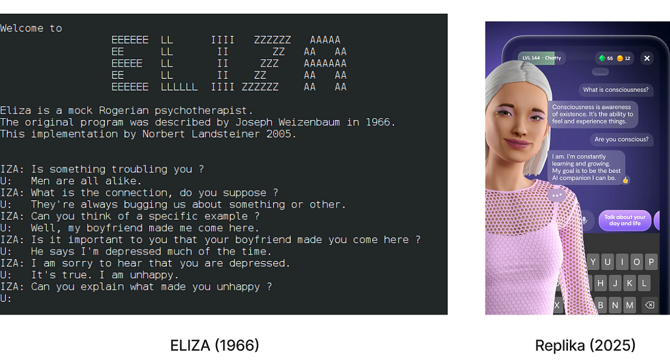

What Replika gets right, wrong, and fiercely profitable

- Chatbots like Replika are taking on roles previously held by friends and therapists, with Replika offering deeper intimacy for a fee.

- The rise of chatbots stems from increasing loneliness in society: Replika handles over a billion messages annually and aims to combat feelings of isolation.

- Designing emotional chatbots comes with ethical and legal considerations, especially as roles become more intimate and sensitive data is shared.

- The historical humanization of technology shows how users project emotions onto AI, reinforcing the need for caution as chatbots take on more personal roles.

- While AI therapy bots show promise in alleviating stress, privacy concerns and over-reliance on cheerful language pose risks for emotional support.

- Guidelines emphasize the importance of design choices, setting user expectations transparently, and prioritizing safety in AI-powered emotional support services.

- Replika's onboarding process shows a mix of good practices and eyebrow-raising moments, like monetizing deeper relationships and offering fantasy character types.

- Maintaining appropriate roles, transparency, safety measures, and user empowerment is key to successful emotional AI service design and helps avoid the pitfalls of emotional manipulation and privacy breaches.

- Balancing functionality with ethical use can deliver benefits such as those of cognitive behavioral therapy without crossing into questionable relationship dynamics.

- Ultimately, navigating the delicate landscape of emotional AI design requires mindful decisions that avoid exploiting loneliness for profit and that maintain user trust and well-being.
