Medium

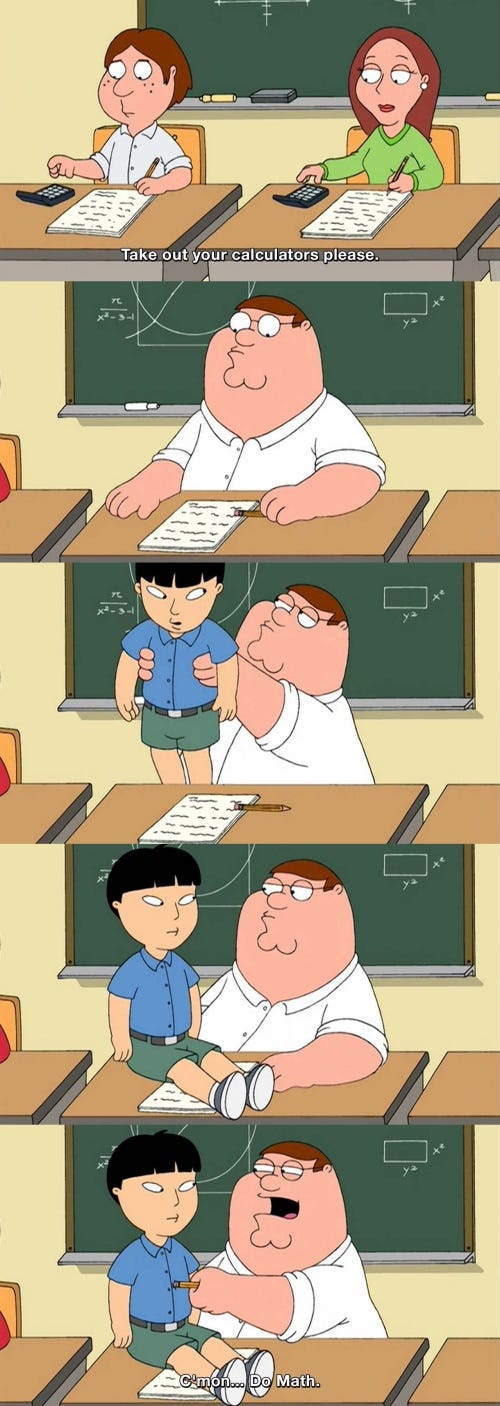

Image Credit: Medium

What Shouldn’t We Ask ChatGPT About Software?

- Sharing entire codebases, or significant portions of them, with external tools like ChatGPT in enterprise environments poses serious risks, because it can expose sensitive business logic, API keys, and internal details.

- Pasting code into AI tools may violate internal policies, privacy agreements, or data protection laws, and can lead to unintentional data breaches.

- Developers should avoid pasting full or sensitive project code into AI tools, especially for commercial or custom applications, to prevent security and privacy risks.

- Asking ChatGPT to help with unethical or illegal activities, such as exploiting systems through SQL injection or cracking passwords, carries legal and ethical risks and should be avoided.

- ChatGPT has no access to local development environments, logs, or system configurations, which limits its ability to diagnose live issues accurately.

- Asking ChatGPT to build full-scale projects is a common misuse: it tends to produce generic, hypothetical answers that can encourage incorrect development practices.

- Developers should strive to understand the code they write rather than relying solely on ChatGPT, using it as a learning aid rather than a substitute for their own effort.

- Vague or incomplete coding questions tend to yield poor-quality responses; clear, detailed questions with the relevant context get far more useful help.

- Used judiciously as a programming aid, ChatGPT can strengthen software development skills, provided the emphasis stays on understanding and learning rather than blind reliance.
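The article itself contains no code, but its warning about API keys leaking through pasted snippets can be made concrete. The following is a minimal sketch, assuming Python source and a hypothetical `redact_secrets` helper with a few illustrative name patterns (not taken from the article or any real tool), that masks quoted values assigned to secret-looking names before a snippet is shared. It is no substitute for a dedicated secret scanner or your organization's policy: it only catches values assigned to names containing words like "key", "secret", "token", or "password".

```python
import re

# Hypothetical, illustrative pattern only: matches assignments such as
#   DB_PASSWORD = "..."   or   api_key: '...'
# where the name contains api_key/apikey/api-key, secret, token, or password.
SECRET_ASSIGNMENT = re.compile(
    r"(?i)\b(\w*(?:api[_-]?key|secret|token|password)\w*)"  # 1: variable name
    r"(\s*[:=]\s*)"                                          # 2: separator
    r"([\"'])(.*?)\3"                                        # 3: quote, 4: value
)


def redact_secrets(source: str) -> str:
    """Replace quoted values assigned to secret-looking names with <REDACTED>."""
    # Keep the name, separator, and quote style; drop only the value.
    return SECRET_ASSIGNMENT.sub(r"\1\2\3<REDACTED>\3", source)


if __name__ == "__main__":
    snippet = 'DB_PASSWORD = "hunter2"\napi_key: \'abc123\'\nretries = 3'
    print(redact_secrets(snippet))
```

Because the pattern matches on variable names rather than key formats, it will miss secrets stored under innocuous names and may over-redact names like `tokenizer`; it only reduces accidental exposure and does not make sharing sensitive code safe.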
