Hackernoon

Image Credit: Hackernoon

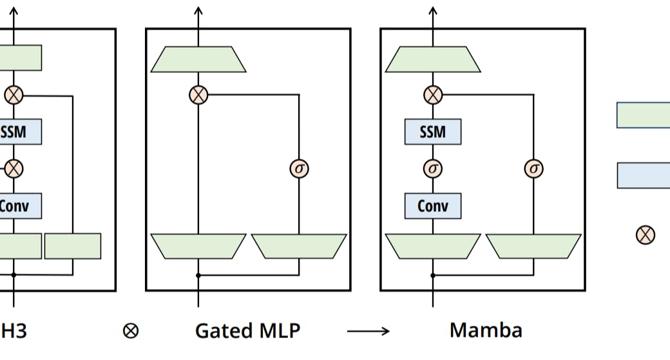

A Simplified State Space Model Architecture

- The paper discusses a simplified architecture for selective state space models (SSMs).

- Like structured SSMs, selective SSMs are standalone sequence transformations that can be flexibly incorporated into neural networks.

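- Existing SSM architectures such as H3 interleave a block inspired by linear attention with an MLP block. The simplified architecture merges these two components into a single block that is stacked homogeneously.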

- This merged design is inspired by the gated attention unit (GAU), which did something similar for attention.
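The idea of one merged, homogeneously stacked block can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the paper's implementation. The block projects each token into two branches. One branch runs through a selective scan in which the step size `delta` and the vectors `B` and `C` are computed from the current input. The other branch acts as a SiLU gate, which is the GAU-like part. All names (`GatedSSMBlock`, `selective_ssm`, `run_stack`) are hypothetical. The sketch also makes several simplifying assumptions: random weights, a full linear projection for `delta`, no short causal convolution, no normalization, and a sequential loop in place of an optimized parallel scan.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # row-major, shape (out_dim, in_dim)


def rand_matrix(rng: random.Random, rows: int, cols: int, scale: float = 0.1) -> Matrix:
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(W: Matrix, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def silu(v: float) -> float:
    # v * sigmoid(v), with sigmoid written via tanh to avoid overflow
    return v * 0.5 * (1.0 + math.tanh(0.5 * v))


def softplus(v: float) -> float:
    # numerically stable log(1 + e^v), keeps the step size positive
    return max(v, 0.0) + math.log1p(math.exp(-abs(v)))


def selective_ssm(xs: List[Vector], A: Matrix, W_delta: Matrix,
                  W_B: Matrix, W_C: Matrix, D_skip: Vector) -> List[Vector]:
    """Causal scan over a diagonal SSM whose delta, B and C depend on each input x_t."""
    d_inner, n_state = len(A), len(A[0])
    h = [[0.0] * n_state for _ in range(d_inner)]  # hidden state per channel
    ys = []
    for x in xs:
        delta = [softplus(v) for v in matvec(W_delta, x)]  # per-channel step size
        B = matvec(W_B, x)  # length n_state, shared across channels
        C = matvec(W_C, x)  # length n_state, shared across channels
        y = []
        for d in range(d_inner):
            for n in range(n_state):
                a_bar = math.exp(delta[d] * A[d][n])  # discretized A (zero-order hold)
                h[d][n] = a_bar * h[d][n] + delta[d] * B[n] * x[d]  # simplified discretized B
            y.append(sum(C[n] * h[d][n] for n in range(n_state)) + D_skip[d] * x[d])
        ys.append(y)
    return ys


class GatedSSMBlock:
    """One block that merges the sequence-mixing SSM path with a gated, MLP-like path."""

    def __init__(self, d_model: int, expand: int = 2, n_state: int = 4, seed: int = 0):
        rng = random.Random(seed)
        self.d_inner = expand * d_model
        self.in_proj = rand_matrix(rng, 2 * self.d_inner, d_model)  # yields both branches
        self.out_proj = rand_matrix(rng, d_model, self.d_inner)
        self.W_delta = rand_matrix(rng, self.d_inner, self.d_inner)
        self.W_B = rand_matrix(rng, n_state, self.d_inner)
        self.W_C = rand_matrix(rng, n_state, self.d_inner)
        # negative real A, so exp(delta * A) lies in (0, 1) and the state decays
        self.A = [[-(n + 1.0) for n in range(n_state)] for _ in range(self.d_inner)]
        self.D_skip = [1.0] * self.d_inner

    def __call__(self, xs: List[Vector]) -> List[Vector]:
        projected = [matvec(self.in_proj, x) for x in xs]
        us = [[silu(v) for v in p[: self.d_inner]] for p in projected]  # SSM branch
        zs = [p[self.d_inner:] for p in projected]  # gate branch
        ys = selective_ssm(us, self.A, self.W_delta, self.W_B, self.W_C, self.D_skip)
        gated = [[y * silu(z) for y, z in zip(yv, zv)] for yv, zv in zip(ys, zs)]
        # residual connection around the whole block
        return [[xi + oi for xi, oi in zip(x, matvec(self.out_proj, g))]
                for x, g in zip(xs, gated)]


def run_stack(blocks: List[GatedSSMBlock], xs: List[Vector]) -> List[Vector]:
    for block in blocks:  # the same block type repeated, no separate MLP layers
        xs = block(xs)
    return xs


d_model, seq_len = 4, 6
blocks = [GatedSSMBlock(d_model, seed=i) for i in range(3)]
rng = random.Random(42)
seq = [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(seq_len)]
out = run_stack(blocks, seq)
print(len(out), len(out[0]))  # 6 4
```

Because every layer is the same `GatedSSMBlock`, the model is a homogeneous stack rather than an alternation of attention-like and MLP layers. The scan is also causal, so changing a later token leaves all earlier outputs unchanged.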
