Deep Learning News

Medium

331

Image Credit: Medium

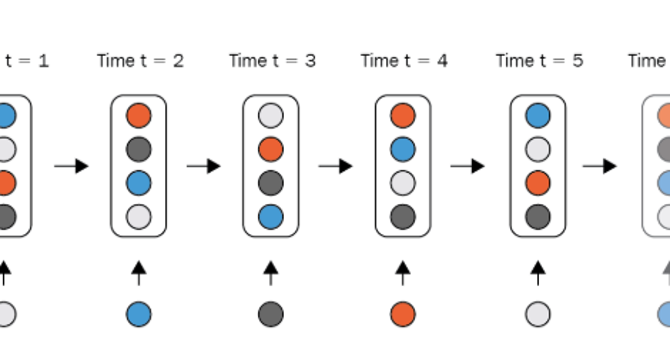

Understanding Activation Functions and the Vanishing Gradient Problem

- Neural networks face the vanishing gradient problem as gradients become very small during backpropagation through many layers.

- Activation functions like Sigmoid and Tanh can lead to gradients close to zero, hindering weight updates in earlier layers.

- Choosing the right activation function is crucial for the performance of deep learning models: ReLU and Leaky ReLU mitigate the vanishing gradient issue in hidden layers, while Softmax is typically used at the output layer for classification.

- Understanding activation functions and their impact can assist in designing more effective and accurate neural networks.
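The shrinking effect described in the bullets above can be illustrated with a minimal sketch. It assumes a simplified chain of identical layers whose weights are all 1, so the backpropagated gradient is just the product of the local activation derivatives; the helper names (`sigmoid_grad`, `relu_grad`, `chained_gradient`) are hypothetical and not taken from the article.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid; its maximum is 0.25, reached at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def chained_gradient(local_grad, pre_activation: float, depth: int) -> float:
    """Product of `depth` identical local derivatives.

    Assumption for illustration only: every layer sees the same
    pre-activation and every weight equals 1.
    """
    return local_grad(pre_activation) ** depth


if __name__ == "__main__":
    for depth in (1, 5, 10, 20):
        print(
            depth,
            chained_gradient(sigmoid_grad, 0.0, depth),
            chained_gradient(relu_grad, 1.0, depth),
        )
```

Even in the sigmoid's best case (derivative 0.25), the product falls below one millionth after ten layers, while the ReLU product stays at 1 for positive inputs. Real networks also multiply by weight matrices, so this is only a rough picture of the trend.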


Medium

276

Image Credit: Medium

What I Learned About AI, ML, and DL in One Week

- AI can perform various tasks like OCR, face detection, text-to-speech, sentiment analysis, and language translation.

- Machine learning includes supervised learning, unsupervised learning, and reinforcement learning.

- Deep learning is a subset of machine learning that focuses on neural networks.

- Pros and cons exist for AI, ML, and DL applications, impacting areas like efficiency, bias, interpretability, and resource requirements.


Fourweekmba

315

Image Credit: Fourweekmba

Google’s Big Sleep AI Agent Achieves Historic First: Stopping a Cyberattack Before It Happens

- Google's Big Sleep AI agent detected and prevented a cyberattack, a historic first.

- Developed by Google, Big Sleep flagged CVE-2025-6965, a SQLite vulnerability, before it could be exploited in the wild.

- AI agent simulates human reasoning, finds vulnerabilities, and aids in proactive defense.

- AI arms race in cybersecurity anticipated as Google leads innovation with Big Sleep.


Medium

81

Image Credit: Medium

Ethical AI for Animal Emotions: Ensuring Fairness in Animal Welfare Models

- Ethical data science is crucial for ensuring fairness in animal emotion models by guiding responsible design, development, and deployment of AI systems that interpret animal emotions.

- Addressing challenges like data bias, interpretability, and welfare considerations can transform AI tools into trustworthy allies for animal welfare.

- An example project aiming to decode canine emotions using AI highlighted the importance of ethical data science in improving model accuracy and respecting animals' dignity.

- Fairness in animal emotion models involves empathy, transparency, and collaboration, going beyond algorithms to prioritize animal welfare.


Medium

65

Curly Dock — The Wild Green Endorsed by Science!

- Curly dock, a wild green, has been endorsed by scientific studies for its health benefits.

- Studies have shown that the juice from curly dock's leaves can eliminate dangerous bacteria like Staphylococcus aureus and E. coli.

- Curly dock may help with iron deficiency: it contains iron along with vitamin C, which aids iron absorption, and this is noted as particularly relevant for women.

- The roots of curly dock have natural anthraquinones with a mild laxative effect, potentially helpful for conditions like hemorrhoids, itchy skin, and eczema. Flavonoids found in curly dock also protect brain cells from stress and may improve memory.


Medium

377

Image Credit: Medium

What’s New in Generative AI? | Mid-2025 Deep Dive

- Generative AI in mid-2025 offers real-time, synchronous multimodal interaction for users.

- AI agents take autonomous actions like booking travel and managing documents.

- Local, open models gain popularity, enabling personal AI assistants and offline training.

- Video and image generation tools advance, along with global language support in AI.

- AI safety initiatives and integration of AI into everyday tools mark the AI landscape.


Medium

362

When I Started Meditating, I Finally Understood AI

- Meditation is compared to AI training as both involve learning to pay attention and ignore distractions.

- By meditating, individuals can observe their thoughts as patterns learned over time, leading to increased awareness.

- Meditation helps individuals to make mindful choices by creating a space between stimulus and response.

- Mindfulness can help individuals recognize and hold old biases or scripts with curiosity, leading to increased presence and openness.


Medium

20

Image Credit: Medium

AI in Textile Dyeing

- AI in textile dyeing is revolutionizing the industry by improving color prediction accuracy and reducing waste, leading to better quality fabrics and a smaller environmental footprint.

- Machine learning models are being used to predict color outcomes more accurately and adjust dye recipes in real-time, addressing age-old challenges in the textile dyeing process.

- The use of AI in textile dyeing has led to a significant reduction in the amount of fabric waste traditionally produced during the dyeing process.

- Sustainable innovations in AI-driven systems are enabling textile manufacturers to achieve vibrant, consistent colors on fabrics with greater efficiency and precision.


Medium

111

Image Credit: Medium

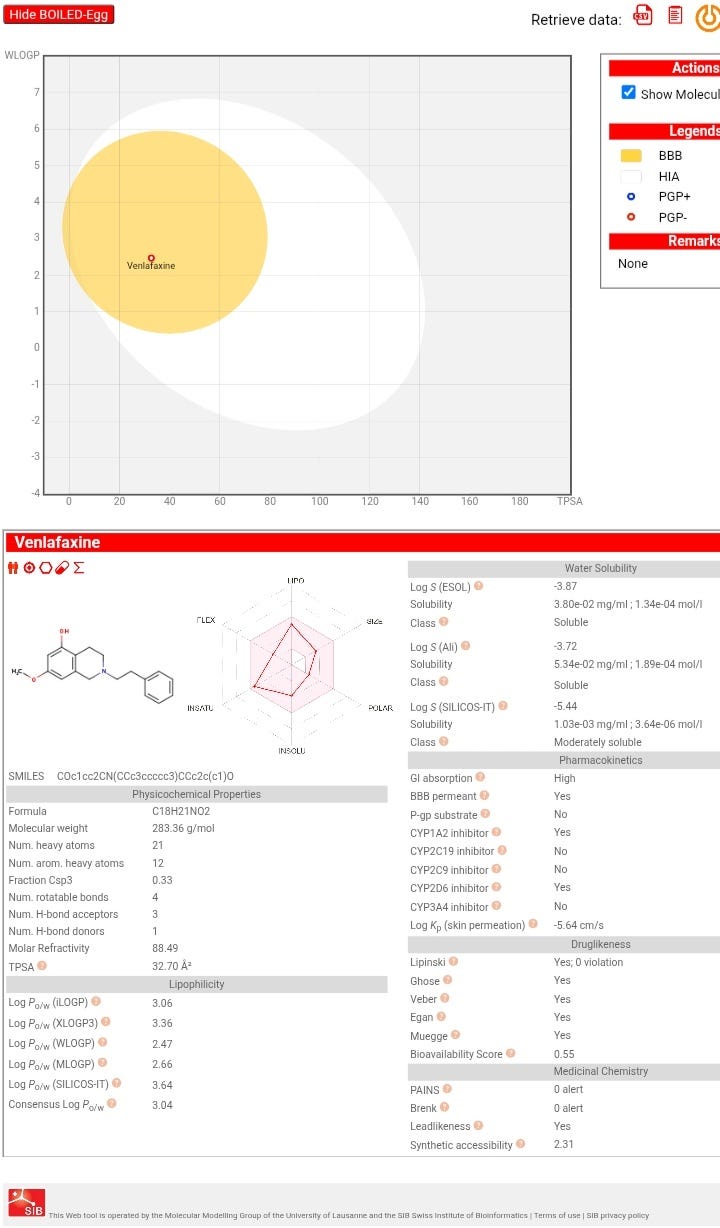

Venlafaxine: Assessing the Potential for Repurposing

- Venlafaxine, commonly used for depression and anxiety disorders, is a serotonin-norepinephrine reuptake inhibitor (SNRI) whose structure is consistent with CNS activity.

- Research to validate its biological targets integrates experimental, computational, and multiomics approaches like computational target prediction and high-throughput screening.

- Machine learning enhances prediction of target druggability by integrating diverse protein features, addressing data imbalance, and predicting drug-target interactions for faster drug discovery.

- ML models can predict side effects, prioritize targets for specific diseases, and improve the efficiency of drug development by scoring and ranking targets based on predicted therapeutic effects.


Medium

2k

Image Credit: Medium

How the Data Science Talent Gap Will Be Narrowed by 2026

- The widening data science talent gap is expected to be addressed by 2026 through a combination of upskilling, industry-education partnerships, and AI-driven automation.

- Organizations have started investing in existing employees by providing targeted training to enhance data science skills internally.

- Educational institutions are collaborating closely with industry partners to align curricula with practical industry requirements.

- AI tools are being increasingly used to automate mundane data tasks, allowing human experts to focus on more complex and strategic aspects of data science.


Medium

46

Image Credit: Medium

[Module 1.3]: Data Science Foundation — NumPy, Pandas, and Visualization Mastery

- The tutorial focuses on mastering NumPy, Pandas, and data visualization for data science and AI.

- NumPy is highlighted as the mathematical engine for AI computations, optimized for ML algorithms.

- Pandas is emphasized for handling real-world data complexities and preparing data for ML.

- Data visualization is essential for understanding data patterns and preventing errors in AI models.


Medium

228

VaultsFi Review – Your Gateway to Daily Crypto Profits

- VaultsFi offers an opportunity for daily crypto profits with tracking of returns on a user's dashboard.

- New users receive a $5 signup bonus upon registration, providing them with a head start in crypto earnings.

- Users can access exclusive investment plans tailored for beginners and seasoned crypto users to maximize returns.

- VaultsFi provides a 10-level referral commission system for passive rewards and offers bonuses for engaging with their Telegram Channel.


Medium

344

Image Credit: Medium

Everything Is Math: How Artificial Neural Networks and the Internet Are Just Beautiful Equations

- Artificial neural networks and the internet are intricately woven with beautiful equations, functions, and optimizations.

- Neural networks, built on linear algebra, operate through matrix operations, activation functions, and learning through optimization methods like gradient descent and backpropagation.

- The internet can be modeled as a giant mathematical graph of billions of interconnected web pages, which search engines rank using algorithms such as PageRank.

- Modern technologies, from recommendation systems to language models, heavily rely on mathematical concepts like matrix factorization and embedding to provide functionalities like content recommendations and language understanding.
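To make the graph view of the web concrete, here is a minimal sketch of PageRank computed by power iteration on a tiny hand-made link graph. The graph, the function name `pagerank`, the damping factor of 0.85, and the fixed iteration count are illustrative assumptions, not details from the article; production search ranking is far more involved.

```python
from typing import Dict, List


def pagerank(
    links: Dict[str, List[str]],
    damping: float = 0.85,
    iterations: int = 50,
) -> Dict[str, float]:
    """Approximate PageRank scores by repeated redistribution of rank.

    `links` maps each page to the pages it links to. Assumption: every
    link target also appears as a key in `links`.
    """
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page receives a small baseline share (the "random jump").
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outgoing in links.items():
            if outgoing:
                # Split this page's damped rank evenly among its links.
                share = damping * rank[page] / len(outgoing)
                for target in outgoing:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank evenly.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


if __name__ == "__main__":
    toy_web = {"a": ["b", "c"], "b": ["c"], "c": ["a"]}
    for page, score in sorted(pagerank(toy_web).items()):
        print(page, round(score, 4))
```

In this toy graph, page `c` is linked from both `a` and `b`, so it ends up with the highest score, and the scores always sum to 1.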


Medium

372

Unlocking Real-World Utility from Your 3DOS Dashboard Activity

- 3DOS is a decentralized operating system with a dashboard for managing data, applications, and services.

- Ways users can unlock real-world utility from their 3DOS dashboard activity include earning rewards, accessing exclusive services, building credibility, and informing decision-making.

- To get started, users should explore the dashboard, set goals, track progress, and engage with the community.

- By following these steps, users can enhance productivity, efficiency, and overall experience through the data and insights from their 3DOS dashboard activity.


Medium

179

Image Credit: Medium

SLA Gymnastics — Crafting Five‑Nines Commitments on Flaky GPU Supply

- GPU supply chain challenges lead to volatility in availability and preempted instances.

- Five-nines reliability commitment requires fault-tolerant infrastructure and SLA-aware scheduling strategies.

- Multi-region deployment, hardware diversity, and financial planning are crucial for high SLA services.

- Investing in redundancy, real-time synchronization, and risk analysis optimizes reliability vs. cost.

- Mastering 'SLA gymnastics' is key for providers to differentiate and ensure customer trust.
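The arithmetic behind a five-nines commitment can be sketched as follows. This is a rough illustration under a loudly stated assumption: replicas fail independently and any single healthy replica can serve traffic. Correlated GPU shortages or regional outages break that assumption, so real planning needs more than this. The function names are hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def downtime_budget_minutes(availability: float) -> float:
    """Yearly downtime allowed by an availability target such as 0.99999."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def combined_availability(single: float, replicas: int) -> float:
    """Availability of `replicas` redundant copies.

    Assumption: failures are independent and one healthy copy is enough.
    """
    return 1.0 - (1.0 - single) ** replicas


def replicas_needed(single: float, target: float) -> int:
    """Smallest replica count whose combined availability meets `target`."""
    if not (0.0 < single < 1.0 and 0.0 < target < 1.0):
        raise ValueError("availabilities must be strictly between 0 and 1")
    count = 1
    while combined_availability(single, count) < target:
        count += 1
    return count


if __name__ == "__main__":
    print(downtime_budget_minutes(0.99999))  # yearly budget for five nines
    print(replicas_needed(0.99, 0.99999))    # copies of a 99% resource
    print(replicas_needed(0.95, 0.99999))    # copies of a 95% resource
```

Five nines leaves a budget of roughly 5.26 minutes of downtime per year. Under the independence assumption, three copies of a 99%-available resource or four copies of a 95%-available one would meet that target, which shows why redundancy cost rises quickly as the underlying supply gets flakier.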

