Amazon

Image Credit: Amazon

Accelerating Articul8’s domain-specific model development with Amazon SageMaker HyperPod

- Articul8 is accelerating the training and deployment of its domain-specific models (DSMs) using Amazon SageMaker HyperPod, achieving over 95% cluster utilization and a 35% improvement in productivity.

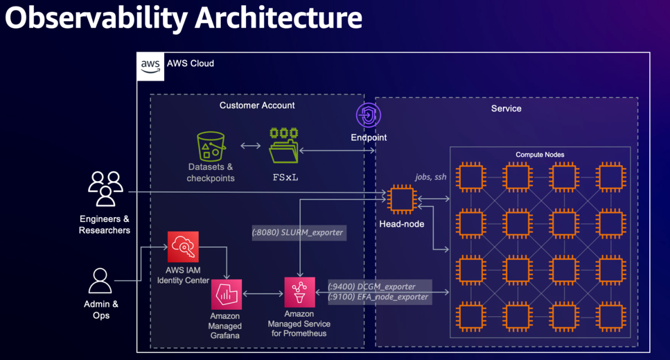

- SageMaker HyperPod provides fault-tolerant compute clusters, efficient cluster utilization through observability, and seamless model experimentation using Slurm and Amazon EKS.

- Articul8 develops domain-specific models for industry sectors like supply chain, energy, and semiconductors, achieving significant accuracy and performance gains over general-purpose models.

- The A8-SupplyChain model achieves 92% accuracy and threefold performance gains over general-purpose models.

- SageMaker HyperPod enabled Articul8 to rapidly iterate on DSM training, optimize model training performance, and reduce AI deployment time and total cost of ownership.

- The platform offers efficient cluster management, automated failure recovery, and observability through Amazon CloudWatch and Grafana.

- Articul8's setup, which pairs SageMaker HyperPod with Amazon Managed Grafana, enabled rapid experimentation and led to superior real-world performance for its domain-specific models.

- The cluster setup includes head and compute nodes, shared volumes, local storage, Slurm scheduler, and accounting for job runtime information.

- Articul8 validated the expected performance of its A100 GPU instances and achieved near-linear scaling with distributed training, significantly reducing training time.

- With SageMaker HyperPod, Articul8's DSMs delivered superior performance while AI deployment time and total cost of ownership both came down.
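
The summary cites "near linear scaling" and "over 95% cluster utilization" without defining them. As a rough sketch of how such figures are commonly computed, the Python below derives scaling efficiency from job runtimes and utilization from node-hours. The function names and all input numbers are hypothetical illustrations, not Articul8's measurements or any SageMaker HyperPod API; in practice the runtimes might come from Slurm accounting records.

```python
def scaling_efficiency(base_nodes: int, base_hours: float,
                       scaled_nodes: int, scaled_hours: float) -> float:
    """Fraction of the ideal (linear) speedup actually achieved.

    1.0 means perfectly linear scaling; values just below 1.0
    are what "near-linear" usually refers to.
    """
    speedup = base_hours / scaled_hours   # observed speedup
    ideal = scaled_nodes / base_nodes     # speedup under linear scaling
    return speedup / ideal


def cluster_utilization(busy_node_hours: float, total_node_hours: float) -> float:
    """Share of available node-hours that were spent running jobs."""
    return busy_node_hours / total_node_hours


# Hypothetical inputs, chosen only to illustrate the arithmetic.
eff = scaling_efficiency(base_nodes=1, base_hours=40.0,
                         scaled_nodes=4, scaled_hours=10.5)
util = cluster_utilization(busy_node_hours=960.0, total_node_hours=1000.0)

print(f"scaling efficiency: {eff:.1%}")
print(f"cluster utilization: {util:.1%}")
```

Measuring efficiency relative to a single-node baseline keeps the metric independent of absolute hardware speed, so the same calculation can be reused as the cluster grows.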
