Medium

Image Credit: Medium

Activation Functions in Neural Networks

- No Activation (also called the identity or linear activation) outputs its input unchanged: f(x) = x.

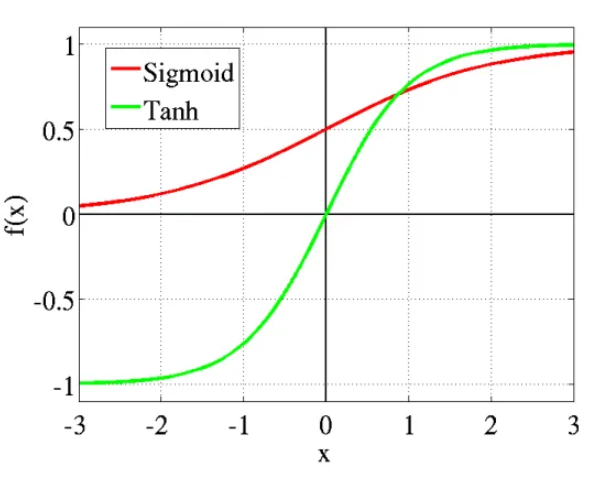

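- The Sigmoid activation function, f(x) = 1 / (1 + e^(-x)), squashes any real input into the range (0, 1), so its output can be read as a probability. This makes it a common choice for the output layer in binary classification.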

- The Tanh activation function maps inputs to the range (-1, 1). Unlike Sigmoid, it is zero-centered, so its outputs can be negative, near zero (neutral), or positive; it is commonly used in hidden layers.

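- The Rectified Linear Unit (ReLU), f(x) = max(0, x), returns the input for positive values and 0 otherwise. It is cheap to compute and does not saturate for positive inputs, which eases the vanishing-gradient problem; this is why it is a popular default in neural networks.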

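- The Leaky ReLU activation function is a variant of ReLU that multiplies negative inputs by a small slope α (commonly 0.01) instead of setting them to 0. Because the gradient for negative inputs stays non-zero, units are less likely to "die" (get stuck outputting 0).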

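- The Softmax activation function is used for multiclass classification. It turns a vector of raw scores z into a probability distribution over the classes, softmax(z)_i = e^(z_i) / Σ_j e^(z_j), so every output lies in (0, 1) and all outputs sum to 1.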
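The element-wise functions in the list above can be sketched in a few lines of plain Python. This is a minimal illustration, not code from the original article: the function names and the Leaky ReLU default `alpha=0.01` are assumptions chosen for the example, and real frameworks apply these functions to whole tensors rather than to single floats.

```python
import math


def identity(x: float) -> float:
    """'No activation': return the input unchanged, f(x) = x."""
    return x


def sigmoid(x: float) -> float:
    """Logistic sigmoid, 1 / (1 + e^(-x)); mathematically in (0, 1)."""
    # Branch on the sign so math.exp never receives a large positive argument,
    # which would raise OverflowError for x far below zero.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def tanh(x: float) -> float:
    """Hyperbolic tangent; zero-centered output in (-1, 1)."""
    return math.tanh(x)


def relu(x: float) -> float:
    """Rectified Linear Unit: max(0, x)."""
    return max(0.0, x)


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Leaky ReLU: x for x > 0, alpha * x otherwise (alpha is an assumed default)."""
    return x if x > 0 else alpha * x


if __name__ == "__main__":
    # Compare the functions on a negative, a zero, and a positive input.
    for x in (-2.0, 0.0, 2.0):
        print(x, identity(x), sigmoid(x), tanh(x), relu(x), leaky_relu(x))
```

The sign-based branch in `sigmoid` is a small design choice: computing `e^(-x)` directly for a very negative `x` would overflow, while the rewritten form `e^x / (1 + e^x)` gives the same value safely.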
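Softmax, unlike the other functions in the list, acts on a whole vector of scores at once. A minimal sketch in plain Python, assuming the scores arrive as a list of floats (the name `softmax` and its signature are illustrative, not from the article): subtracting the largest score before exponentiating is a standard numerical-stability trick that leaves the resulting probabilities unchanged.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw class scores (logits) into a probability distribution.

    softmax(z)_i = exp(z_i) / sum_j exp(z_j)
    """
    if not logits:
        raise ValueError("softmax needs at least one score")
    # Subtracting the max score cancels out in the ratio, so the result is
    # unchanged, but it keeps math.exp from overflowing on large logits.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    probs = softmax([2.0, 1.0, 0.1])
    print([round(p, 3) for p in probs])
    print(sum(probs))
```

For the scores `[2.0, 1.0, 0.1]`, the largest score receives the largest probability (about 0.66), and the three values sum to 1 up to floating-point rounding.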
