Towards Data Science

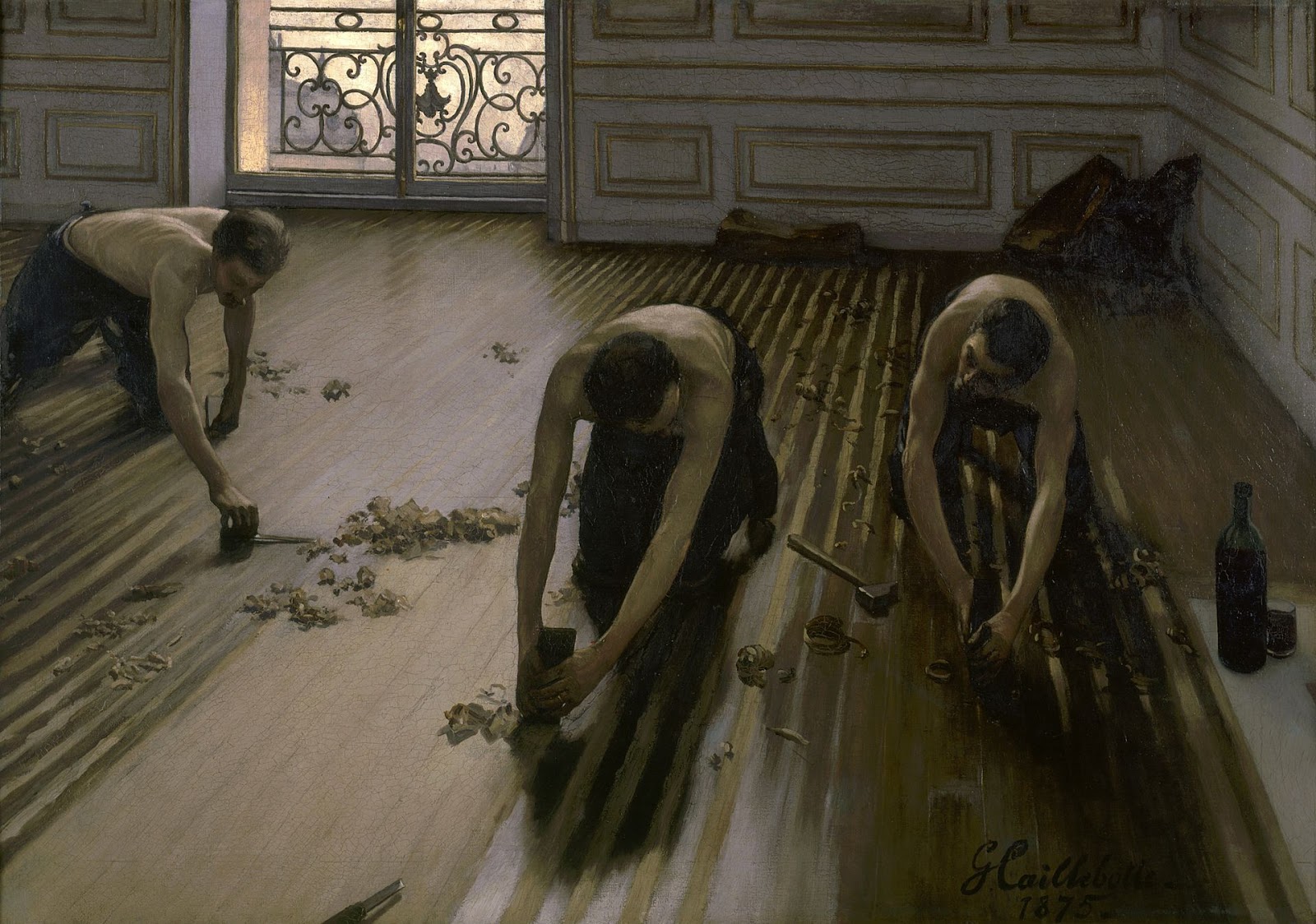

Image Credit: Towards Data Science

Anatomy of a Parquet File

- In the article, Parquet files are produced with PyArrow, which gives fine-grained control over the writer's parameters.

- Parquet stores a DataFrame's data in a column-oriented format, keeping the values of each column together instead of writing records row by row.

- Parquet files are commonly kept in object storage services such as Amazon S3 or Google Cloud Storage (GCS), where data pipelines can access them easily.

- A partitioning strategy organizes Parquet files into nested directories according to the values of partitioning keys such as birth_year and city.

- Partition pruning lets query engines read only the files they need, judged from the folder names alone, which reduces I/O.

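- Decoding a raw Parquet file means identifying the 4-byte 'PAR1' magic number at the start, the row groups holding the data, and the footer holding the metadata, which is followed by its 4-byte length and a closing 'PAR1'.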

- Parquet uses a hybrid layout: rows are partitioned into row groups, and within each row group the values are stored column by column, so statistics can be calculated per row group and used for query optimization.

- Page size in Parquet files is a trade-off: because a page is the unit that gets decompressed and decoded, its size balances memory consumption against data-retrieval efficiency.

- Encodings such as dictionary encoding, with compression applied on top, are used to shrink the columnar data stored in Parquet.

- Understanding Parquet's structure aids in making informed decisions on storage strategies and performance optimization.
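
The partitioning and partition-pruning points can be illustrated with a small sketch. It assumes the common Hive-style convention of `key=value` directory names; the file listing and the helpers `partition_values` and `prune` are hypothetical, and real query engines also handle typed values and richer predicates. The key idea is that pruning decides which files to read from their paths alone.

```python
from pathlib import PurePosixPath

# Hypothetical listing for a dataset partitioned by birth_year, then city
# (assumed Hive-style "key=value" directory names; file names are made up).
FILES = [
    "people/birth_year=1990/city=Paris/part-0.parquet",
    "people/birth_year=1990/city=Tokyo/part-0.parquet",
    "people/birth_year=1991/city=Paris/part-0.parquet",
    "people/birth_year=1991/city=Tokyo/part-0.parquet",
]


def partition_values(path: str) -> dict[str, str]:
    """Parse the key=value directory segments of a partitioned file path."""
    values: dict[str, str] = {}
    for segment in PurePosixPath(path).parent.parts:
        if "=" in segment:
            key, _, value = segment.partition("=")
            values[key] = value
    return values


def prune(files: list[str], **filters: str) -> list[str]:
    """Keep only files whose partition values match every filter.

    Only directory names are inspected and no file is opened,
    which is where the I/O saving of partition pruning comes from.
    """
    return [
        f for f in files
        if all(partition_values(f).get(k) == v for k, v in filters.items())
    ]


if __name__ == "__main__":
    for f in prune(FILES, birth_year="1991", city="Paris"):
        print(f)
```

Note that partition values parsed from paths are strings here, so filters are passed as strings too.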
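
The file framing described in the decoding point can be sketched as follows, assuming the layout from the Parquet format specification: `PAR1`, the row groups, the serialized footer metadata, a 4-byte little-endian footer length, then `PAR1` again. The helpers `locate_footer` and `build_fake_file` are hypothetical, and the payloads are placeholders rather than real Thrift-encoded metadata. Because the length sits at a fixed offset from the end, a reader can fetch the last 8 bytes first and then exactly the footer, which suits range requests against object storage.

```python
import struct

MAGIC = b"PAR1"


def locate_footer(data: bytes) -> tuple[int, int]:
    """Return (offset, length) of the serialized footer metadata.

    Assumed layout: MAGIC | row groups | footer | 4-byte LE footer length | MAGIC
    """
    if len(data) < 12 or data[:4] != MAGIC or data[-4:] != MAGIC:
        raise ValueError("not a Parquet file: missing PAR1 magic bytes")
    # The footer length is stored just before the trailing magic bytes.
    (footer_len,) = struct.unpack("<I", data[-8:-4])
    footer_start = len(data) - 8 - footer_len
    if footer_start < 4:
        raise ValueError("footer length points outside the file")
    return footer_start, footer_len


def build_fake_file(row_group_bytes: bytes, footer: bytes) -> bytes:
    """Assemble bytes with Parquet's framing around placeholder payloads."""
    return MAGIC + row_group_bytes + footer + struct.pack("<I", len(footer)) + MAGIC


if __name__ == "__main__":
    blob = build_fake_file(b"<column chunks>", b"<FileMetaData>")
    start, length = locate_footer(blob)
    print(start, length, blob[start:start + length])
```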
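
The hybrid layout and its statistics can be modeled in memory. This is a simplified sketch, not Parquet's on-disk format: rows are split into row groups, each group is stored column by column, and each column chunk keeps min/max values that a filter can consult to skip a whole group unread. The names `ColumnChunk`, `to_row_groups`, and `scan_greater_than` are hypothetical.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class ColumnChunk:
    """One column's values inside one row group, plus its min/max statistics."""
    values: list[Any]
    min_value: Any
    max_value: Any


def to_row_groups(rows: list[dict[str, Any]], row_group_size: int) -> list[dict[str, ColumnChunk]]:
    """Split rows horizontally into row groups, then store each group column by column."""
    groups = []
    for start in range(0, len(rows), row_group_size):
        group_rows = rows[start:start + row_group_size]
        columns = {}
        for name in group_rows[0]:
            values = [row[name] for row in group_rows]
            columns[name] = ColumnChunk(values, min(values), max(values))
        groups.append(columns)
    return groups


def scan_greater_than(groups: list[dict[str, ColumnChunk]], column: str, threshold: Any) -> tuple[list[Any], int]:
    """Return values > threshold and the number of row groups skipped via statistics."""
    matches: list[Any] = []
    skipped = 0
    for group in groups:
        chunk = group[column]
        if chunk.max_value <= threshold:
            # The max statistic proves no row in this group can match: skip it unread.
            skipped += 1
            continue
        matches.extend(v for v in chunk.values if v > threshold)
    return matches, skipped


if __name__ == "__main__":
    people = [{"name": f"p{i}", "birth_year": 1980 + i} for i in range(10)]
    groups = to_row_groups(people, row_group_size=4)
    print(scan_greater_than(groups, "birth_year", 1986))
```

Skipping only pays off when values are clustered by the filter column; if every row group spans the full range of birth years, the statistics can never rule a group out.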
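
Dictionary encoding works well on low-cardinality columns such as city. The sketch below stores each distinct value once, replaces the column's values with small integer indices, and then run-length encodes those indices. It is a simplification: Parquet's actual format encodes the indices with an RLE/bit-packing hybrid, and compression codecs (such as Snappy, gzip, or zstd) are applied afterward; both are omitted here. The function names are hypothetical.

```python
def dictionary_encode(values: list[str]) -> tuple[list[str], list[int]]:
    """Replace each value with an index into a list of distinct values."""
    dictionary: list[str] = []
    index_of: dict[str, int] = {}
    indices: list[int] = []
    for value in values:
        if value not in index_of:
            index_of[value] = len(dictionary)
            dictionary.append(value)
        indices.append(index_of[value])
    return dictionary, indices


def run_length_encode(indices: list[int]) -> list[tuple[int, int]]:
    """Collapse consecutive repeats into (index, run_length) pairs."""
    runs: list[tuple[int, int]] = []
    for index in indices:
        if runs and runs[-1][0] == index:
            runs[-1] = (index, runs[-1][1] + 1)
        else:
            runs.append((index, 1))
    return runs


def decode(dictionary: list[str], runs: list[tuple[int, int]]) -> list[str]:
    """Invert both steps: expand the runs, then look each index up."""
    return [dictionary[index] for index, length in runs for _ in range(length)]


if __name__ == "__main__":
    cities = ["Paris", "Paris", "Paris", "Tokyo", "Tokyo", "Paris"]
    dictionary, indices = dictionary_encode(cities)
    runs = run_length_encode(indices)
    print(dictionary, indices, runs)
    assert decode(dictionary, runs) == cities
```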
