Dev

Image Credit: Dev

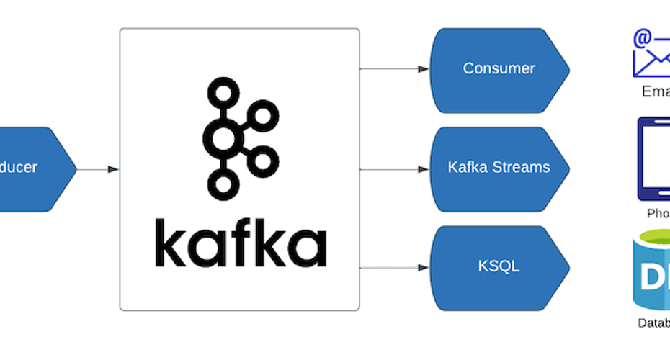

Apache Kafka with Docker

- Apache Kafka is a distributed event-streaming platform widely used to build data pipelines and real-time applications. Running it in Docker removes the need to install Kafka and its dependencies on the host, so developers can start a local broker quickly and experiment with it.

- The process of running Apache Kafka with Docker involves creating a Docker Compose file, spinning up the containers, verifying the container status, creating a topic, and producing/consuming messages.

- A GitHub repository is provided as a reference for writing the Docker Compose file. After the containers are started with Docker Compose, the `docker ps` command confirms that the Kafka container is running.

- Creating a topic and producing/consuming messages requires running specific commands against the Kafka container, identified by its container ID (shown in the `docker ps` output). This guide provides a straightforward way to experiment with Kafka using Docker.
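
The start-up and verification steps described above can be scripted. Below is a minimal Python sketch, not the article's own code, that assumes the Compose file is named `docker-compose.yml` and that the Kafka container's name contains `kafka` (both are assumptions; adjust them to match your file). It starts the stack detached and then asks `docker ps` for the matching container's ID.

```python
import subprocess


def start_stack(compose_file: str = "docker-compose.yml") -> None:
    """Start the services defined in compose_file in the background (detached)."""
    # Same as typing `docker compose -f docker-compose.yml up -d` in a shell;
    # check=True raises CalledProcessError if Compose exits with an error.
    subprocess.run(["docker", "compose", "-f", compose_file, "up", "-d"], check=True)


def parse_ps_output(output: str) -> list[dict[str, str]]:
    """Parse `docker ps` lines formatted as ID<TAB>NAMES<TAB>STATUS."""
    containers = []
    for line in output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        container_id, name, status = line.split("\t", 2)
        containers.append({"id": container_id, "name": name, "status": status})
    return containers


def find_kafka_container(name_filter: str = "kafka") -> str:
    """Return the ID of the first running container whose name contains name_filter."""
    # "kafka" is an assumed name; docker ps only lists running containers by default.
    result = subprocess.run(
        [
            "docker", "ps",
            "--filter", f"name={name_filter}",
            "--format", "{{.ID}}\t{{.Names}}\t{{.Status}}",
        ],
        check=True,
        capture_output=True,
        text=True,
    )
    containers = parse_ps_output(result.stdout)
    if not containers:
        raise RuntimeError(f"no running container matches name={name_filter!r}")
    return containers[0]["id"]
```

Typical use is `start_stack()` followed by `print(find_kafka_container())`. The parsing is kept in a separate function so it can be checked without a Docker daemon.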
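
The topic and produce/consume step typically comes down to running Kafka's command-line tools inside the container with `docker exec`. The Python sketch below only builds those command lines. It rests on several assumptions, none confirmed by the article. The scripts are on `PATH` with a `.sh` suffix, as in Bitnami images; Confluent images drop the suffix (`kafka-topics`), and the official `apache/kafka` image keeps them under `/opt/kafka/bin/`. The broker listens on `localhost:9092` inside the container. The setup has a single broker, hence replication factor 1. The topic name `test-topic` is hypothetical.

```python
# Assumed broker address as seen from inside the container; adjust to your listeners.
BOOTSTRAP_SERVER = "localhost:9092"


def exec_args(container_id: str, *command: str, interactive: bool = False) -> list[str]:
    """Build the argument list for `docker exec [-it] <container_id> <command...>`."""
    args = ["docker", "exec"]
    if interactive:
        args.append("-it")  # keep stdin open and allocate a TTY
    return [*args, container_id, *command]


def create_topic_args(container_id: str, topic: str) -> list[str]:
    """kafka-topics.sh --create with 1 partition and replication factor 1 (single broker assumed)."""
    return exec_args(
        container_id,
        "kafka-topics.sh", "--create",
        "--topic", topic,
        "--partitions", "1",
        "--replication-factor", "1",
        "--bootstrap-server", BOOTSTRAP_SERVER,
    )


def producer_args(container_id: str, topic: str) -> list[str]:
    """Console producer: each line typed on stdin is sent as one message."""
    return exec_args(
        container_id,
        "kafka-console-producer.sh",
        "--topic", topic,
        "--bootstrap-server", BOOTSTRAP_SERVER,
        interactive=True,
    )


def consumer_args(container_id: str, topic: str) -> list[str]:
    """Console consumer: --from-beginning also prints messages sent before it started."""
    return exec_args(
        container_id,
        "kafka-console-consumer.sh",
        "--topic", topic,
        "--from-beginning",
        "--bootstrap-server", BOOTSTRAP_SERVER,
        interactive=True,
    )
```

To use it, pass the container ID from `docker ps` and hand each list to `subprocess.run(..., check=True)`. Create the topic once, then run the producer and the consumer in separate terminals, since both keep running until interrupted with Ctrl+C.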
